Object Storage on JUDAC

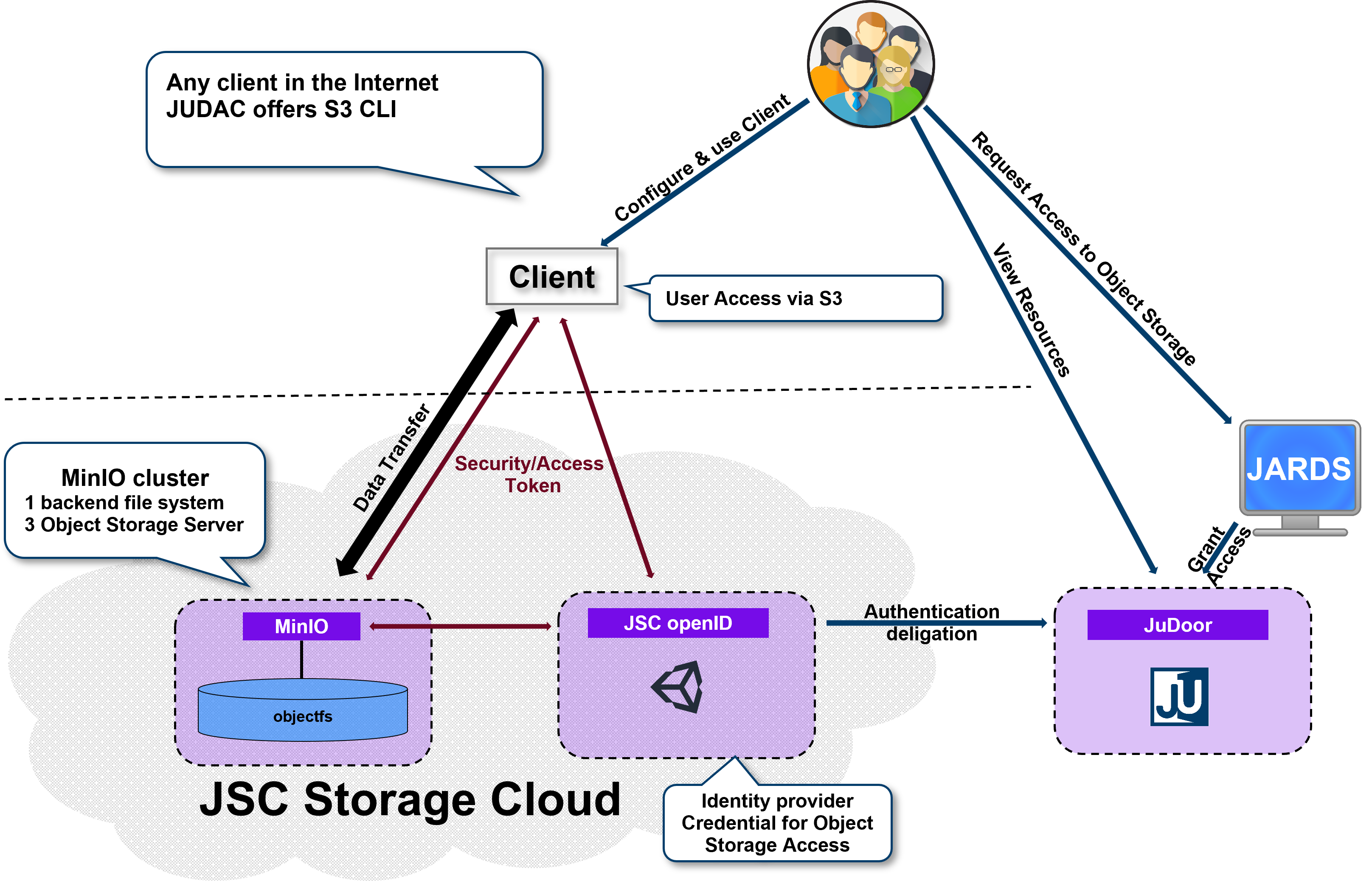

Besides classical POSIX file systems, JUST offers an object storage interface for storing, managing, and accessing large amounts of data via HTTP. Data projects can request access to this resource via the JARDS portal.

The JSC object storage supports only the S3 access protocol.

The access endpoint for client authentication is the MinIO service at just-object.fz-juelich.de:9000. It is connected to a JSC OpenID provider, which delegates authentication to JuDoor.

Important

POSIX file systems and object storage data are stored separately. It is not possible to store data via POSIX and access it via the object storage interface, or vice versa.

Object Store Token Creation

Note

A user must first be granted access to a project's object storage resource before an access token can be created.

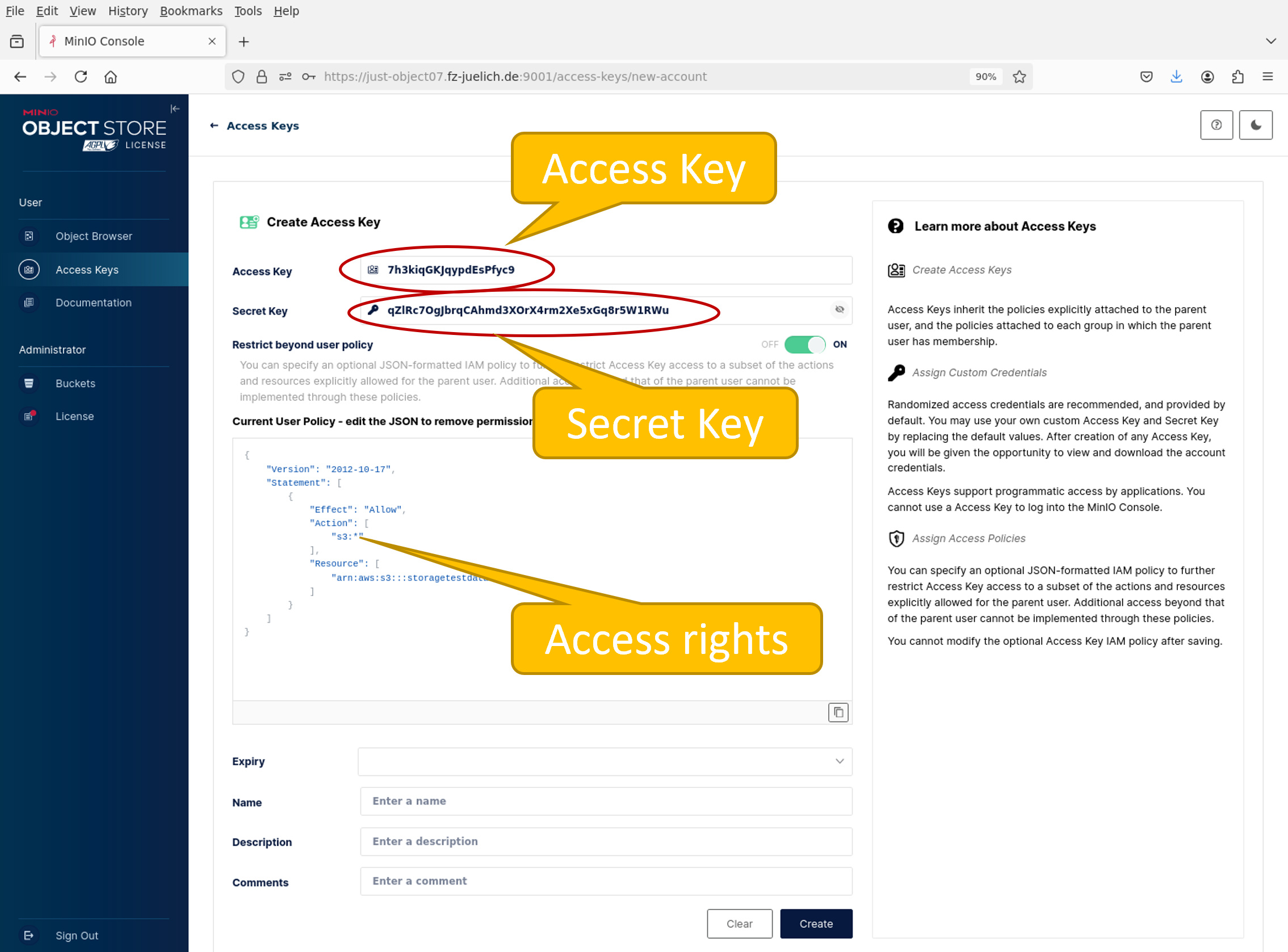

Create an access token on our object store web frontend, JSC Object Store.

Multiple access tokens can be created for different use cases. For more information, see S3 Access Policies.

Important

The secret is only available at creation time. Store it in a safe place.

S3 Client Setup: s3cmd tool

Use the s3cmd command with the following configuration file (~/.s3cfg):

[default]
access_key = <access key>
check_ssl_certificate = True
check_ssl_hostname = True
host_base = just-object.fz-juelich.de:9000
host_bucket = just-object.fz-juelich.de:9000
human_readable_sizes = True
secret_key = <secret key>
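Because the secret is shown only once, it can help to write the configuration file with restrictive permissions right away. The following is a minimal sketch using only the Python standard library. The helper names `render_s3cfg` and `write_private` are hypothetical and not part of s3cmd; the keys and values mirror the configuration above.

```python
import configparser
import io
import os


def render_s3cfg(access_key, secret_key,
                 endpoint="just-object.fz-juelich.de:9000"):
    """Return the text of an s3cmd configuration like the one shown above."""
    # interpolation=None keeps '%' characters in the keys from being interpreted.
    config = configparser.ConfigParser(interpolation=None)
    config["default"] = {
        "access_key": access_key,
        "secret_key": secret_key,
        "host_base": endpoint,
        "host_bucket": endpoint,
        "check_ssl_certificate": "True",
        "check_ssl_hostname": "True",
        "human_readable_sizes": "True",
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


def write_private(path, text):
    """Write text to path and make the file accessible to the owner only."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as handle:
        handle.write(text)
    # The mode passed to os.open only applies to newly created files,
    # so tighten the permissions of a pre-existing file explicitly.
    os.chmod(path, 0o600)
```

A call such as `write_private(os.path.expanduser("~/.s3cfg"), render_s3cfg("<access key>", "<secret key>"))` would then create the file; the placeholders have to be replaced with the values of your own token.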

Note

During the transition from the legacy object store to the new object store, the old configuration can be used in parallel to ensure a seamless migration.

For the legacy object store, use the configuration file ~/.s3cfg.legacy:

[default]
access_key = <access key>
check_ssl_certificate = True
check_ssl_hostname = True
host_base = just-object-legacy.fz-juelich.de:8080
host_bucket = just-object-legacy.fz-juelich.de:8080
human_readable_sizes = True
secret_key = <secret key>
signature_v2 = True

s3cmd Examples

@judac$ s3cmd ls

2025-02-04 08:10 s3://storagetestdata

@judac$ s3cmd put /etc/motd s3://storagetestdata

upload: '/etc/motd' -> 's3://storagetestdata/motd' [1 of 1]

1374 of 1374 100% in 0s 237.99 KB/s done

@judac$ s3cmd ls s3://storagetestdata

2025-02-05 07:33 15M s3://storagetestdata/QuickRef4Rechnerfuehrungen.pdf

2025-03-06 16:06 1374 s3://storagetestdata/motd

@judac$ s3cmd get s3://storagetestdata/QuickRef4Rechnerfuehrungen.pdf

download: 's3://storagetestdata/QuickRef4Rechnerfuehrungen.pdf' -> './QuickRef4Rechnerfuehrungen.pdf' [1 of 1]

16048737 of 16048737 100% in 0s 66.10 MB/s done

@judac$ file ./QuickRef4Rechnerfuehrungen.pdf

./QuickRef4Rechnerfuehrungen.pdf: PDF document, version 1.5

@judac$ s3cmd rm s3://storagetestdata/motd

delete: 's3://storagetestdata/motd'

@judac$ s3cmd ls s3://storagetestdata

2025-02-05 07:33 15M s3://storagetestdata/QuickRef4Rechnerfuehrungen.pdf

s3cmd Examples for legacy Object Store

@judac$ s3cmd ls -c ~/.s3cfg.legacy

2009-02-03 16:45 s3://graf1_nd_test

@judac$ s3cmd ls s3://graf1_nd_test -c ~/.s3cfg.legacy

2022-12-01 09:30 29 s3://graf1_nd_test/neu.txt
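When scripting against both stores during the migration, the only difference on the command line is the `-c` option used in the legacy examples above. The following is a small sketch of a wrapper that builds the s3cmd command line for either store. The function names `s3cmd_argv` and `list_bucket` are hypothetical; the file name ~/.s3cfg.legacy is the one introduced earlier.

```python
import os
import subprocess


def s3cmd_argv(args, legacy=False):
    """Build the s3cmd command line for the new or the legacy object store."""
    argv = ["s3cmd"]
    if legacy:
        # -c selects an alternative configuration file, as in the examples above.
        argv += ["-c", os.path.expanduser("~/.s3cfg.legacy")]
    return argv + list(args)


def list_bucket(bucket, legacy=False):
    """Return the raw text output of `s3cmd ls s3://<bucket>`."""
    result = subprocess.run(
        s3cmd_argv(["ls", f"s3://{bucket}"], legacy=legacy),
        check=True,           # raise CalledProcessError if s3cmd fails
        capture_output=True,
        text=True,
    )
    return result.stdout
```

For example, `list_bucket("graf1_nd_test", legacy=True)` would run the second legacy command shown above and return its output as a string.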

MinIO Client mc

On the first call of the mc command line client, the default configuration is created in ~/.mc/.

Add the JSC Object Store configuration to ~/.mc/config.json:

{
    "version": "10",
    "aliases": {
        "justobject": {
            "url": "https://just-object.fz-juelich.de:9000",
            "accessKey": "<access key>",
            "secretKey": "<secret key>",
            "api": "S3v4",
            "path": "auto"
        },
        ...
    }
}
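If ~/.mc/config.json already contains other aliases, the new entry has to be merged into the file rather than replacing it. The following is a minimal sketch with the Python standard library. `add_alias` is a hypothetical helper, the `local` alias in the example is made up for illustration, and the field names are taken from the configuration above.

```python
import json


def add_alias(config, name, url, access_key, secret_key):
    """Return a copy of an mc configuration dict with one alias added or replaced."""
    aliases = dict(config.get("aliases", {}))
    aliases[name] = {
        "url": url,
        "accessKey": access_key,
        "secretKey": secret_key,
        "api": "S3v4",
        "path": "auto",
    }
    updated = dict(config)
    updated["aliases"] = aliases
    return updated


# Example with an in-memory configuration; the existing alias is preserved.
existing = {"version": "10",
            "aliases": {"local": {"url": "http://localhost:9000"}}}
merged = add_alias(existing, "justobject",
                   "https://just-object.fz-juelich.de:9000",
                   "<access key>", "<secret key>")
print(json.dumps(merged, indent=4))
```

In practice the dictionary would be read from ~/.mc/config.json with `json.load` and written back with `json.dump`; keeping a backup copy of the file first is advisable.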

Note

During the transition from the legacy object store to the new object store, both configurations can be used in parallel to ensure a seamless migration.

{
    "version": "10",
    "aliases": {
        "justobject_legacy": {
            "url": "https://just-object-legacy.fz-juelich.de:8080",
            "accessKey": "<access key>",
            "secretKey": "<secret key>",
            "api": "S3v4",
            "path": "auto"
        },
        ...
    }
}

mc Examples

@judac$ mc ls justobject

[2025-02-04 09:10:57 CET] 0B storagetestdata/

@judac$ mc ls justobject/storagetestdata/

[2025-02-05 08:33:46 CET] 15MiB STANDARD QuickRef4Rechnerfuehrungen.pdf

@judac$ mc put /etc/motd justobject/storagetestdata/

/etc/motd: 1.34 KiB / 1.34 KiB ..... 63.35 KiB/s 0s

@judac$ mc ls justobject/storagetestdata/

[2025-02-05 08:33:46 CET] 15MiB STANDARD QuickRef4Rechnerfuehrungen.pdf

[2025-03-07 15:00:26 CET] 1.3KiB STANDARD motd

@judac$ mc get justobject/storagetestdata/QuickRef4Rechnerfuehrungen.pdf .

...QuickRef4Rechnerfuehrungen.pdf: 15.31 MiB / 15.31 MiB ... 180.73 MiB/s 0s

@judac$ file QuickRef4Rechnerfuehrungen.pdf

QuickRef4Rechnerfuehrungen.pdf: PDF document, version 1.5

Note

SOURCE and TARGET can be different aliases, for example to move an object from the legacy object store to the new one:

@judac$ mc ls justobject/storagetestdata/

[2025-02-05 08:33:46 CET] 15MiB STANDARD QuickRef4Rechnerfuehrungen.pdf

[2025-03-07 15:00:26 CET] 1.3KiB STANDARD motd

@judac$ mc ls justobject_legacy/graf1_nd_test/

[2022-12-01 10:30:52 CET] 29B STANDARD neu.txt

@judac$ mc mv justobject_legacy/graf1_nd_test/neu.txt justobject/storagetestdata/

....de:8080/graf1_nd_test/neu.txt: 29 B / 29 B ... 135 B/s 0s

@judac$ mc ls justobject_legacy/graf1_nd_test/

@judac$ mc ls justobject/storagetestdata/

[2025-02-05 08:33:46 CET] 15MiB STANDARD QuickRef4Rechnerfuehrungen.pdf

[2025-03-07 15:00:26 CET] 1.3KiB STANDARD motd

[2025-03-07 15:07:59 CET] 29B STANDARD neu.txt
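The same move between two stores can be sketched in Python with the boto3 library covered in the section "S3 in Python: boto3 API". This is an illustration under assumptions, not the way mc implements `mv`. The function `move_object` is hypothetical, and both arguments named `*_client` are assumed to be boto3 S3 clients created with the `endpoint_url` of the respective store. The object is held in memory, so the sketch only suits small objects.

```python
def move_object(source_client, source_bucket, target_client, target_bucket, key):
    """Copy one object between two S3 endpoints, then delete the source copy."""
    # Read the whole object from the source store into memory.
    body = source_client.get_object(Bucket=source_bucket, Key=key)["Body"].read()
    # Write it to the target store under the same key.
    target_client.put_object(Bucket=target_bucket, Key=key, Body=body)
    # Remove the source only after the upload returned without raising.
    source_client.delete_object(Bucket=source_bucket, Key=key)
    return len(body)
```

A call such as `move_object(legacy_client, "graf1_nd_test", new_client, "storagetestdata", "neu.txt")` corresponds to the `mc mv` command above. Whether the legacy endpoint accepts the default request signing used by boto3 is not covered here and would need to be checked.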

For more information see: MinIO Client Docs

S3 in Python: boto3 API

Alternatively, you can use the boto3 API to access your data from Python scripts:

import boto3

# boto3.set_stream_logger(name='botocore')  # uncomment to enable debug tracing

# Create an S3 client for the JSC object store endpoint.
session = boto3.session.Session()
s3_client = session.client(
    service_name='s3',
    aws_access_key_id="<ACCESS>",
    aws_secret_access_key="<SECRET>",
    endpoint_url="https://just-object.fz-juelich.de:9000",
)

# List all buckets and the objects stored in each of them.
buckets = s3_client.list_buckets()
print('Existing buckets:')
for bucket in buckets['Buckets']:
    print(f'Bucket: {bucket["Name"]}')
    objects = s3_client.list_objects(Bucket=bucket["Name"])
    # Empty buckets have no "Contents" entry in the response.
    for obj in objects.get("Contents", []):
        print(f'Object: {obj["Key"]}')
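A single S3 listing call returns at most 1000 keys, so the listing above is incomplete for larger buckets. The following sketch uses the boto3 paginator for `list_objects_v2` to walk through all pages. The function name `iter_keys` is hypothetical, and `s3_client` is assumed to be a client created as shown above.

```python
def iter_keys(s3_client, bucket, prefix=""):
    """Yield every object key in a bucket, following pagination."""
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # Pages for empty buckets or prefixes carry no "Contents" entry.
        for entry in page.get("Contents", []):
            yield entry["Key"]
```

For example, `for key in iter_keys(s3_client, "storagetestdata"): print(key)` prints all keys of the bucket used in the examples on this page.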

For more information see: Boto3 Docs