Cube Tools Guide

(CubeLib 4.9.1, revision 76e1f4c2)

Description of Cube Command Line Tools

CUBE provides a set of command line tools for different purposes.

As performance tuning of parallel applications usually involves multiple experiments to compare the effects of certain optimization strategies, CUBE offers a mechanism called performance algebra that can be used to merge, subtract, and average the data from different experiments and view the results in the form of a single "derived" experiment. Using the same representation for derived experiments and original experiments provides access to the derived behavior based on familiar metaphors and tools, in addition to an arbitrary and easy composition of operations. The algebra is an ideal tool to verify and locate performance improvements and degradations alike. The algebra includes three operators (diff, merge, and mean) provided as command-line utilities which take two or more CUBE files as input and generate another CUBE file as output. The operations are closed in the sense that the operators can be applied to the results of previous operations. Note that although all operators are defined for any valid CUBE data sets, not all possible operations actually make sense. For example, whereas it can be very helpful to compare two versions of the same code, computing the difference between entirely different programs is unlikely to yield any useful results.

Changing a program can alter its performance behavior. Altering the performance behavior means that different results are achieved for different metrics. Some might increase while others might decrease. Some might rise in certain parts of the program only, while they drop off in other parts. Finding the reason for a gain or loss in overall performance often requires considering the performance change as a multidimensional structure. With CUBE's difference operator, a user can view this structure by computing the difference between two experiments and rendering the derived result experiment like an original one. The difference operator takes two experiments and computes a derived experiment whose severity function reflects the difference between the minuend's severity and the subtrahend's severity. Make sure that the two .cube files used for the difference operation have system trees of the same size and structure. Mismatched system hierarchies may lead to an incompatible system tree.
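As a rough illustration of what the difference operator computes, the following Python sketch treats a severity function as a mapping from (metric, call path, location) tuples to values. The dictionary representation and the name diff_severity are assumptions made for this example; they are not part of CubeLib, and the handling of missing entries is a guess rather than documented cube_diff behavior.

```python
def diff_severity(minuend, subtrahend):
    """Return minuend - subtrahend over the union of all keys.

    Keys missing from one experiment are treated as 0.0. This is an
    assumption of the sketch, not necessarily what cube_diff does.
    """
    keys = set(minuend) | set(subtrahend)
    return {k: minuend.get(k, 0.0) - subtrahend.get(k, 0.0) for k in keys}


# Hypothetical severities of two experiments: (metric, call path, location) -> value
before = {("time", "main/solve", "rank0"): 12.0, ("time", "main/io", "rank0"): 3.0}
after = {("time", "main/solve", "rank0"): 9.5, ("time", "main/io", "rank0"): 3.5}

result = diff_severity(before, after)
for key in sorted(result):
    print(key, result[key])
```

A positive entry means the minuend spent more in that call path than the subtrahend; a negative entry means the opposite.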

The possible output is presented below.

user@host: cube_diff scout.cube remapped.cube -o result.cube

Reading scout.cube ... done.

Reading remapped.cube ... done.

++++++++++++ Diff operation begins ++++++++++++++++++++++++++

INFO::Merging metric dimension... done.

INFO::Merging program dimension... done.

INFO::Merging system dimension... done.

INFO::Mapping severities... done.

INFO::Adding topologies...

Topology retained in experiment.

done.

INFO::Diff operation... done.

++++++++++++ Diff operation ends successfully ++++++++++++++++

Writing result.cube ... done.

Reduce system dimension, if experiments are incompatible.

user@host: cube_diff scout.cubex loop1.cubex -o result.cube

Reading scout.cubex ... done.

Reading loop1.cubex ... done.

++++++++++++ Diff operation begins ++++++++++++++++++++++++++

INFO::Merging metric dimension... done.

INFO::Merging program dimension... done.

INFO::Merging system dimension...Runtime Error: System tree seems to be incompatible to be unified in one common system tree. You may want to collapse or reduce the system trees.

In the above example, the system hierarchies are mismatched. In this case, running cube_diff with '-c' reduces the system dimension of the given .cube files, and running it with '-C' collapses the system dimension.

user@host: cube_diff -c scout.cubex loop1.cubex -o result.cube

Reading scout.cubex ... done.

Reading loop1.cubex ... done.

++++++++++++ Diff operation begins ++++++++++++++++++++++++++

INFO::Merging metric dimension... done.

INFO::Merging program dimension... done.

INFO::Merging system dimension... done.

INFO::Mapping severities... done.

INFO::Merging topologies... done.

INFO::Diff operation... done.

++++++++++++ Diff operation ends successfully ++++++++++++++++

Writing result.cube done.

user@host: cube_diff -C scout.cubex loop1.cubex -o result.cube

Reading scout.cubex ... done.

Reading loop1.cubex ... done.

++++++++++++ Diff operation begins ++++++++++++++++++++++++++

INFO::Merging metric dimension... done.

INFO::Merging program dimension... done.

INFO::Merging system dimension... done.

INFO::Mapping severities... done.

INFO::Merging topologies... done.

INFO::Diff operation... done.

++++++++++++ Diff operation ends successfully ++++++++++++++++

Writing result.cube done.

user@host: cube_diff -h

Usage: cube_diff [-o output] [-c|-C] [-h] <minuend> <subtrahend>

-o Name of the output file (default: diff)

-c Reduce system dimension, if experiments are incompatible.

-C Collapse system dimension! Overrides option -c.

-h Help; Output a brief help message.

Report bugs to <scalasca@fz-juelich.de>

The merge operator's purpose is the integration of performance data from different sources. Often a certain combination of performance metrics cannot be measured during a single run. For example, certain combinations of hardware events cannot be counted simultaneously due to hardware resource limits. Or the combination of performance metrics requires using different monitoring tools that cannot be deployed during the same run. The merge operator takes an arbitrary number of CUBE experiments with a different or overlapping set of metrics and yields a derived CUBE experiment with a joint set of metrics.
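The joining of metric sets can be pictured with a small Python sketch. Experiments are modelled here as nested dictionaries of the form metric name -> call path -> value; this layout, the name merge_metrics, and the rule that the first occurrence of an overlapping metric wins are all assumptions of the example and do not describe the internals of cube_merge.

```python
def merge_metrics(*experiments):
    """Join experiments given as {metric_name: {call_path: value}}.

    When a metric appears in several inputs, the first occurrence is
    kept. This precedence rule is an assumption of the sketch.
    """
    merged = {}
    for exp in experiments:
        for metric, values in exp.items():
            merged.setdefault(metric, dict(values))
    return merged


# Two hypothetical runs that could not count both hardware events at once
run_a = {"time": {"main": 10.0}, "PAPI_TOT_INS": {"main": 4.0e9}}
run_b = {"time": {"main": 10.2}, "PAPI_TOT_CYC": {"main": 6.0e9}}

merged = merge_metrics(run_a, run_b)
print(sorted(merged))
```

The derived experiment then carries both hardware counters next to the time metric, which is what makes metrics such as IPC computable afterwards.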

The possible output is presented below.

user@host: cube_merge scout.cube remapped.cube -o result.cube

++++++++++++ Merge operation begins ++++++++++++++++++++++++++

Reading scout.cube ... done.

Reading remapped.cube ... done.

INFO::Merging metric dimension... done.

INFO::Merging program dimension... done.

INFO::Merging system dimension... done.

INFO::Mapping severities... done.

INFO::Merge operation...

Topology retained in experiment.

Topology retained in experiment.

done.

++++++++++++ Merge operation ends successfully ++++++++++++++++

Writing result.cube ... done.

The mean operator is intended to smooth the effects of random errors introduced by unrelated system activity during an experiment or to summarize across a range of execution parameters. You can conduct several experiments and create a single average experiment from the whole series. The mean operator takes an arbitrary number of arguments.
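A minimal Python sketch of the averaging idea follows. It assumes each experiment is a flat mapping from call path to severity value and that a call path missing from a run contributes 0.0; both choices are illustrative assumptions, not a description of how cube_mean treats structural differences.

```python
def mean_severity(experiments):
    """Average a list of {call_path: value} mappings key by key.

    A call path missing from a run counts as 0.0 (an assumption of
    this sketch).
    """
    keys = set().union(*experiments)
    n = len(experiments)
    return {k: sum(e.get(k, 0.0) for e in experiments) / n for k in keys}


# Four hypothetical repetitions of the same measurement
runs = [
    {"main/solve": 10.0, "main/io": 2.0},
    {"main/solve": 12.0, "main/io": 4.0},
    {"main/solve": 11.0, "main/io": 3.0},
    {"main/solve": 9.0, "main/io": 3.0},
]

average = mean_severity(runs)
print(average)
```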

The possible output is presented below.

user@host: cube_mean scout1.cube scout2.cube scout3.cube scout4.cube -o mean.cube

++++++++++++ Mean operation begins ++++++++++++++++++++++++++

Reading scout1.cube ... done.

INFO::Merging metric dimension... done.

INFO::Merging program dimension... done.

INFO::Merging system dimension... done.

INFO::Mapping severities... done.

INFO::Adding topologies... done.

INFO::Mean operation... done.

Reading scout2.cube ... done.

INFO::Merging metric dimension... done.

INFO::Merging program dimension... done.

INFO::Merging system dimension... done.

INFO::Mapping severities... done.

INFO::Adding topologies... done.

INFO::Mean operation... done.

Reading scout3.cube ... done.

INFO::Merging metric dimension... done.

INFO::Merging program dimension... done.

INFO::Merging system dimension... done.

INFO::Mapping severities... done.

INFO::Adding topologies... done.

INFO::Mean operation... done.

Reading scout4.cube ... done.

INFO::Merging metric dimension... done.

INFO::Merging program dimension... done.

INFO::Merging system dimension... done.

INFO::Mapping severities... done.

INFO::Adding topologies... done.

INFO::Mean operation... done.

++++++++++++ Mean operation ends successfully ++++++++++++++++

Writing mean.cube ... done.

cube_cmp compares two experiments and reports whether they are equal. Two experiments are equal if they have the same dimension hierarchies and equal severity values.

An example of the output is below.

user@host: cube_cmp heatmap.cubex heatmap2.cubex

Reading heatmap.cubex ... done.

Reading heatmap2.cubex ... done.

++++++++++++ Compare operation begins ++++++++++++++++++++++++++

Compare metric dimensions...equal.

Compare calltree dimensions.equal.

Compare system dimensions...equal.

Compare data...equal.

Experiments are equal.

+++++++++++++ Compare operation ends successfully ++++++++++++++++

user@host: cube_cmp remapped.cube scout1.cube

Reading remapped.cube ... done.

Reading scout1.cube ... done.

++++++++++++ Compare operation begins ++++++++++++++++++++++++++

Experiments are not equal.

+++++++++++++ Compare operation ends successfully ++++++++++++++++

CUBE provides a command line tool cube_pop_metrics to calculate various POP metrics. Attempting to optimize the performance of a parallel code can be a daunting task, and often it is difficult to know where to start. For example, we might ask whether the way computational work is divided is a problem, whether the chosen communication scheme is inefficient, or whether something else impacts performance. To help address this issue, POP has defined a methodology for the analysis of parallel codes that provides a quantitative way of measuring the relative impact of the different factors inherent in parallelization. This article introduces these metrics, explains their meaning, and provides insight into the thinking behind them.

Various POP models are supported:

The possible output is presented below.

user@host: cube_pop_metrics -a mpi example2D.cube.gz

Reading example2D.cube.gz ... done.

Calculating

.................

-------------- Result --------------

example2D.cube.gz -> Profile 0

Only-MPI Assessment

------------------------------------

Calculate for "driver[id=0]"

------------------------------------

POP Metric Profile 0

------------------------------------

Parallel Efficiency 0.715238

* Load Balance Efficiency 0.960990

* Communication Efficiency 0.744273

* * Serialisation Efficiency 0.815051

* * Transfer Efficiency 0.913160

------------------------------------

GPU Metric

------------------------------------

GPU Parallel Efficiency nan

* GPU Load Balance Efficiency nan

* GPU Communication Efficienc nan

------------------------------------

IO Metric

------------------------------------

I/O Efficiency nan

* Posix I/O time 0.000000

* MPI I/O time nan

------------------------------------

Additional Metrics

------------------------------------

Resource stall cycles nan

IPC nan

Instructions (only computation nan

Computation time 5336437.624951

GPU Computation time nan

------------------------------------

FOA Quality control Metrics

------------------------------------

Wall-clock time; min 454.985888, 0.587015%

avg 457.672492

max 482.150315, 5.348327%

Help; Output a long detailed help message.

A feature of the methodology is that it uses a hierarchy of metrics (the Only-MPI Assessment), each metric reflecting a common cause of inefficiency in parallel programs. These metrics then allow a comparison of the parallel performance (e.g. over a range of thread/process counts, across different machines, or at different stages of optimization and tuning) to identify which characteristics of the code contribute to the inefficiency.

The first step in calculating these metrics is to use a suitable tool (e.g. Score-P or Extrae) to generate trace data while the code is executed. The traces contain information about the state of the code at a particular time, e.g. whether it is in a communication routine or doing useful computation, and also contain values from processor hardware counters, e.g. number of instructions executed, number of cycles.

The Only-MPI Assessment metrics are then calculated as efficiencies between 0 and 1, with higher numbers being better. In general, we regard efficiencies above 0.8 as acceptable, whereas lower values indicate performance issues that need to be explored in detail. The ultimate goal of POP is then for the user to rectify these underlying issues. Please note that the Only-MPI Assessment metrics can be computed only for inclusive call paths, as they are less meaningful for exclusive call paths. Furthermore, they are not available in "Flat view" mode.

The approach outlined here is applicable to various parallelism paradigms; however, for simplicity the Only-MPI Assessment metrics presented here are formulated in terms of a distributed-memory message-passing environment, e.g., MPI. For this, the following values are calculated for each process from the trace data: time doing useful computation, time in communication, and number of instructions and cycles during useful computation. Useful computation excludes time within the overhead of parallel paradigms (Computation time).

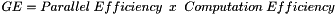

At the top of the hierarchy is Global Efficiency (GE), which we use to judge overall quality of parallelization. Typically, inefficiencies in parallel code have two main sources:

and to reflect this we define two sub-metrics to measure these two inefficiencies. These are the Parallel Efficiency and the Computation Efficiency, and our top-level GE metric is the product of these two sub-metrics:

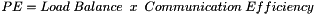

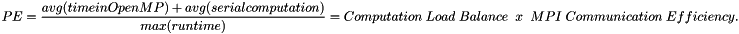

Parallel Efficiency (PE) reveals the inefficiency in splitting computation over processes and then communicating data between processes. As with GE, PE is a compound metric whose components reflect two important factors in achieving good parallel performance in code:

These are measured with Load Balance Efficiency and Communication Efficiency, and PE is defined as the product of these two sub-metrics:

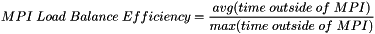

Load Balance Efficiency can be computed as follows:

Thus it shows how large the difference between the average and the maximal computation time is.

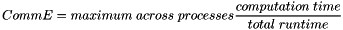

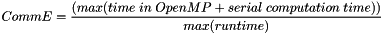

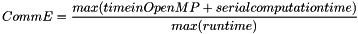

Communication Efficiency (CommE) is the maximum across all processes of the ratio between useful computation time and total run-time:

CommE identifies when code is inefficient because it spends a large amount of time communicating rather than performing useful computations.
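The following Python sketch illustrates how Load Balance Efficiency, Communication Efficiency, and their product could be evaluated from per-process timings. The Load Balance formula used here (average over maximum of useful computation time) is the usual POP definition and is an assumption in this context, since the formula images of this guide are not reproduced; the function names and the sample timings are hypothetical.

```python
def load_balance_efficiency(comp_times):
    """Average useful computation time divided by the maximum (assumed POP definition)."""
    return (sum(comp_times) / len(comp_times)) / max(comp_times)


def communication_efficiency(comp_times, total_runtime):
    """Maximum across processes of useful computation time over total run-time."""
    return max(c / total_runtime for c in comp_times)


# Hypothetical useful computation time per process (seconds) and total run-time
comp_times = [8.0, 6.0, 7.0, 7.0]
total_runtime = 10.0

lb = load_balance_efficiency(comp_times)                      # average 7.0 over maximum 8.0
comm_e = communication_efficiency(comp_times, total_runtime)  # 8.0 over 10.0
pe = lb * comm_e                                              # Parallel Efficiency as the product

print(f"LB={lb:.3f} CommE={comm_e:.3f} PE={pe:.3f}")
```

With a common total run-time, the product reduces to the average useful computation time divided by the total run-time, which is why PE can also be computed directly.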

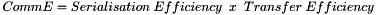

CommE is composed of two additional metrics that reflect two causes of excessive time within communication:

These are measured using Serialisation Efficiency and Transfer Efficiency.

Combination of these two sub-metrics gives us Communication Efficiency:

To obtain these two sub-metrics we need to perform a Scalasca trace analysis, which identifies serialisations and inefficient communication patterns.
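The hierarchy can be checked against the cube_pop_metrics sample output shown earlier in this section. The short Python sketch below assumes that the "combination" of sub-metrics is a product, which is what the printed values suggest; the variable names are chosen for this example only.

```python
# Values copied from the cube_pop_metrics sample output in this guide
load_balance = 0.960990
serialisation = 0.815051
transfer = 0.913160

# Assumed composition: each parent metric is the product of its children
communication = serialisation * transfer
parallel = load_balance * communication

print(f"Communication Efficiency ~ {communication:.6f}")
print(f"Parallel Efficiency      ~ {parallel:.6f}")
```

Up to rounding of the printed inputs, the results agree with the reported Communication Efficiency of 0.744273 and Parallel Efficiency of 0.715238.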

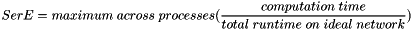

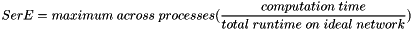

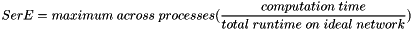

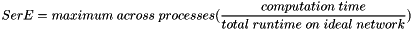

Serialisation Efficiency (SerE) measures inefficiency due to idle time within communications, i.e. time where no data is transferred, and is expressed as:

where the total run-time on an ideal network is the run-time without the waiting time detected by Scalasca and without the MPI I/O time.

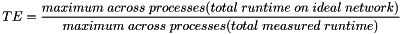

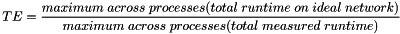

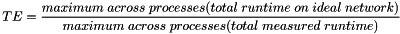

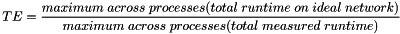

Transfer Efficiency (TE) measures inefficiencies due to time spent in data transfers:

where the total run-time on an ideal network is the run-time without the waiting time detected by Scalasca and without the MPI I/O time.
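For orientation, the two sub-metrics are commonly written as follows in the POP methodology. This is a sketch under the assumption that the formulas in this guide match the usual POP definitions; the symbols are introduced here for illustration, with T^comp_p the useful computation time of process p, T^ideal the total run-time on an ideal network, and T^total the measured total run-time.

```latex
\mathrm{SerE} = \max_{p}\left(\frac{T^{\mathrm{comp}}_{p}}{T^{\mathrm{ideal}}}\right),
\qquad
\mathrm{TE} = \frac{T^{\mathrm{ideal}}}{T^{\mathrm{total}}},
\qquad
\mathrm{CommE} = \mathrm{SerE}\cdot\mathrm{TE}
      = \max_{p}\left(\frac{T^{\mathrm{comp}}_{p}}{T^{\mathrm{total}}}\right)
```

In this form the ideal-network run-time cancels in the product, so the result agrees with the definition of Communication Efficiency as the maximum ratio of useful computation time to total run-time.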

This is one approach to extend POP metrics (see: Only MPI analysis) for hybrid (MPI+OpenMP) applications. In this approach Parallel Efficiency is split into two components:

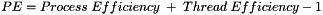

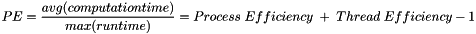

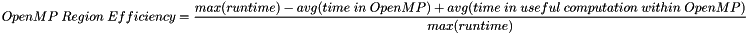

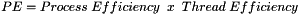

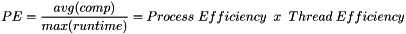

Parallel Efficiency (PE) reveals the inefficiency in process and thread utilization. These are measured with Process Efficiency and Thread Efficiency, and PE can be computed directly or as the sum of these two sub-metrics minus one:

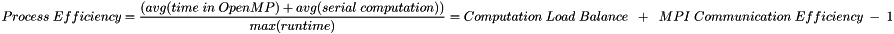

Process Efficiency completely ignores thread behavior, and evaluates process utilization via two components:

These two can be measured with Computation Load Balance and Communication Efficiency respectively. Process Efficiency can be computed directly or as a sum of these two sub-metrics minus one:

Here, the average time in OpenMP and the average serial computation are computed as weighted arithmetic means. If the number of threads is equal across processes, both can be computed as ordinary arithmetic means.

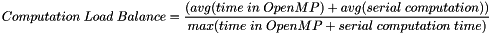

Computation Load Balance can be evaluated directly by the following formula:

Here, the average time in OpenMP and the average serial computation are computed as weighted arithmetic means. If the number of threads is equal across processes, both can be computed as ordinary arithmetic means.

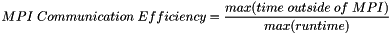

MPI Communication Efficiency (CommE) can be evaluated directly by the following formula:

CommE identifies when code is inefficient because it spends a large amount of time communicating rather than performing useful computations. CommE is composed of two additional metrics that reflect two causes of excessive time within communication:

These are measured using Serialisation Efficiency and Transfer Efficiency. The combination of these two sub-metrics gives us Communication Efficiency:

To obtain these two sub-metrics we need to perform a Scalasca trace analysis, which identifies serialisations and inefficient communication patterns.

Serialisation Efficiency (SerE) measures inefficiency due to idle time within communications, i.e. time where no data is transferred, and is expressed as:

where the total run-time on an ideal network is the run-time without the waiting time detected by Scalasca and without the MPI I/O time.

Transfer Efficiency (TE) measures inefficiencies due to time spent in data transfers:

where the total run-time on an ideal network is the run-time without the waiting time detected by Scalasca and without the MPI I/O time.

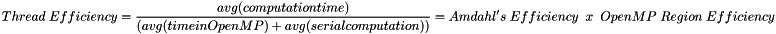

Thread Efficiency considers two sources of inefficiency:

These two can be measured with Amdahl's Efficeincy and OpenMP region Efficiency respectively. Thread Efficeincy can be computed directly or as a sum of these two sub-metrics minus one:

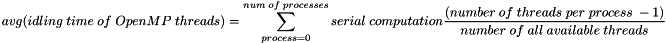

Where average idling time of OpenMP threads considers that threads are idling if only master thread is working and can be computed by following formula

Moreover, average time in OpenMP computed as weighted arithmetic mean. If number of threads is equal across processes average time in OpenMP can be computed as ordinary arithmetic mean.

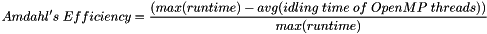

Amdahl's Efficiency indicates serial computation and can be computed as follows:

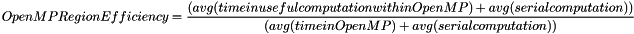

OpenMP Region Efficiency indicates inefficiencies within threads, and can be computed as follows:

Here, the average time in OpenMP is computed as a weighted arithmetic mean. If the number of threads is equal across processes, the average time in OpenMP can be computed as an ordinary arithmetic mean.

This is one approach to extend POP metrics (see: Only MPI analysis) for hybrid (MPI+OpenMP) applications. In this approach Parallel Efficiency is split into two components:

Parallel Efficiency (PE) reveals the inefficiency in process and thread utilization. These are measured with Process Efficiency and Thread Efficiency, and PE can be computed directly or as the product of these two sub-metrics:
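The hybrid approaches in this guide differ in how sub-metrics are combined: one uses the sum minus one, the other the product. The Python sketch below contrasts the two rules for the same pair of input values; the function names and the sample efficiencies are hypothetical and serve only to illustrate the arithmetic.

```python
def combine_additive(a, b):
    """Additive composition: sum of the two sub-metrics minus one."""
    return a + b - 1.0


def combine_multiplicative(a, b):
    """Multiplicative composition: product of the two sub-metrics."""
    return a * b


# Hypothetical Process Efficiency and Thread Efficiency
process_eff, thread_eff = 0.9, 0.8

pe_additive = combine_additive(process_eff, thread_eff)
pe_multiplicative = combine_multiplicative(process_eff, thread_eff)

print(f"additive: {pe_additive:.2f}, multiplicative: {pe_multiplicative:.2f}")
```

The two results differ by (1 - a)(1 - b), so they are close when both sub-metrics are near one and drift apart as the efficiencies drop.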

Process Efficiency completely ignores thread behavior, and evaluates process utilization via two components:

These two can be measured with Computation Load Balance and Communication Efficiency respectively. Process Efficiency can be computed directly or as a product of these two sub-metrics:

Here, the average time in OpenMP and the average serial computation are computed as weighted arithmetic means. If the number of threads is equal across processes, both can be computed as ordinary arithmetic means.

Computation Load Balance can be evaluated directly by the following formula:

Here, the average time in OpenMP and the average serial computation are computed as weighted arithmetic means. If the number of threads is equal across processes, both can be computed as ordinary arithmetic means.

MPI Communication Efficiency (CommE) can be evaluated directly by the following formula:

CommE identifies when code is inefficient because it spends a large amount of time communicating rather than performing useful computations. CommE is composed of two additional metrics that reflect two causes of excessive time within communication:

These are measured using Serialisation Efficiency and Transfer Efficiency. The combination of these two sub-metrics gives us Communication Efficiency:

To obtain these two sub-metrics we need to perform a Scalasca trace analysis, which identifies serialisations and inefficient communication patterns.

Serialisation Efficiency (SerE) measures inefficiency due to idle time within communications, i.e. time where no data is transferred, and is expressed as:

where the total run-time on an ideal network is the run-time without the waiting time detected by Scalasca and without the MPI I/O time.

Transfer Efficiency (TE) measures inefficiencies due to time spent in data transfers:

where the total run-time on an ideal network is the run-time without the waiting time detected by Scalasca and without the MPI I/O time.

Thread Efficiency considers two sources of inefficiency:

These two can be measured with Amdahl's Efficiency and OpenMP Region Efficiency respectively. Thread Efficiency can be computed directly or as the product of these two sub-metrics:

Here, the average time in OpenMP and the average serial computation are computed as weighted arithmetic means. If the number of threads is equal across processes, both can be computed as ordinary arithmetic means.

OpenMP Region Efficiency indicates inefficiencies within threads, and can be computed as follows:

Here, the average time in OpenMP and the average serial computation are computed as weighted arithmetic means. If the number of threads is equal across processes, both can be computed as ordinary arithmetic means.

This is one approach to extend POP metrics (see: Only MPI analysis) for hybrid (MPI+OpenMP) applications. In this approach Parallel Efficiency is split into two components:

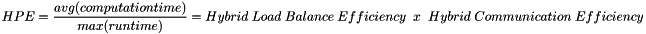

Hybrid Parallel Efficiency (HPE) reveals the inefficiency in processes and threads utilization. These are measured with Hybrid Load Balance Efficiency and Hybrid Communication Efficiency, and HPE can be computed directly or as a product of these two sub-metrics:

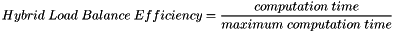

Hybrid Load Balance Efficiency can be computed as follows:

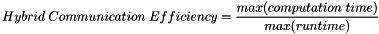

Hybrid Communication Efficiency can be evaluated directly by the following formula:

This metric identifies when code is inefficient because it spends a large amount of time communicating rather than performing useful computations.

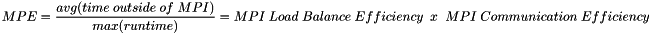

MPI Parallel Efficiency (MPE) reveals the inefficiency in MPI processes. MPE can be computed directly or as the product of MPI Load Balance Efficiency and MPI Communication Efficiency:

MPI Load Balance Efficiency can be computed as follows:

MPI Communication Efficiency can be evaluated directly by following formula:

This metric identifies when code is inefficient because it spends a large amount of time communicating rather than performing useful computations.

Serialisation Efficiency (SerE) measures inefficiency due to idle time within communications, i.e. time where no data is transferred, and is expressed as:

where the total run-time on an ideal network is the run-time without the waiting time detected by Scalasca and without the MPI I/O time.

Transfer Efficiency (TE) measures inefficiencies due to time spent in data transfers:

where the total run-time on an ideal network is the run-time without the waiting time detected by Scalasca and without the MPI I/O time.

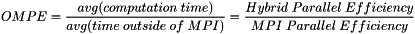

OpenMP Parallel Efficiency (OMPE) reveals the inefficiency in OpenMP threads. OMPE can be computed directly or by dividing Hybrid Parallel Efficiency by MPI Parallel Efficiency:
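The division can be sketched in a few lines of Python. The function name is hypothetical, and returning nan when an input is unavailable or the divisor is zero is an assumption modelled on the nan entries in the cube_pop_metrics sample output, not documented behavior of the tool.

```python
import math


def openmp_parallel_efficiency(hybrid_pe, mpi_pe):
    """OMPE as Hybrid Parallel Efficiency divided by MPI Parallel Efficiency.

    Returns nan if an input is nan or the divisor is zero (assumed convention).
    """
    if math.isnan(hybrid_pe) or math.isnan(mpi_pe) or mpi_pe == 0:
        return math.nan
    return hybrid_pe / mpi_pe


# Hypothetical values: HPE = 0.60, MPE = 0.75
ompe = openmp_parallel_efficiency(0.60, 0.75)
print(f"OMPE = {ompe:.2f}")
```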

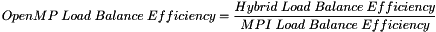

OpenMP Load Balance Efficiency can be computed as follows:

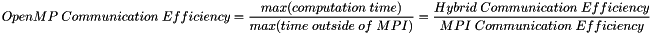

OpenMP Communication Efficiency can be evaluated directly by following formula:

The cube_pop_metrics tool provides a series of additional metrics, which help to draw better conclusions about the performance of the code.

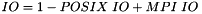

I/O Efficiency is an additional metric indicating the amount of time spent not in useful computation but in the processing of I/O operations. I/O Efficiency can be split into two components:

In this analysis I/O Efficiency can be computed as the sum of these two sub-metrics minus one:

where 1 would indicate the absence of any I/O operation (the perfect usage of I/O is no I/O).
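A small Python sketch of this composition follows. It assumes that each sub-metric equals one when no time is spent in the corresponding kind of I/O, which is what the "sum minus one" rule implies for a result of 1 without any I/O; the function name and the sample values are hypothetical.

```python
def io_efficiency(posix_io_eff, mpi_io_eff):
    """I/O Efficiency as the sum of the two sub-metrics minus one."""
    return posix_io_eff + mpi_io_eff - 1.0


# No I/O at all: both sub-metrics are 1 (assumed convention)
no_io = io_efficiency(1.0, 1.0)

# Hypothetical run with some POSIX and some MPI I/O
some_io = io_efficiency(0.95, 0.90)

print(f"no I/O: {no_io:.2f}, some I/O: {some_io:.2f}")
```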

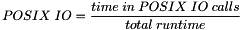

POSIX IO Efficiency shows the fraction of execution time spent in POSIX IO calls. In this analysis POSIX IO is computed as:

MPI IO Efficiency shows the fraction of execution time spent in MPI IO calls. In this analysis MPI IO is computed as:

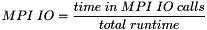

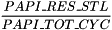

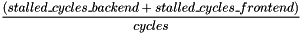

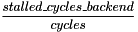

Stalled resources indicates the fraction of computational cycles in user code during which the processor has been waiting for some resources. If PAPI counters are available, use

. Otherwise, if Perf counters are available, use one of

depending on what is available.

IPC Efficiency compares IPC to the reference, where lower values indicate that the rate of computation has slowed. Typical causes for this include a decreasing cache hit rate and exhaustion of memory bandwidth; these can leave processes stalled and waiting for data.

Instruction Efficiency is the ratio of total number of useful instructions for a reference case (e.g. 1 processor) compared to values when increasing the numbers of processes. A decrease in Instruction Efficiency corresponds to an increase in the total number of instructions required to solve a computational problem.

Computation time shows the time spent in the part of the code identified as useful computation. Computation time is defined by exclusion: it is the part of the execution time spent NOT in MPI, NOT in OpenMP, NOT in IO, NOT in SHMEM, NOT in service libraries instrumented using library wrapping, NOT in CUDA, NOT in OpenCL, NOT in OpenACC, and so on.

GPU Computation time shows the time spent in the part of the code, identified as a useful computation and executed on GPU.

The WallClock time metric is used to control the quality of the measurement. It displays the minimum, average, and maximum wall-clock time across the system tree.

By inspecting this metric, the user can estimate whether the measurement was made properly and is suitable for the POP analysis. If the measurement is very skewed (outliers, too wide a range in the times from CPU to CPU, and similar), the measurement has most likely been made erroneously and is not suitable for the analysis. In this case, it is advisable to redo the measurement.
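The percentages printed next to min and max in the cube_pop_metrics sample output appear to be relative deviations from the average wall-clock time. The Python sketch below reproduces them under that assumption; the function name deviation_pct is hypothetical, and this guide does not specify a threshold above which a measurement counts as skewed.

```python
def deviation_pct(value, average):
    """Relative deviation of a value from the average, in percent."""
    return 100.0 * abs(value - average) / average


# Wall-clock times copied from the sample output in this guide
t_min, t_avg, t_max = 454.985888, 457.672492, 482.150315

min_dev = deviation_pct(t_min, t_avg)
max_dev = deviation_pct(t_max, t_avg)

print(f"min deviates by {min_dev:.4f}%, max deviates by {max_dev:.4f}%")
```

A maximum that lies far above the average, as opposed to a minimum that lies close to it, points to a few slow locations rather than a uniformly noisy measurement.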

CUBE provides a universal, flexible tool that transforms the metric tree of an input .cubex file into an arbitrary metric hierarchy according to user-defined rules. This can be used by performance analysis tools such as Score-P or Scalasca. In particular, one can classify different call paths according to user-defined rules.

One can also use this tool to convert derived metrics into data (if possible) or to add an additional metric to the current .cubex file.

During this processing step the remapper also creates topologies that can be constructed from system tree information. For this the remapper uses reasonable default settings; for more manual control over topologies, the Topology Assistant tool can be used.

The main idea behind the remapping tool is simple: the user formulates the target hierarchy of the metrics in an external file (.spec) and defines rules for how they are calculated using CubePL syntax. The remapping tool then creates an output cube with this metric hierarchy.

In particular, the remapping tool performs the following steps:

If -c is specified, the remapping tool works in "Copy" mode: it creates an output cube with an identical metric hierarchy. One usually combines it with the options -d and -s. Otherwise...

If -r is omitted, the remapping tool looks for the .spec file within the input .cubex file. Score-P starting with version v5 stores its .spec file within profile.cubex. Otherwise...

If -r is specified, the remapping tool looks for the .spec file and creates the new (working) cube object with the metric hierarchy specified in the .spec file as its metric dimension.

If -s is specified, the tool adds the additional SCALASCA metrics "Idle threads" and "Limited parallelism".

If -d is omitted, the remapping tool saves the created cube on disk as it is. All derived metrics in the .spec file stay derived metrics and are evaluated only while working with the stored .cubex file in the GUI or with command-line tools.

If -d is specified, the second phase of the remapping is executed: calculation of derived metrics and converting them into data. Note that not all metrics (such as rates or similar) are convertible into data; these should stay derived in the target file. To do so, the remapping tool creates an additional cube object with the identical structure of the intermediate working cube (only convertible derived metrics are turned into regular data metrics) and copies its data metric by metric. As a result, derived metrics are calculated and their values are stored as data in the target cube.

As one can see, the user can use the remapping tool for different purposes:

./cube_remap2 -d <input>

Examples of .spec files are shipped with the CUBE package and stored in the directory [prefix]/share/doc/cubelib/examples

Here we provide some commented examples of .spec files.

In the first case we add an additional metric IPC to the cube file

Here the whole hierarchy consists of only one metric, IPC, which is "non convertible" into data as it is a POSTDERIVED metric and the usual aggregation rules are not applicable. This metric has the data type FLOAT and requires that the input file contains the metrics PAPI_TOT_CYC and PAPI_TOT_INS. Otherwise this metric will always evaluate to 0.

In the second case we add two sub-metrics to the metric "Time" in order to classify time spent in "MPI" regions and "Other" regions

Here the target hierarchy consists of the top metric "Time" and two sub-metrics, "MPI regions" and "Other regions". Clearly, both sum up to the total "Time" metric. Hence one expects that the expanded metric "Time" is always zero. Note that the sub-metrics are defined as PREDERIVED_EXCLUSIVE, as their value is valid only for the one call path and not for its children.

As the remapping process is defined in a very general way, a naive approach to formulating the .spec file might take significant calculation time. Using advanced techniques in CubePL one can optimize the remapping process. For example, one can:

Great examples of the use of these optimization techniques are the .spec files provided with Score-P or Scalasca.

Internal computation is performed asynchronously via a tasking paradigm. To control the level of concurrency one can set the environment variable CUBE_MAX_CONCURRENCY. Possible values are

A value larger than the number of cores will lead to oversubscription of the CPUs and might degrade performance.

Besides the remapping utility and the algebra tools, CUBE provides various tools for different testing, checking, and inspection tasks.

This tool starts a server and provides cube reports "as they are" to clients without the need to copy them to the client machine. The cube_server also performs all required calculations, so one uses the computational power of the HPC system and not that of the client system (which might be insufficient).

user@host: cube_server

[12044] Cube Server(<name>): CubeLib-4.7.0 (e15c4008) [POSIX]

Many hosts don't allow ports to be accessed from the outside. You may use SSH tunneling (also referred to as SSH port forwarding) to route the local network traffic through SSH to the remote host.

In the following example, cube_server is started with the default port 3300 on the remote server server.example.com. Traffic sent to localhost:3300 is forwarded to server.example.com on the same port.

If <name> is omitted, the name is either generated by the cube_server or taken from the environment variable CUBE_SERVER_NAME.

Usually cube_server runs until the user kills the process (Ctrl+C or the command 'kill'). If cube_server is started with the command line option "-c", it serves one connection and finishes once that connection is closed.

[client]$ ssh -L 3300:server.example.com:3300 server.example.com

[server.example.com]$ cube_server

Cube Server: CubeLib-4.7.0 (external) [POSIX]

cube_server[5247] Waiting for connections on port 3300.
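The port forwarding call above follows a fixed pattern, local port, remote host, and remote port. The following Python sketch assembles the same argument list for an arbitrary host; the helper name `tunnel_command` is hypothetical, and the sketch only builds the command without executing it.

```python
def tunnel_command(host, port=3300):
    """Return the ssh argument list that forwards localhost:port to host:port."""
    return ["ssh", "-L", f"{port}:{host}:{port}", host]


cmd = tunnel_command("server.example.com")
print(" ".join(cmd))  # ssh -L 3300:server.example.com:3300 server.example.com
```

The resulting list could be handed to `subprocess.run` by a wrapper script; a client would then connect to localhost on the forwarded port.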

This tool performs a series of checks to confirm that the stored data makes semantic sense, e.g. "non-negative time" and similar. It is used as a correctness tool for Score-P and Scalasca measurements.

user@host: cube_sanity profile.cubex

Name not empty or UNKNOWN ... 71 / 71 OK

No ANONYMOUS functions ... 71 / 71 OK

No TRUNCATED functions ... 71 / 71 OK

File name not empty ... 71 / 71 OK

Proper line numbers ... 0 / 71 OK

No TRACING outside Init and Finalize ... 123 / 123 OK

No negative inclusive metrics ... 409344 / 440832 OK

No negative exclusive metrics ... 409344 / 440832 OK
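Such a report can be post-processed automatically. The following Python sketch parses lines of the form shown above and lists the checks where the first number is smaller than the second. It assumes that the two numbers mean "items passed / items examined", which is an interpretation of the example output and not taken from the tool's documentation; the function name `incomplete_checks` is hypothetical.

```python
import re

# A few lines copied from the cube_sanity example output above.
report = """\
Name not empty or UNKNOWN ... 71 / 71 OK
No ANONYMOUS functions ... 71 / 71 OK
Proper line numbers ... 0 / 71 OK
No negative inclusive metrics ... 409344 / 440832 OK
"""

LINE = re.compile(
    r"^(?P<check>.+?) \.\.\. (?P<passed>\d+) / (?P<total>\d+) (?P<status>\S+)$"
)


def incomplete_checks(text):
    """Return (check, passed, total) for every line where passed < total."""
    result = []
    for line in text.splitlines():
        m = LINE.match(line.strip())
        if m and int(m["passed"]) < int(m["total"]):
            result.append((m["check"], int(m["passed"]), int(m["total"])))
    return result


for check, passed, total in incomplete_checks(report):
    print(f"{check}: {passed} of {total}")
```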

A small tool that adds a user-defined derived metric to the input .cubex file. The same functionality is provided by the remapping tool (cube_remap2) as well, but for historical reasons this tool is still delivered.

user@host: cube_derive -t postderived -r exclusive -e "metric::time()/metric::visits(e)" -p root kenobi profile.cubex -o result.cubex

user@host: cube_dump -m kenobi -c 0 -t aggr -z incl result.cubex

===================== DATA ===================

Print out the data of the metric kenobi

All threads

-------------------------------------------------------------------------------

MAIN__(id=0) 1389.06

A small tool to inspect aggregated values in the call tree of a specific metric. The functionality of this tool is reproduced in one way or another by the cube_dump utility.

user@host:cube_calltree -m time -a -t 1 -i -p -c ~/Studies/Cube/Adviser/4n_256_/scorep_casino_1n_256x1_sum_br/profile.cubex

Reading /home/zam/psaviank/Studies/Cube/Adviser/4n_256_/scorep_casino_1n_256x1_sum_br/profile.cubex... done.

355599 (100%) MAIN__ USR:/MAIN__

353588 (99.435%) + monte_carlo_ USR:/MAIN__/monte_carlo_

8941.95 (2.515%) | + monte_carlo_IP_read_wave_function_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_read_wave_function_

5980.68 (1.682%) | | + MPI_Bcast USR:/MAIN__/monte_carlo_/monte_carlo_IP_read_wave_function_/MPI_Bcast

343175 (96.506%) | + monte_carlo_IP_run_dmc_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_

4657.85 (1.31%) | | + MPI_Ssend USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/MPI_Ssend

303193 (85.263%) | | + dmcdmc_main.move_config_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_

21668.4 (6.093%) | | | + wfn_utils.wfn_loggrad_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_/wfn_utils.wfn_loggrad_

19722.3 (5.546%) | | | | + pjastrow.oneelec_jastrow_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_/wfn_utils.wfn_loggrad_/pjastrow.oneelec_jastrow_

28878.5 (8.121%) | | | + wfn_utils.wfn_ratio_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_/wfn_utils.wfn_ratio_

6907.92 (1.943%) | | | | + slater.wfn_ratio_slater_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_/wfn_utils.wfn_ratio_/slater.wfn_ratio_slater_

19721.7 (5.546%) | | | | + pjastrow.oneelec_jastrow_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_/wfn_utils.wfn_ratio_/pjastrow.oneelec_jastrow_

241626 (67.949%) | | | + energy_utils.eval_local_energy_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_/energy_utils.eval_local_energy_

20785.6 (5.845%) | | | | + wfn_utils.wfn_loggrad_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_/energy_utils.eval_local_energy_/wfn_utils.wfn_loggrad_

20298.1 (5.708%) | | | | | + pjastrow.oneelec_jastrow_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_/energy_utils.eval_local_energy_/wfn_utils.wfn_loggrad_/pjastrow.oneelec_jastrow_

211484 (59.473%) | | | | + non_local.calc_nl_projection_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_/energy_utils.eval_local_energy_/non_local.calc_nl_projection_

208516 (58.638%) | | | | | + wfn_utils.wfn_ratio_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_/energy_utils.eval_local_energy_/non_local.calc_nl_projection_/wfn_utils.wfn_ratio_

8588.87 (2.415%) | | | | | | + slater.wfn_ratio_slater_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_/energy_utils.eval_local_energy_/non_local.calc_nl_projection_/wfn_utils.wfn_ratio_/slater.wfn_ratio_slater_

176779 (49.713%) | | | | | | + pjastrow.oneelec_jastrow_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_/energy_utils.eval_local_energy_/non_local.calc_nl_projection_/wfn_utils.wfn_ratio_/pjastrow.oneelec_jastrow_

14252.4 (4.008%) | | | | | | + scratch.get_eevecs1_ch_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/dmcdmc_main.move_config_/energy_utils.eval_local_energy_/non_local.calc_nl_projection_/wfn_utils.wfn_ratio_/scratch.get_eevecs1_ch_

34390.8 (9.671%) | | + parallel.qmpi_reduce_d1_ USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/parallel.qmpi_reduce_d1_

34384.3 (9.669%) | | | + MPI_Reduce USR:/MAIN__/monte_carlo_/monte_carlo_IP_run_dmc_/parallel.qmpi_reduce_d1_/MPI_Reduce

If one is interested in the performance profile of only a part of the system, e.g. only nodeplane 0 or the ranks from 0 to N, one can set the other locations to VOID and create a .cubex file that contains data only for the remaining part.

Partition some of system tree (processes) and void the remainder

user@host:cube_part -R 0-3,5 profile.cubex

Open the cube profile.cubex...done.

Copy the cube object...done.

++++++++++++ Part operation begins ++++++++++++++++++++++++++

Voiding 251 of 256 processes.

++++++++++++ Part operation ends successfully ++++++++++++++++

Writing part ... done.
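The number of voided processes in the output above follows directly from the rank list. The following Python sketch expands a list such as 0-3,5 and reproduces the count for a run with 256 processes; the helper `expand_ranks` is hypothetical and only mirrors the notation used on the command line, not the tool's actual parser.

```python
def expand_ranks(spec):
    """Expand a rank list such as "0-3,5" into a sorted list of integers."""
    ranks = set()
    for part in spec.split(","):
        if "-" in part:
            first, last = part.split("-")
            ranks.update(range(int(first), int(last) + 1))
        else:
            ranks.add(int(part))
    return sorted(ranks)


kept = expand_ranks("0-3,5")
total_processes = 256

print(kept)                         # [0, 1, 2, 3, 5]
print(total_processes - len(kept))  # 251
```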

This tool displays a flat profile for the selected metrics together with a classification of regions. Similar functionality is provided by the cube_dump tool.

A short call displays an overview across the types of regions.

user@host:cube_regioninfo -m time,visits profile.cubex

done.

bl type time % visits % region

ANY 355599 100.00 664724749 100.00 (summary) ALL

MPI 0 0.00 0 0.00 (summary) MPI

USR 355599 100.00 664724749 100.00 (summary) USR

COM 0 0.00 0 0.00 (summary) COM

If one wants a detailed view of the flat profile, one uses the -r command line option. Note that one can provide a list of regions for filtering (file filter.txt).

user@host:cube_regioninfo -l filter.txt -m time,visits -r profile.cubex

done.

bl type time % visits % region

USR 0 0.00 0 0.00 MEASUREMENT OFF

USR 0 0.00 0 0.00 TRACE BUFFER FLUSH

USR 0 0.00 0 0.00 THREADS

USR 0 0.00 0 0.00 MPI_Accumulate

USR 68 0.02 6912 0.00 MPI_Allgather

USR 0 0.00 0 0.00 MPI_Allgatherv

USR 10 0.00 256 0.00 MPI_Allreduce

USR 0 0.00 0 0.00 MPI_Alltoall

USR 0 0.00 0 0.00 MPI_Alltoallv

USR 0 0.00 0 0.00 MPI_Alltoallw

USR 0 0.00 0 0.00 MPI_Attr_delete

USR 0 0.00 0 0.00 MPI_Attr_get

USR 0 0.00 0 0.00 MPI_Attr_put

USR 2843 0.80 7168 0.00 MPI_Barrier

USR 6392 1.80 51072 0.01 MPI_Bcast

USR 0 0.00 0 0.00 MPI_Bsend

USR 0 0.00 0 0.00 MPI_Bsend_init

USR 0 0.00 0 0.00 MPI_Buffer_attach

USR 0 0.00 0 0.00 MPI_Buffer_detach

USR 0 0.00 0 0.00 MPI_Cancel

USR 0 0.00 0 0.00 MPI_Cart_coords

USR 0 0.00 0 0.00 MPI_Cart_create

USR 0 0.00 0 0.00 MPI_Cart_get

USR 0 0.00 0 0.00 MPI_Cart_map

USR 0 0.00 0 0.00 MPI_Cart_rank

USR 0 0.00 0 0.00 MPI_Cart_shift

USR 0 0.00 0 0.00 MPI_Cart_sub

USR 0 0.00 0 0.00 MPI_Cartdim_get

USR 0 0.00 0 0.00 MPI_Comm_call_errhandler

USR 0 0.00 0 0.00 MPI_Comm_compare

USR 113 0.03 1792 0.00 MPI_Comm_create

USR 0 0.00 0 0.00 MPI_Comm_create_errhandler

USR 0 0.00 0 0.00 MPI_Comm_create_group

USR 0 0.00 0 0.00 MPI_Comm_create_keyval

USR 0 0.00 0 0.00 MPI_Comm_delete_attr

USR 0 0.00 0 0.00 MPI_Comm_dup

USR 0 0.00 0 0.00 MPI_Comm_dup_with_info

USR 0 0.00 0 0.00 MPI_Comm_free

USR 0 0.00 0 0.00 MPI_Comm_free_keyval

USR 0 0.00 0 0.00 MPI_Comm_get_attr

USR 0 0.00 0 0.00 MPI_Comm_get_errhandler

USR 0 0.00 0 0.00 MPI_Comm_get_info

USR 0 0.00 0 0.00 MPI_Comm_get_name

USR 0 0.00 1024 0.00 MPI_Comm_group

USR 0 0.00 0 0.00 MPI_Comm_idup

USR 0 0.00 512 0.00 MPI_Comm_rank

USR 0 0.00 0 0.00 MPI_Comm_remote_group

USR 0 0.00 0 0.00 MPI_Comm_remote_size

USR 0 0.00 0 0.00 MPI_Comm_set_attr

USR 0 0.00 0 0.00 MPI_Comm_set_errhandler

USR 0 0.00 0 0.00 MPI_Comm_set_info

USR 0 0.00 0 0.00 MPI_Comm_set_name

USR 0 0.00 512 0.00 MPI_Comm_size

USR 55 0.02 256 0.00 MPI_Comm_split

USR 0 0.00 0 0.00 MPI_Comm_split_type

USR 0 0.00 0 0.00 MPI_Comm_test_inter

USR 0 0.00 0 0.00 MPI_Compare_and_swap

USR 0 0.00 0 0.00 MPI_Dims_create

USR 0 0.00 0 0.00 MPI_Dist_graph_create

USR 0 0.00 0 0.00 MPI_Dist_graph_create_adjacent

USR 0 0.00 0 0.00 MPI_Dist_graph_neighbors

USR 0 0.00 0 0.00 MPI_Dist_graph_neighbors_count

USR 0 0.00 0 0.00 MPI_Exscan

USR 0 0.00 0 0.00 MPI_Fetch_and_op

USR 0 0.00 0 0.00 MPI_File_c2f

USR 0 0.00 0 0.00 MPI_File_call_errhandler

USR 0 0.00 0 0.00 MPI_File_close

USR 0 0.00 0 0.00 MPI_File_create_errhandler

USR 0 0.00 0 0.00 MPI_File_delete

USR 0 0.00 0 0.00 MPI_File_f2c

USR 0 0.00 0 0.00 MPI_File_get_amode

USR 0 0.00 0 0.00 MPI_File_get_atomicity

USR 0 0.00 0 0.00 MPI_File_get_byte_offset

USR 0 0.00 0 0.00 MPI_File_get_errhandler

USR 0 0.00 0 0.00 MPI_File_get_group

USR 0 0.00 0 0.00 MPI_File_get_info

USR 0 0.00 0 0.00 MPI_File_get_position

USR 0 0.00 0 0.00 MPI_File_get_position_shared

USR 0 0.00 0 0.00 MPI_File_get_size

USR 0 0.00 0 0.00 MPI_File_get_type_extent

USR 0 0.00 0 0.00 MPI_File_get_view

USR 0 0.00 0 0.00 MPI_File_iread

USR 0 0.00 0 0.00 MPI_File_iread_all

USR 0 0.00 0 0.00 MPI_File_iread_at

USR 0 0.00 0 0.00 MPI_File_iread_at_all

USR 0 0.00 0 0.00 MPI_File_iread_shared

USR 0 0.00 0 0.00 MPI_File_iwrite

USR 0 0.00 0 0.00 MPI_File_iwrite_all

USR 0 0.00 0 0.00 MPI_File_iwrite_at

USR 0 0.00 0 0.00 MPI_File_iwrite_at_all

USR 0 0.00 0 0.00 MPI_File_iwrite_shared

USR 0 0.00 0 0.00 MPI_File_open

USR 0 0.00 0 0.00 MPI_File_preallocate

USR 0 0.00 0 0.00 MPI_File_read

USR 0 0.00 0 0.00 MPI_File_read_all

USR 0 0.00 0 0.00 MPI_File_read_all_begin

USR 0 0.00 0 0.00 MPI_File_read_all_end

USR 0 0.00 0 0.00 MPI_File_read_at

USR 0 0.00 0 0.00 MPI_File_read_at_all

USR 0 0.00 0 0.00 MPI_File_read_at_all_begin

USR 0 0.00 0 0.00 MPI_File_read_at_all_end

USR 0 0.00 0 0.00 MPI_File_read_ordered

USR 0 0.00 0 0.00 MPI_File_read_ordered_begin

USR 0 0.00 0 0.00 MPI_File_read_ordered_end

USR 0 0.00 0 0.00 MPI_File_read_shared

USR 0 0.00 0 0.00 MPI_File_seek

USR 0 0.00 0 0.00 MPI_File_seek_shared

USR 0 0.00 0 0.00 MPI_File_set_atomicity

USR 0 0.00 0 0.00 MPI_File_set_errhandler

USR 0 0.00 0 0.00 MPI_File_set_info

USR 0 0.00 0 0.00 MPI_File_set_size

USR 0 0.00 0 0.00 MPI_File_set_view

USR 0 0.00 0 0.00 MPI_File_sync

USR 0 0.00 0 0.00 MPI_File_write

USR 0 0.00 0 0.00 MPI_File_write_all

USR 0 0.00 0 0.00 MPI_File_write_all_begin

USR 0 0.00 0 0.00 MPI_File_write_all_end

USR 0 0.00 0 0.00 MPI_File_write_at

USR 0 0.00 0 0.00 MPI_File_write_at_all

USR 0 0.00 0 0.00 MPI_File_write_at_all_begin

USR 0 0.00 0 0.00 MPI_File_write_at_all_end

USR 0 0.00 0 0.00 MPI_File_write_ordered

USR 0 0.00 0 0.00 MPI_File_write_ordered_begin

USR 0 0.00 0 0.00 MPI_File_write_ordered_end

USR 0 0.00 0 0.00 MPI_File_write_shared

USR 349 0.10 256 0.00 MPI_Finalize

USR 0 0.00 0 0.00 MPI_Finalized

USR 0 0.00 25 0.00 MPI_Gather

USR 0 0.00 0 0.00 MPI_Gatherv

USR 0 0.00 0 0.00 MPI_Get

USR 0 0.00 0 0.00 MPI_Get_accumulate

USR 0 0.00 0 0.00 MPI_Get_library_version

USR 0 0.00 0 0.00 MPI_Graph_create

USR 0 0.00 0 0.00 MPI_Graph_get

USR 0 0.00 0 0.00 MPI_Graph_map

USR 0 0.00 0 0.00 MPI_Graph_neighbors

USR 0 0.00 0 0.00 MPI_Graph_neighbors_count

USR 0 0.00 0 0.00 MPI_Graphdims_get

USR 0 0.00 0 0.00 MPI_Group_compare

USR 0 0.00 0 0.00 MPI_Group_difference

USR 0 0.00 0 0.00 MPI_Group_excl

USR 0 0.00 0 0.00 MPI_Group_free

USR 0 0.00 1792 0.00 MPI_Group_incl

USR 0 0.00 0 0.00 MPI_Group_intersection

USR 0 0.00 0 0.00 MPI_Group_range_excl

USR 0 0.00 0 0.00 MPI_Group_range_incl

USR 0 0.00 0 0.00 MPI_Group_rank

USR 0 0.00 0 0.00 MPI_Group_size

USR 0 0.00 0 0.00 MPI_Group_translate_ranks

USR 0 0.00 0 0.00 MPI_Group_union

USR 0 0.00 0 0.00 MPI_Iallgather

USR 0 0.00 0 0.00 MPI_Iallgatherv

USR 0 0.00 0 0.00 MPI_Iallreduce

USR 0 0.00 0 0.00 MPI_Ialltoall

USR 0 0.00 0 0.00 MPI_Ialltoallv

USR 0 0.00 0 0.00 MPI_Ialltoallw

USR 0 0.00 0 0.00 MPI_Ibarrier

USR 0 0.00 0 0.00 MPI_Ibcast

USR 0 0.00 0 0.00 MPI_Ibsend

USR 0 0.00 0 0.00 MPI_Iexscan

USR 0 0.00 0 0.00 MPI_Igather

USR 0 0.00 0 0.00 MPI_Igatherv

USR 0 0.00 0 0.00 MPI_Improbe

USR 0 0.00 0 0.00 MPI_Imrecv

USR 0 0.00 0 0.00 MPI_Ineighbor_allgather

USR 0 0.00 0 0.00 MPI_Ineighbor_allgatherv

USR 0 0.00 0 0.00 MPI_Ineighbor_alltoall

USR 0 0.00 0 0.00 MPI_Ineighbor_alltoallv

USR 0 0.00 0 0.00 MPI_Ineighbor_alltoallw

USR 1411 0.40 256 0.00 MPI_Init

USR 0 0.00 0 0.00 MPI_Init_thread

USR 0 0.00 0 0.00 MPI_Initialized

USR 0 0.00 0 0.00 MPI_Intercomm_create

USR 0 0.00 0 0.00 MPI_Intercomm_merge

USR 0 0.00 0 0.00 MPI_Iprobe

USR 0 0.00 14337 0.00 MPI_Irecv

USR 0 0.00 0 0.00 MPI_Ireduce

USR 0 0.00 0 0.00 MPI_Ireduce_scatter

USR 0 0.00 0 0.00 MPI_Ireduce_scatter_block

USR 0 0.00 0 0.00 MPI_Irsend

USR 0 0.00 0 0.00 MPI_Is_thread_main

USR 0 0.00 0 0.00 MPI_Iscan

USR 0 0.00 0 0.00 MPI_Iscatter

USR 0 0.00 0 0.00 MPI_Iscatterv

USR 1 0.00 14337 0.00 MPI_Isend

USR 0 0.00 0 0.00 MPI_Issend

USR 0 0.00 0 0.00 MPI_Keyval_create

USR 0 0.00 0 0.00 MPI_Keyval_free

USR 0 0.00 0 0.00 MPI_Mprobe

USR 0 0.00 0 0.00 MPI_Mrecv

USR 0 0.00 0 0.00 MPI_Neighbor_allgather

USR 0 0.00 0 0.00 MPI_Neighbor_allgatherv

USR 0 0.00 0 0.00 MPI_Neighbor_alltoall

USR 0 0.00 0 0.00 MPI_Neighbor_alltoallv

USR 0 0.00 0 0.00 MPI_Neighbor_alltoallw

USR 0 0.00 0 0.00 MPI_Probe

USR 0 0.00 0 0.00 MPI_Put

USR 0 0.00 0 0.00 MPI_Query_thread

USR 0 0.00 0 0.00 MPI_Raccumulate

USR 302 0.08 24728 0.00 MPI_Recv

USR 0 0.00 0 0.00 MPI_Recv_init

USR 34384 9.67 6400 0.00 MPI_Reduce

USR 0 0.00 0 0.00 MPI_Reduce_local

USR 0 0.00 0 0.00 MPI_Reduce_scatter

USR 0 0.00 0 0.00 MPI_Reduce_scatter_block

USR 0 0.00 0 0.00 MPI_Register_datarep

USR 0 0.00 0 0.00 MPI_Request_free

USR 0 0.00 0 0.00 MPI_Rget

USR 0 0.00 0 0.00 MPI_Rget_accumulate

USR 0 0.00 0 0.00 MPI_Rput

USR 0 0.00 0 0.00 MPI_Rsend

USR 0 0.00 0 0.00 MPI_Rsend_init

USR 0 0.00 0 0.00 MPI_Scan

USR 0 0.00 0 0.00 MPI_Scatter

USR 0 0.00 0 0.00 MPI_Scatterv

USR 0 0.00 0 0.00 MPI_Send

USR 0 0.00 0 0.00 MPI_Send_init

USR 0 0.00 0 0.00 MPI_Sendrecv

USR 0 0.00 0 0.00 MPI_Sendrecv_replace

USR 4695 1.32 24728 0.00 MPI_Ssend

USR 0 0.00 0 0.00 MPI_Ssend_init

USR 0 0.00 0 0.00 MPI_Start

USR 0 0.00 0 0.00 MPI_Startall

USR 0 0.00 0 0.00 MPI_Test

USR 0 0.00 0 0.00 MPI_Test_cancelled

USR 0 0.00 0 0.00 MPI_Testall

USR 0 0.00 0 0.00 MPI_Testany

USR 0 0.00 0 0.00 MPI_Testsome

USR 0 0.00 0 0.00 MPI_Topo_test

USR 0 0.00 0 0.00 MPI_Wait

USR 0 0.00 2982 0.00 MPI_Waitall

USR 0 0.00 0 0.00 MPI_Waitany

USR 0 0.00 0 0.00 MPI_Waitsome

USR 0 0.00 0 0.00 MPI_Win_allocate

USR 0 0.00 0 0.00 MPI_Win_allocate_shared

USR 0 0.00 0 0.00 MPI_Win_attach

USR 0 0.00 0 0.00 MPI_Win_call_errhandler

USR 0 0.00 0 0.00 MPI_Win_complete

USR 0 0.00 0 0.00 MPI_Win_create

USR 0 0.00 0 0.00 MPI_Win_create_dynamic

USR 0 0.00 0 0.00 MPI_Win_create_errhandler

USR 0 0.00 0 0.00 MPI_Win_create_keyval

USR 0 0.00 0 0.00 MPI_Win_delete_attr

USR 0 0.00 0 0.00 MPI_Win_detach

USR 0 0.00 0 0.00 MPI_Win_fence

USR 0 0.00 0 0.00 MPI_Win_flush

USR 0 0.00 0 0.00 MPI_Win_flush_all

USR 0 0.00 0 0.00 MPI_Win_flush_local

USR 0 0.00 0 0.00 MPI_Win_flush_local_all

USR 0 0.00 0 0.00 MPI_Win_free

USR 0 0.00 0 0.00 MPI_Win_free_keyval

USR 0 0.00 0 0.00 MPI_Win_get_attr

USR 0 0.00 0 0.00 MPI_Win_get_errhandler

USR 0 0.00 0 0.00 MPI_Win_get_group

USR 0 0.00 0 0.00 MPI_Win_get_info

USR 0 0.00 0 0.00 MPI_Win_get_name

USR 0 0.00 0 0.00 MPI_Win_lock

USR 0 0.00 0 0.00 MPI_Win_lock_all

USR 0 0.00 0 0.00 MPI_Win_post

USR 0 0.00 0 0.00 MPI_Win_set_attr

USR 0 0.00 0 0.00 MPI_Win_set_errhandler

USR 0 0.00 0 0.00 MPI_Win_set_info

* USR 0 0.00 0 0.00 MPI_Win_set_name

* USR 0 0.00 0 0.00 MPI_Win_shared_query

* USR 0 0.00 0 0.00 MPI_Win_start

* USR 0 0.00 0 0.00 MPI_Win_sync

* USR 0 0.00 0 0.00 MPI_Win_test

* USR 0 0.00 0 0.00 MPI_Win_unlock

* USR 0 0.00 0 0.00 MPI_Win_unlock_all

* USR 0 0.00 0 0.00 MPI_Win_wait

* USR 0 0.00 0 0.00 PARALLEL

* USR 155 0.04 256 0.00 MAIN__

* USR 0 0.00 256 0.00 get_nnpsmp_

* USR 1 0.00 126669 0.02 run_control.check_alloc_

* USR 8 0.00 256 0.00 get_smp_list_

* USR 0 0.00 256 0.00 set_shm_numablock

* USR 401 0.11 256 0.00 monte_carlo_

* USR 0 0.00 256 0.00 monte_carlo_IP_set_defaults_

* USR 124 0.03 256 0.00 monte_carlo_IP_set_input_parameters_

* USR 10 0.00 256 0.00 monte_carlo_IP_read_particles_

* USR 3 0.00 256 0.00 monte_carlo_IP_read_custom_spindep_

* USR 6 0.00 256 0.00 monte_carlo_IP_assign_spin_deps_

* USR 2 0.00 256 0.00 monte_carlo_IP_check_input_parameters_

* USR 0 0.00 256 0.00 monte_carlo_IP_input_setup_

* USR 0 0.00 1 0.00 monte_carlo_IP_print_input_parameters_

* USR 0 0.00 62 0.00 monte_carlo_IP_wout_iparam_

* USR 0 0.00 1 0.00 monte_carlo_IP_print_particles_

* USR 107 0.03 256 0.00 monte_carlo_IP_read_wave_function_

* USR 2 0.00 256 0.00 alloc_shm_

* USR 0 0.00 256 0.00 alloc_shm_sysv

* USR 0 0.00 256 0.00 monte_carlo_IP_flag_missing_dets_

* USR 0 0.00 1 0.00 monte_carlo_IP_check_wave_function_

* USR 36 0.01 256 0.00 monte_carlo_IP_calculate_geometry_

* USR 27 0.01 256 0.00 monte_carlo_IP_read_pseudopotentials_

* USR 0 0.00 256 0.00 monte_carlo_IP_geometry_printout_

* USR 1 0.00 256 0.00 monte_carlo_IP_orbital_setup_

* USR 0 0.00 256 0.00 monte_carlo_IP_check_orbital_derivatives_

* USR 0 0.00 256 0.00 monte_carlo_IP_setup_expectation_values_

* USR 0 0.00 256 0.00 monte_carlo_IP_setup_interactions_

* USR 0 0.00 256 0.00 monte_carlo_IP_check_nn_

* USR 98 0.03 256 0.00 monte_carlo_IP_read_jastrow_function_

* USR 0 0.00 256 0.00 monte_carlo_IP_read_backflow_

* USR 8 0.00 256 0.00 monte_carlo_IP_init_vcpp_

* USR 0 0.00 256 0.00 monte_carlo_IP_print_part_title_

* USR 281 0.08 256 0.00 monte_carlo_IP_run_dmc_

* USR 11021 3.10 25041 0.00 dmcdmc_main.move_config_

USR 2434 0.68 45059225 6.78 wfn_utils.wfn_loggrad_

USR 236521 66.51 175964383 26.47 pjastrow.oneelec_jastrow_

USR 9886 2.78 144014658 21.67 wfn_utils.wfn_ratio_

USR 15497 4.36 144014658 21.67 slater.wfn_ratio_slater_

USR 15512 4.36 144014658 21.67 scratch.get_eevecs1_ch_

USR 9356 2.63 25041 0.00 energy_utils.eval_local_energy_

USR 2967 0.83 11268450 1.70 non_local.calc_nl_projection_

USR 7 0.00 6400 0.00 parallel.qmpi_reduce_d1_

USR 0 0.00 6400 0.00 parallel.qmpi_bcast_d1_

USR 0 0.00 12800 0.00 parallel.qmpi_bcast_d_

USR 16 0.00 9382 0.00 dmc.branch_and_redist_

USR 39 0.01 6144 0.00 dmc.branch_and_redist_send_

USR 1 0.00 2982 0.00 dmc.branch_and_redist_recv_

USR 4 0.00 256 0.00 dmc.branch_and_redist_sendrecv_

USR 0 0.00 256 0.00 dealloc_shm_

USR 445 0.13 256 0.00 dealloc_shm_sysv

USR 0 0.00 256 0.00 clean_shm_

ANY 355599 100.00 664724749 100.00 (summary) ALL

MPI 0 0.00 0 0.00 (summary) MPI

USR 355599 100.00 664724749 100.00 (summary) USR

COM 0 0.00 0 0.00 (summary) COM

BL 12291 3.46 159199 0.02 (summary) BL

ANY 343308 96.54 664565550 99.98 (summary) ALL-BL

MPI 0 0.00 0 0.00 (summary) MPI-BL

USR 343308 96.54 664565550 99.98 (summary) USR-BL

COM 0 0.00 0 0.00 (summary) COM-BL
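The "-BL" summary rows are the totals minus the contribution of the regions marked with "*" in the first column. The following Python sketch recomputes those rows from the numbers in the example output; the helper `percent` is introduced here for illustration only.

```python
# Totals taken from the "(summary)" rows of the example output above.
total_time, total_visits = 355599, 664724749
bl_time, bl_visits = 12291, 159199


def percent(part, whole):
    """Share of part in whole, rounded to two decimals as in the report."""
    return round(100.0 * part / whole, 2)


rest_time = total_time - bl_time
rest_visits = total_visits - bl_visits

print(rest_time, percent(rest_time, total_time))        # 343308 96.54
print(rest_visits, percent(rest_visits, total_visits))  # 664565550 99.98
print(percent(bl_time, total_time))                     # 3.46
```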

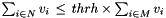

Another tool that allows examining performance data along the call tree, with the ability to filter according to user-defined criteria. Part of the same functionality is implemented by cube_dump and cube_calltree.

user@host: cube_info -t -m bytes_sent profile.cubex

| Time | bytes_sent | Diff-Calltree

| 355598 | 14204637004 | * MAIN__

| 1411 | 0 | | * MPI_Init

| 0 | 0 | | * MPI_Comm_size

| 0 | 0 | | * MPI_Comm_rank

| 0 | 0 | | * get_nnpsmp_

| 0 | 0 | | * run_control.check_alloc_

| 69 | 917504 | | * get_smp_list_

| 0 | 0 | | | * MPI_Comm_rank

| 0 | 0 | | | * MPI_Comm_size

| 9 | 262144 | | | * MPI_Allreduce

| 0 | 0 | | | * set_shm_numablock

| 27 | 655360 | | | * MPI_Allgather

| 0 | 0 | | | * MPI_Comm_group

| 0 | 0 | | | * MPI_Group_incl

| 25 | 0 | | | * MPI_Comm_create

| 0 | 0 | | * MPI_Comm_group

| 0 | 0 | | * MPI_Group_incl

| 24 | 0 | | * MPI_Comm_create

| 353588 | 14203719500 | | * monte_carlo_

| 0 | 0 | | | * monte_carlo_IP_set_defaults_

| 142 | 0 | | | * monte_carlo_IP_set_input_parameters_

| 0 | 0 | | | | * run_control.check_alloc_

| 10 | 0 | | | | * monte_carlo_IP_read_particles_

| 0 | 0 | | | | | * run_control.check_alloc_

| 3 | 0 | | | | * monte_carlo_IP_read_custom_spindep_

| 5 | 0 | | | | * monte_carlo_IP_assign_spin_deps_

| 0 | 0 | | | | | * run_control.check_alloc_

| 174 | 1024 | | | * monte_carlo_IP_check_input_parameters_

| 172 | 1024 | | | | * MPI_Bcast

| 0 | 0 | | | * monte_carlo_IP_input_setup_

| 0 | 0 | | | * monte_carlo_IP_print_input_parameters_

| 0 | 0 | | | | * monte_carlo_IP_wout_iparam_

| 0 | 0 | | | * monte_carlo_IP_print_particles_

| 68 | 12288 | | | * MPI_Bcast

| 0 | 0 | | | * run_control.check_alloc_

| 0 | 0 | | | * MPI_Comm_group

| 0 | 0 | | | * MPI_Group_incl

| 23 | 0 | | | * MPI_Comm_create

| 8941 | 12811606784 | | | * monte_carlo_IP_read_wave_function_

| 2792 | 0 | | | | * MPI_Barrier

| 55 | 0 | | | | * MPI_Comm_split

| 5980 | 12811605760 | | | | * MPI_Bcast

| 0 | 0 | | | | * run_control.check_alloc_

| 5 | 1024 | | | | * alloc_shm_

| 4 | 1024 | | | | | * alloc_shm_sysv

| 3 | 1024 | | | | | | * MPI_Bcast

| 0 | 0 | | | | | | * MPI_Barrier

| 0 | 0 | | | | * monte_carlo_IP_flag_missing_dets_

| 0 | 0 | | | | | * run_control.check_alloc_

| 0 | 0 | | | * monte_carlo_IP_check_wave_function_

| 36 | 0 | | | * monte_carlo_IP_calculate_geometry_

| 0 | 0 | | | | * run_control.check_alloc_

| 27 | 0 | | | * monte_carlo_IP_read_pseudopotentials_

| 0 | 0 | | | | * run_control.check_alloc_

| 0 | 0 | | | * monte_carlo_IP_geometry_printout_

| 0 | 0 | | | | * run_control.check_alloc_

| 0 | 0 | | | * monte_carlo_IP_orbital_setup_

| 0 | 0 | | | | * run_control.check_alloc_

| 0 | 0 | | | * monte_carlo_IP_check_orbital_derivatives_

| 0 | 0 | | | * monte_carlo_IP_setup_expectation_values_

| 0 | 0 | | | * monte_carlo_IP_setup_interactions_

| 0 | 0 | | | | * run_control.check_alloc_

| 46 | 2048 | | | * monte_carlo_IP_check_nn_

| 0 | 0 | | | | * run_control.check_alloc_

| 46 | 2048 | | | | * MPI_Bcast

| 97 | 0 | | | * monte_carlo_IP_read_jastrow_function_

| 0 | 0 | | | | * run_control.check_alloc_

| 0 | 0 | | | * monte_carlo_IP_read_backflow_

| 7 | 0 | | | * monte_carlo_IP_init_vcpp_

| 0 | 0 | | | * monte_carlo_IP_print_part_title_

| 343175 | 1392097356 | | | * monte_carlo_IP_run_dmc_

| 0 | 0 | | | | * run_control.check_alloc_

| 0 | 0 | | | | * MPI_Comm_group

| 0 | 0 | | | | * MPI_Group_incl

| 38 | 0 | | | | * MPI_Comm_create

| 64 | 747520 | | | | * MPI_Bcast

| 4657 | 11140276 | | | | * MPI_Ssend

| 303193 | 0 | | | | * dmcdmc_main.move_config_

| 0 | 0 | | | | | * run_control.check_alloc_

| 21668 | 0 | | | | | * wfn_utils.wfn_loggrad_

| 19722 | 0 | | | | | | * pjastrow.oneelec_jastrow_

| 28878 | 0 | | | | | * wfn_utils.wfn_ratio_

| 6907 | 0 | | | | | | * slater.wfn_ratio_slater_

| 1259 | 0 | | | | | | * scratch.get_eevecs1_ch_

| 19721 | 0 | | | | | | * pjastrow.oneelec_jastrow_

| 241625 | 0 | | | | | * energy_utils.eval_local_energy_

| 20785 | 0 | | | | | | * wfn_utils.wfn_loggrad_

| 20298 | 0 | | | | | | | * pjastrow.oneelec_jastrow_

| 211483 | 0 | | | | | | * non_local.calc_nl_projection_

| 208516 | 0 | | | | | | | * wfn_utils.wfn_ratio_

| 8588 | 0 | | | | | | | | * slater.wfn_ratio_slater_

| 176779 | 0 | | | | | | | | * pjastrow.oneelec_jastrow_

| 14252 | 0 | | | | | | | | * scratch.get_eevecs1_ch_

| 34390 | 819200 | | | | * parallel.qmpi_reduce_d1_

| 34384 | 819200 | | | | | * MPI_Reduce

| 56 | 0 | | | | * MPI_Recv

| 0 | 800 | | | | * MPI_Gather

| 21 | 102400 | | | | * parallel.qmpi_bcast_d1_

| 21 | 102400 | | | | | * MPI_Bcast

| 34 | 102400 | | | | * parallel.qmpi_bcast_d_

| 34 | 102400 | | | | | * MPI_Bcast

| 429 | 1378922616 | | | | * dmc.branch_and_redist_

| 379 | 1319421620 | | | | | * dmc.branch_and_redist_send_

| 0 | 0 | | | | | | * run_control.check_alloc_

| 25 | 6291456 | | | | | | * MPI_Allgather

| 230 | 0 | | | | | | * MPI_Recv

| 33 | 152036 | | | | | | * MPI_Ssend

| 50 | 0 | | | | | | * MPI_Barrier

| 0 | 1312978128 | | | | | | * MPI_Isend

| 0 | 0 | | | | | | * MPI_Irecv

| 1 | 0 | | | | | * dmc.branch_and_redist_recv_

| 0 | 0 | | | | | | * MPI_Waitall

| 0 | 0 | | | | | | * run_control.check_alloc_

| 32 | 59500996 | | | | | * dmc.branch_and_redist_sendrecv_

| 0 | 0 | | | | | | * run_control.check_alloc_

| 8 | 262144 | | | | | | * MPI_Allgather

| 14 | 0 | | | | | | * MPI_Recv

| 4 | 59238852 | | | | | | * MPI_Ssend

| 6 | 262144 | | | | * MPI_Allgather

| 444 | 0 | | | * dealloc_shm_

| 444 | 0 | | | | * dealloc_shm_sysv

| 0 | 0 | | * clean_shm_

| 348 | 0 | | * MPI_Finalize

user@host: cube_info -w scout.cubex

| Visitors | Diff-Calltree

| 8 | * MAIN__

| 8 | | * mpi_setup_

| 8 | | | * MPI_Init_thread

| 8 | | | * MPI_Comm_size

| 8 | | | * MPI_Comm_rank

| 8 | | | * MPI_Comm_split

| 8 | | * MPI_Bcast

| 8 | | * env_setup_

| 8 | | | * MPI_Bcast

| 8 | | * zone_setup_

| 8 | | * map_zones_

| 8 | | | * get_comm_index_

| 8 | | * zone_starts_

| 8 | | * set_constants_

| 8 | | * initialize_

| 24 | | | * !$omp parallel @initialize.f:28

| 24 | | | | * !$omp do @initialize.f:31

| 24 | | | | * !$omp do @initialize.f:50

| 24 | | | | * !$omp do @initialize.f:100

| 24 | | | | * !$omp do @initialize.f:119

| 24 | | | | * !$omp do @initialize.f:137

| 24 | | | | * !$omp do @initialize.f:156

| 24 | | | | | * !$omp implicit barrier @initialize.f:167

| 24 | | | | * !$omp do @initialize.f:174

| 24 | | | | * !$omp do @initialize.f:192

| 24 | | | | * !$omp implicit barrier @initialize.f:204

| 8 | | * exact_rhs_

| 24 | | | * !$omp parallel @exact_rhs.f:21

| 24 | | | | * !$omp do @exact_rhs.f:31

| 24 | | | | | * !$omp implicit barrier @exact_rhs.f:41

| 24 | | | | * !$omp do @exact_rhs.f:46

| 24 | | | | * !$omp do @exact_rhs.f:147

| 24 | | | | | * !$omp implicit barrier @exact_rhs.f:242

| 24 | | | | * !$omp do @exact_rhs.f:247

| 24 | | | | | * !$omp implicit barrier @exact_rhs.f:341

| 24 | | | | * !$omp do @exact_rhs.f:346

| 24 | | | | * !$omp implicit barrier @exact_rhs.f:357

| 8 | | * exch_qbc_

| 8 | | | * copy_x_face_

| 24 | | | | * !$omp parallel @exch_qbc.f:255

| 24 | | | | | * !$omp do @exch_qbc.f:255

| 24 | | | | | | * !$omp implicit barrier @exch_qbc.f:264

| 24 | | | | * !$omp parallel @exch_qbc.f:244

| 24 | | | | | * !$omp do @exch_qbc.f:244

| 24 | | | | | | * !$omp implicit barrier @exch_qbc.f:253

| 8 | | | * copy_y_face_

| 24 | | | | * !$omp parallel @exch_qbc.f:215

| 24 | | | | | * !$omp do @exch_qbc.f:215

| 24 | | | | | | * !$omp implicit barrier @exch_qbc.f:224

| 24 | | | | * !$omp parallel @exch_qbc.f:204

| 24 | | | | | * !$omp do @exch_qbc.f:204

| 24 | | | | | | * !$omp implicit barrier @exch_qbc.f:213

| 8 | | | * MPI_Isend

| 8 | | | * MPI_Irecv

| 8 | | | * MPI_Waitall

| 8 | | * adi_

| 8 | | | * compute_rhs_

| 24 | | | | * !$omp parallel @rhs.f:28

| 24 | | | | | * !$omp do @rhs.f:37

| 24 | | | | | * !$omp do @rhs.f:62

| 24 | | | | | | * !$omp implicit barrier @rhs.f:72

| 8 | | | | | * !$omp master @rhs.f:74

| 24 | | | | | * !$omp do @rhs.f:80

| 8 | | | | | * !$omp master @rhs.f:183

| 24 | | | | | * !$omp do @rhs.f:191

| 8 | | | | | * !$omp master @rhs.f:293

| 24 | | | | | * !$omp do @rhs.f:301

| 24 | | | | | | * !$omp implicit barrier @rhs.f:353

| 24 | | | | | * !$omp do @rhs.f:359

| 24 | | | | | * !$omp do @rhs.f:372

| 24 | | | | | * !$omp do @rhs.f:384

| 24 | | | | | * !$omp do @rhs.f:400

| 24 | | | | | * !$omp do @rhs.f:413

| 24 | | | | | | * !$omp implicit barrier @rhs.f:423

| 8 | | | | | * !$omp master @rhs.f:424

| 24 | | | | | * !$omp do @rhs.f:428

| 24 | | | | | * !$omp implicit barrier @rhs.f:439

| 8 | | | * x_solve_

| 24 | | | | * !$omp parallel @x_solve.f:46

| 24 | | | | | * !$omp do @x_solve.f:54

| 24 | | | | | * !$omp implicit barrier @x_solve.f:407

| 8 | | | * y_solve_

| 24 | | | | * !$omp parallel @y_solve.f:43

| 24 | | | | | * !$omp do @y_solve.f:52

| 24 | | | | | * !$omp implicit barrier @y_solve.f:406

| 8 | | | * z_solve_

| 24 | | | | * !$omp parallel @z_solve.f:43

| 24 | | | | | * !$omp do @z_solve.f:52

| 24 | | | | | * !$omp implicit barrier @z_solve.f:428

| 8 | | | * add_

| 24 | | | | * !$omp parallel @add.f:22

| 24 | | | | | * !$omp do @add.f:22

| 24 | | | | | | * !$omp implicit barrier @add.f:33

| 8 | | * MPI_Barrier

| 8 | | * verify_

| 8 | | | * error_norm_

| 24 | | | | * !$omp parallel @error.f:27

| 24 | | | | | * !$omp do @error.f:33

| 24 | | | | | * !$omp atomic @error.f:51

| 24 | | | | | * !$omp implicit barrier @error.f:54

| 8 | | | * compute_rhs_

| 24 | | | | * !$omp parallel @rhs.f:28

| 24 | | | | | * !$omp do @rhs.f:37

| 24 | | | | | * !$omp do @rhs.f:62

| 24 | | | | | | * !$omp implicit barrier @rhs.f:72

| 8 | | | | | * !$omp master @rhs.f:74

| 24 | | | | | * !$omp do @rhs.f:80

| 8 | | | | | * !$omp master @rhs.f:183

| 24 | | | | | * !$omp do @rhs.f:191

| 8 | | | | | * !$omp master @rhs.f:293

| 24 | | | | | * !$omp do @rhs.f:301

| 24 | | | | | | * !$omp implicit barrier @rhs.f:353

| 24 | | | | | * !$omp do @rhs.f:359

| 24 | | | | | * !$omp do @rhs.f:372

| 24 | | | | | * !$omp do @rhs.f:384

| 24 | | | | | * !$omp do @rhs.f:400

| 24 | | | | | * !$omp do @rhs.f:413

| 24 | | | | | | * !$omp implicit barrier @rhs.f:423

| 8 | | | | | * !$omp master @rhs.f:424

| 24 | | | | | * !$omp do @rhs.f:428

| 24 | | | | | * !$omp implicit barrier @rhs.f:439

| 8 | | | * rhs_norm_

| 24 | | | | * !$omp parallel @error.f:86

| 24 | | | | | * !$omp do @error.f:91

| 24 | | | | | * !$omp atomic @error.f:104

| 24 | | | | | * !$omp implicit barrier @error.f:107

| 8 | | | * MPI_Reduce

| 8 | | * MPI_Reduce

| 1 | | * print_results_

| 8 | | * MPI_Finalize

user@host: cube_info -m delay_ompidle scout.cubex

| OMP short-ter | Diff-Calltree

| 2.9540 | * MAIN__

| 1.2127 | | * mpi_setup_

| 1.2108 | | | * MPI_Init_thread

| 0.0000 | | | * MPI_Comm_size

| 0.0000 | | | * MPI_Comm_rank

| 0.0015 | | | * MPI_Comm_split

| 0.0004 | | * MPI_Bcast

| 0.0005 | | * env_setup_

| 0.0001 | | | * MPI_Bcast

| 0.0001 | | * zone_setup_

| 0.0012 | | * map_zones_

| 0.0005 | | | * get_comm_index_

| 0.0001 | | * zone_starts_

| 0.0000 | | * set_constants_

| 0.0032 | | * initialize_

| 0.0024 | | | * !$omp parallel @initialize.f:28

| 0.0000 | | | | * !$omp do @initialize.f:31

| 0.0000 | | | | * !$omp do @initialize.f:50

| 0.0000 | | | | * !$omp do @initialize.f:100

| 0.0000 | | | | * !$omp do @initialize.f:119

| 0.0000 | | | | * !$omp do @initialize.f:137

| 0.0000 | | | | * !$omp do @initialize.f:156

| 0.0000 | | | | | * !$omp implicit barrier @initialize.f:167

| 0.0000 | | | | * !$omp do @initialize.f:174

| 0.0000 | | | | * !$omp do @initialize.f:192

| 0.0000 | | | | * !$omp implicit barrier @initialize.f:204

| 0.0013 | | * exact_rhs_

| 0.0012 | | | * !$omp parallel @exact_rhs.f:21

| 0.0000 | | | | * !$omp do @exact_rhs.f:31

| 0.0000 | | | | | * !$omp implicit barrier @exact_rhs.f:41

| 0.0000 | | | | * !$omp do @exact_rhs.f:46

| 0.0000 | | | | * !$omp do @exact_rhs.f:147

| 0.0000 | | | | | * !$omp implicit barrier @exact_rhs.f:242

| 0.0000 | | | | * !$omp do @exact_rhs.f:247

| 0.0000 | | | | | * !$omp implicit barrier @exact_rhs.f:341

| 0.0000 | | | | * !$omp do @exact_rhs.f:346

| 0.0000 | | | | * !$omp implicit barrier @exact_rhs.f:357

| 1.3201 | | * exch_qbc_

| 0.2226 | | | * copy_x_face_

| 0.0738 | | | | * !$omp parallel @exch_qbc.f:255

| 0.0141 | | | | | * !$omp do @exch_qbc.f:255

| 0.0000 | | | | | | * !$omp implicit barrier @exch_qbc.f:264

| 0.0730 | | | | * !$omp parallel @exch_qbc.f:244

| 0.0140 | | | | | * !$omp do @exch_qbc.f:244

| 0.0000 | | | | | | * !$omp implicit barrier @exch_qbc.f:253

| 0.2176 | | | * copy_y_face_

| 0.0722 | | | | * !$omp parallel @exch_qbc.f:215

| 0.0131 | | | | | * !$omp do @exch_qbc.f:215

| 0.0000 | | | | | | * !$omp implicit barrier @exch_qbc.f:224

| 0.0711 | | | | * !$omp parallel @exch_qbc.f:204

| 0.0130 | | | | | * !$omp do @exch_qbc.f:204

| 0.0000 | | | | | | * !$omp implicit barrier @exch_qbc.f:213

| 0.0861 | | | * MPI_Isend

| 0.0652 | | | * MPI_Irecv

| 0.6392 | | | * MPI_Waitall

| 0.3930 | | * adi_

| 0.0670 | | | * compute_rhs_

| 0.0387 | | | | * !$omp parallel @rhs.f:28

| 0.0000 | | | | | * !$omp do @rhs.f:37

| 0.0000 | | | | | * !$omp do @rhs.f:62

| 0.0000 | | | | | | * !$omp implicit barrier @rhs.f:72

| 0.0000 | | | | | * !$omp master @rhs.f:74

| 0.0000 | | | | | * !$omp do @rhs.f:80

| 0.0000 | | | | | * !$omp master @rhs.f:183

| 0.0000 | | | | | * !$omp do @rhs.f:191

| 0.0000 | | | | | * !$omp master @rhs.f:293

| 0.0000 | | | | | * !$omp do @rhs.f:301

| 0.0000 | | | | | | * !$omp implicit barrier @rhs.f:353

| 0.0000 | | | | | * !$omp do @rhs.f:359

| 0.0000 | | | | | * !$omp do @rhs.f:372

| 0.0000 | | | | | * !$omp do @rhs.f:384

| 0.0000 | | | | | * !$omp do @rhs.f:400

| 0.0000 | | | | | * !$omp do @rhs.f:413

| 0.0000 | | | | | | * !$omp implicit barrier @rhs.f:423

| 0.0000 | | | | | * !$omp master @rhs.f:424

| 0.0000 | | | | | * !$omp do @rhs.f:428

| 0.0000 | | | | | * !$omp implicit barrier @rhs.f:439

| 0.0633 | | | * x_solve_

| 0.0368 | | | | * !$omp parallel @x_solve.f:46

| 0.0000 | | | | | * !$omp do @x_solve.f:54

| 0.0000 | | | | | * !$omp implicit barrier @x_solve.f:407

| 0.0649 | | | * y_solve_

| 0.0376 | | | | * !$omp parallel @y_solve.f:43

| 0.0000 | | | | | * !$omp do @y_solve.f:52

| 0.0000 | | | | | * !$omp implicit barrier @y_solve.f:406

| 0.0677 | | | * z_solve_

| 0.0392 | | | | * !$omp parallel @z_solve.f:43

| 0.0000 | | | | | * !$omp do @z_solve.f:52

| 0.0000 | | | | | * !$omp implicit barrier @z_solve.f:428

| 0.0636 | | | * add_

| 0.0413 | | | | * !$omp parallel @add.f:22

| 0.0072 | | | | | * !$omp do @add.f:22

| 0.0000 | | | | | | * !$omp implicit barrier @add.f:33

| 0.0005 | | * MPI_Barrier

| 0.0026 | | * verify_

| 0.0003 | | | * error_norm_

| 0.0002 | | | | * !$omp parallel @error.f:27

| 0.0000 | | | | | * !$omp do @error.f:33

| 0.0000 | | | | | * !$omp atomic @error.f:51

| 0.0000 | | | | | * !$omp implicit barrier @error.f:54

| 0.0003 | | | * compute_rhs_

| 0.0002 | | | | * !$omp parallel @rhs.f:28

| 0.0000 | | | | | * !$omp do @rhs.f:37

| 0.0000 | | | | | * !$omp do @rhs.f:62

| 0.0000 | | | | | | * !$omp implicit barrier @rhs.f:72

| 0.0000 | | | | | * !$omp master @rhs.f:74

| 0.0000 | | | | | * !$omp do @rhs.f:80

| 0.0000 | | | | | * !$omp master @rhs.f:183

| 0.0000 | | | | | * !$omp do @rhs.f:191

| 0.0000 | | | | | * !$omp master @rhs.f:293

| 0.0000 | | | | | * !$omp do @rhs.f:301

| 0.0000 | | | | | | * !$omp implicit barrier @rhs.f:353

| 0.0000 | | | | | * !$omp do @rhs.f:359

| 0.0000 | | | | | * !$omp do @rhs.f:372

| 0.0000 | | | | | * !$omp do @rhs.f:384

| 0.0000 | | | | | * !$omp do @rhs.f:400

| 0.0000 | | | | | * !$omp do @rhs.f:413

| 0.0000 | | | | | | * !$omp implicit barrier @rhs.f:423

| 0.0000 | | | | | * !$omp master @rhs.f:424

| 0.0000 | | | | | * !$omp do @rhs.f:428

| 0.0000 | | | | | * !$omp implicit barrier @rhs.f:439

| 0.0003 | | | * rhs_norm_

| 0.0002 | | | | * !$omp parallel @error.f:86

| 0.0000 | | | | | * !$omp do @error.f:91

| 0.0000 | | | | | * !$omp atomic @error.f:104

| 0.0000 | | | | | * !$omp implicit barrier @error.f:107

| 0.0009 | | | * MPI_Reduce

| 0.0001 | | * MPI_Reduce

| 0.0001 | | * print_results_

| 0.0005 | | * MPI_Finalize

user@host: cube_info -l scout.cubex
time
visits
mpi_init_completion
mpi_finalize_wait
mpi_barrier_wait
mpi_barrier_completion
mpi_latesender
mpi_latesender_wo
mpi_lswo_different
mpi_lswo_same
mpi_latereceiver
mpi_earlyreduce
mpi_earlyscan
mpi_latebroadcast
mpi_wait_nxn
mpi_nxn_completion
omp_management
omp_fork
omp_ebarrier_wait
omp_ibarrier_wait
omp_lock_contention_critical
omp_lock_contention_api
pthread_lock_contention_mutex_lock
pthread_lock_contention_conditional
syncs_send
mpi_slr_count
syncs_recv
mpi_sls_count
mpi_slswo_count
syncs_coll
comms_send
mpi_clr_count
comms_recv
mpi_cls_count
mpi_clswo_count
comms_cxch
comms_csrc
comms_cdst
bytes_sent
bytes_rcvd
bytes_cout
bytes_cin
bytes_put
bytes_get
mpi_rma_wait_at_create
mpi_rma_wait_at_free
mpi_rma_sync_late_post
mpi_rma_early_wait
mpi_rma_late_complete
mpi_rma_wait_at_fence
mpi_rma_early_fence
mpi_rma_sync_lock_competition
mpi_rma_sync_wait_for_progress
mpi_rma_comm_late_post
mpi_rma_comm_lock_competition
mpi_rma_comm_wait_for_progress
mpi_rma_pairsync_count
mpi_rma_pairsync_unneeded_count
critical_path
critical_path_activities
critical_imbalance_impact
inter_partition_imbalance
non_critical_path_activities
delay_latesender
delay_latesender_longterm
delay_latereceiver
delay_latereceiver_longterm
delay_barrier
delay_barrier_longterm
delay_n2n
delay_n2n_longterm
delay_12n
delay_12n_longterm
delay_ompbarrier
delay_ompbarrier_longterm
delay_ompidle
delay_ompidle_longterm
mpi_wait_indirect_latesender
mpi_wait_indirect_latereceiver
mpi_wait_propagating_ls
mpi_wait_propagating_lr

user@host: cube_info -b scout.cubex
Number of nodes    : 1
Processes per node : 0
Number of processes: 8
Wallclock time     : 37.8293

met must have the following form:

unique_metric_name:[incl|excl]:process_number.thread_number

Everything except the metric's unique name may be omitted. For example, "time" computes the inclusive time aggregated over all threads, while "time:excl:2" computes the exclusive time aggregated over all threads that belong to the process with the id 2.
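The structure of such a metric specification can be illustrated with a small Python sketch. It is not part of CubeLib; the names `MetricSpec` and `parse_metric_spec` are hypothetical, and treating an empty middle field (as in "time::2") as "incl" is an assumption of this sketch, not documented behavior of cube_info.

```python
from typing import NamedTuple, Optional


class MetricSpec(NamedTuple):
    name: str                # unique metric name, e.g. "time"
    inclusive: bool          # True for "incl" (the default), False for "excl"
    process: Optional[int]   # None: aggregate over all processes
    thread: Optional[int]    # None: aggregate over all threads


def parse_metric_spec(spec: str) -> MetricSpec:
    """Parse 'unique_metric_name:[incl|excl]:process_number.thread_number'."""
    parts = spec.split(":")
    if not parts[0] or len(parts) > 3:
        raise ValueError(f"malformed metric specification: {spec!r}")
    name = parts[0]

    # Assumption: a missing or empty mode field means "incl".
    mode = parts[1] if len(parts) > 1 and parts[1] else "incl"
    if mode not in ("incl", "excl"):
        raise ValueError(f"expected 'incl' or 'excl', got {mode!r}")

    process = thread = None
    if len(parts) == 3 and parts[2]:
        # "2" selects a process; "2.1" selects thread 1 of process 2.
        proc_str, _, thread_str = parts[2].partition(".")
        process = int(proc_str)
        thread = int(thread_str) if thread_str else None

    return MetricSpec(name, mode == "incl", process, thread)


if __name__ == "__main__":
    for example in ("time", "time:excl:2", "time:incl:2.1"):
        print(example, "->", parse_metric_spec(example))
```

The two examples from the text map to an inclusive, fully aggregated "time" and to an exclusive "time" restricted to process 2 with all of its threads aggregated.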

user@host: cube_info -m time:excl:2 scout.cubex
| Time (E,2) | Diff-Calltree
|     0.0009 | * MAIN__
|     0.0000 | | * mpi_setup_
|     0.0756 | | | * MPI_Init_thread
|     0.0000 | | | * MPI_Comm_size
|     0.0000 | | | * MPI_Comm_rank
|     0.0002 | | | * MPI_Comm_split
|     0.0003 | | * MPI_Bcast
|     0.0000 | | * env_setup_
|     0.0001 | | | * MPI_Bcast
|     0.0000 | | * zone_setup_
|     0.0000 | | * map_zones_
|     0.0000 | | | * get_comm_index_
|     0.0000 | | * zone_starts_
|     0.0000 | | * set_constants_
|     0.0001 | | * initialize_
|     0.0396 | | | * !$omp parallel @initialize.f:28
|     0.0026 | | | | * !$omp do @initialize.f:31
|     0.0333 | | | | * !$omp do @initialize.f:50
|     0.0002 | | | | * !$omp do @initialize.f:100
|     0.0002 | | | | * !$omp do @initialize.f:119
|     0.0002 | | | | * !$omp do @initialize.f:137
|     0.0002 | | | | * !$omp do @initialize.f:156
|     0.0380 | | | | | * !$omp implicit barrier @initialize.f:167
|     0.0004 | | | | * !$omp do @initialize.f:174
|     0.0003 | | | | * !$omp do @initialize.f:192
|     0.0011 | | | | * !$omp implicit barrier @initialize.f:204
|     0.0000 | | * exact_rhs_
|     0.0031 | | | * !$omp parallel @exact_rhs.f:21
|     0.0017 | | | | * !$omp do @exact_rhs.f:31
|     0.0108 | | | | | * !$omp implicit barrier @exact_rhs.f:41
|     0.0046 | | | | * !$omp do @exact_rhs.f:46
|     0.0050 | | | | * !$omp do @exact_rhs.f:147
|     0.0152 | | | | | * !$omp implicit barrier @exact_rhs.f:242
|     0.0053 | | | | * !$omp do @exact_rhs.f:247
|     0.0060 | | | | | * !$omp implicit barrier @exact_rhs.f:341
|     0.0004 | | | | * !$omp do @exact_rhs.f:346
|     0.0006 | | | | * !$omp implicit barrier @exact_rhs.f:357
|     0.0059 | | * exch_qbc_
|     0.0047 | | | * copy_x_face_
|     0.2299 | | | | * !$omp parallel @exch_qbc.f:255
|     0.0243 | | | | | * !$omp do @exch_qbc.f:255
|     0.1253 | | | | | | * !$omp implicit barrier @exch_qbc.f:264
|     0.2267 | | | | * !$omp parallel @exch_qbc.f:244
|     0.0214 | | | | | * !$omp do @exch_qbc.f:244
|     0.1223 | | | | | | * !$omp implicit barrier @exch_qbc.f:253
|     0.0046 | | | * copy_y_face_
|     0.2338 | | | | * !$omp parallel @exch_qbc.f:215
|     0.0282 | | | | | * !$omp do @exch_qbc.f:215
|     0.1278 | | | | | | * !$omp implicit barrier @exch_qbc.f:224
|     0.2402 | | | | * !$omp parallel @exch_qbc.f:204
|     0.0358 | | | | | * !$omp do @exch_qbc.f:204
|     0.1368 | | | | | | * !$omp implicit barrier @exch_qbc.f:213
|     0.0061 | | | * MPI_Isend
|     0.0038 | | | * MPI_Irecv
|     0.1087 | | | * MPI_Waitall
|     0.0041 | | * adi_
|     0.0017 | | | * compute_rhs_
|     0.7270 | | | | * !$omp parallel @rhs.f:28
|     0.3947 | | | | | * !$omp do @rhs.f:37
|     0.2095 | | | | | * !$omp do @rhs.f:62
|     2.0865 | | | | | | * !$omp implicit barrier @rhs.f:72
|     0.0009 | | | | | * !$omp master @rhs.f:74
|     0.4947 | | | | | * !$omp do @rhs.f:80
|     0.0008 | | | | | * !$omp master @rhs.f:183
|     0.5565 | | | | | * !$omp do @rhs.f:191
|     0.0008 | | | | | * !$omp master @rhs.f:293
|     0.3326 | | | | | * !$omp do @rhs.f:301
|     1.7051 | | | | | | * !$omp implicit barrier @rhs.f:353
|     0.0158 | | | | | * !$omp do @rhs.f:359
|     0.0165 | | | | | * !$omp do @rhs.f:372
|     0.1812 | | | | | * !$omp do @rhs.f:384
|     0.0170 | | | | | * !$omp do @rhs.f:400
|     0.0153 | | | | | * !$omp do @rhs.f:413
|     0.3934 | | | | | | * !$omp implicit barrier @rhs.f:423
|     0.0007 | | | | | * !$omp master @rhs.f:424
|     0.0595 | | | | | * !$omp do @rhs.f:428
|     0.1270 | | | | | * !$omp implicit barrier @rhs.f:439
|     0.0016 | | | * x_solve_
|     6.1878 | | | | * !$omp parallel @x_solve.f:46
|     6.0586 | | | | | * !$omp do @x_solve.f:54
|     6.1324 | | | | | * !$omp implicit barrier @x_solve.f:407
|     0.0017 | | | * y_solve_
|     6.3199 | | | | * !$omp parallel @y_solve.f:43
|     6.2025 | | | | | * !$omp do @y_solve.f:52
|     6.2892 | | | | | * !$omp implicit barrier @y_solve.f:406
|     0.0018 | | | * z_solve_
|     6.8911 | | | | * !$omp parallel @z_solve.f:43
|     6.9750 | | | | | * !$omp do @z_solve.f:52
|     7.2641 | | | | | * !$omp implicit barrier @z_solve.f:428
|     0.0014 | | | * add_
|     0.2327 | | | | * !$omp parallel @add.f:22
|     0.1149 | | | | | * !$omp do @add.f:22
|     0.1817 | | | | | | * !$omp implicit barrier @add.f:33
|     0.0055 | | * MPI_Barrier
|     0.0001 | | * verify_
|     0.0000 | | | * error_norm_
|     0.0038 | | | | * !$omp parallel @error.f:27
|     0.0033 | | | | | * !$omp do @error.f:33
|     0.0000 | | | | | * !$omp atomic @error.f:51
|     0.0038 | | | | | * !$omp implicit barrier @error.f:54
|     0.0000 | | | * compute_rhs_
|     0.0036 | | | | * !$omp parallel @rhs.f:28
|     0.0019 | | | | | * !$omp do @rhs.f:37
|     0.0011 | | | | | * !$omp do @rhs.f:62
|     0.0105 | | | | | | * !$omp implicit barrier @rhs.f:72
|     0.0000 | | | | | * !$omp master @rhs.f:74
|     0.0025 | | | | | * !$omp do @rhs.f:80
|     0.0000 | | | | | * !$omp master @rhs.f:183
|     0.0028 | | | | | * !$omp do @rhs.f:191
|     0.0000 | | | | | * !$omp master @rhs.f:293
|     0.0017 | | | | | * !$omp do @rhs.f:301
|     0.0087 | | | | | | * !$omp implicit barrier @rhs.f:353
|     0.0001 | | | | | * !$omp do @rhs.f:359
|     0.0001 | | | | | * !$omp do @rhs.f:372
|     0.0009 | | | | | * !$omp do @rhs.f:384
|     0.0001 | | | | | * !$omp do @rhs.f:400
|     0.0001 | | | | | * !$omp do @rhs.f:413
|     0.0020 | | | | | | * !$omp implicit barrier @rhs.f:423
|     0.0000 | | | | | * !$omp master @rhs.f:424
|     0.0003 | | | | | * !$omp do @rhs.f:428
|     0.0006 | | | | | * !$omp implicit barrier @rhs.f:439
|     0.0000 | | | * rhs_norm_
|     0.0011 | | | | * !$omp parallel @error.f:86
|     0.0004 | | | | | * !$omp do @error.f:91
|     0.0000 | | | | | * !$omp atomic @error.f:104
|     0.0007 | | | | | * !$omp implicit barrier @error.f:107
|     0.0001 | | | * MPI_Reduce
|     0.0000 | | * MPI_Reduce
|     0.0000 | | * print_results_
|     0.0000 | | * MPI_Finalize
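Output of this kind can be post-processed with a short script, for instance to find the call path with the largest value. The following Python sketch is an illustration only: it assumes that cube_info prints one "| value | call tree" row per line, and the names `CallRow` and `parse_calltree` are hypothetical. The sample rows are copied from the listing above.

```python
from typing import List, NamedTuple


class CallRow(NamedTuple):
    value: float   # metric value from the first column
    depth: int     # nesting depth in the call tree (0 = root)
    name: str      # region name, e.g. "MPI_Waitall"


def parse_calltree(text: str) -> List[CallRow]:
    """Parse rows such as '| 0.0756 | | | * MPI_Init_thread'."""
    rows = []
    for line in text.splitlines():
        # Split into: text before the first bar, value column, tree column.
        fields = line.strip().split("|", 2)
        if len(fields) != 3 or "*" not in fields[2]:
            continue  # header row or unrelated line
        try:
            value = float(fields[1])
        except ValueError:
            continue  # value column is not numeric
        # Bars in front of the "*" marker encode the nesting depth.
        indent, _, name = fields[2].partition("*")
        rows.append(CallRow(value, indent.count("|"), name.strip()))
    return rows


sample = """\
| Time (E,2) | Diff-Calltree
|     0.0009 | * MAIN__
|     0.0000 | | * mpi_setup_
|     0.0756 | | | * MPI_Init_thread
|     0.0041 | | * adi_
|     0.0016 | | | * x_solve_
|     6.1878 | | | | * !$omp parallel @x_solve.f:46
"""

rows = parse_calltree(sample)
hottest = max(rows, key=lambda row: row.value)
print(f"{hottest.value:.4f} at depth {hottest.depth}: {hottest.name}")
```

Because the values in this example are exclusive, the maximum points directly at the region where the time is spent rather than at one of its callers.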

With the tool cube_test one can perform various tests on a .cubex file, such as range tests on metric values. It is typically used in the correctness tests of Score-P and SCALASCA.

, where n is the number of CUBE files, i is the index of the i-th file, and V is the value of the metric. If "tree" is omitted, all nodes are checked.


and remove all nodes from M. If tree is omitted, nodes are removed from the default tree ALL. WARNING: The order of the options matters.

With the tool cube_canonize, the user can convert all regions into a canonical form (names in lower case, line numbers and file names removed) so that measurement results can be compared.

Here is an example of execution:

cube_canonize -p -m 10 -c -f -l profile.cubex canonized.cubex

cube_dump -w profile.cubex

....

-------------------------- LIST OF REGIONS --------------------------

MEASUREMENT OFF:MEASUREMENT OFF ( id=0, -1, -1, paradigm=user, role=artificial, url=, descr=, mod=)

TRACE BUFFER FLUSH:TRACE BUFFER FLUSH ( id=1, -1, -1, paradigm=measurement, role=artificial, url=, descr=, mod=)

THREADS:THREADS ( id=2, -1, -1, paradigm=measurement, role=artificial, url=, descr=, mod=THREADS)

MPI_Accumulate:MPI_Accumulate ( id=3, -1, -1, paradigm=mpi, role=atomic, url=, descr=, mod=MPI)

...