Configuration for Jülich Storage Cluster (JUST)

Copyright - FZ Jülich

The configuration of the Jülich Storage Cluster (JUST) is continuously evolving and expanding to integrate newly available storage technology, in order to meet the ever-growing capacity and I/O bandwidth demands of the data-intensive simulations and learning applications on the supercomputers. Currently, the 6th generation of JUST consists of 11 IBM SSS6000 systems and 2 IBM ESS3500 systems and represents the Large Capacity Storage Tier (LCST).

The software layer of the storage cluster is based on Storage Scale (GPFS) from IBM. JUST provides in total a gross capacity of approximately 200 PB.

The lowest storage layer and the backup of user data are stored on tape.

For details see

JUST numbers

| | JUST-HOME | JUST-DATA | JUST-ARCHIVE | XCST (deprecated) | JUST-TSM | JUPHORIA | JUST Total |
|---|---|---|---|---|---|---|---|
| Capacity | 14 PB gross, ca. 10 PB net | 126 PB gross, ca. 90 PB net | 14 PB gross, ca. 10 PB net | 45 PB gross, ca. 32 PB net | 9.5 PB gross, ca. 6.8 PB net | 6.0 PB HDD + 1.7 PB NVMe gross, ca. 2.0 PB HDD + 0.6 PB NVMe net | 216.2 PB gross, 151.4 PB net |
| Storage Systems | 1 SSS6000 + 1 ESS3500 | 9 SSS6000 | 1 SSS6000 | 3 Lenovo DE6000 based | 2 DSS-G 240 + 1 ESS 3500 | IBM Storage Ready Ceph | |
| Racks | 1+ | 9+ | 1+ | 6 | 3 | 3+ | 23 |
| Server | 2x2 Storage Server, 3 GPFS Manager, 3 Backup Server, 1 Monitoring Server, 2 NFS Server | 9x2 Storage Server, 3 GPFS Manager, 1 Backup Server, 1 Monitoring Server | 1x2 Storage Server, 1 GPFS Manager, 1 Backup Server, 1 Monitoring Server, 1 NFS Server | 3x2 Storage Server, 1 GPFS Manager, 1 Backup Server, 2 NFS Server | 3x2 Storage Server, 7 Backup Server, 1 Monitoring Server | 9 IBM Storage Ready with NVMe, 15 IBM Storage Ready with HDDs, 4 Service Nodes with HDDs | 96 |
| Disks | 637 HDD + 24 NVMe | 5733 HDD | 637 HDD | 3120 HDD | 668 HDD + 24 NVMe | 360 HDD + 292 NVMe | 14776 + 340 NVMe |
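The capacity totals quoted in the table can be cross-checked with a few lines of Python. This is only a sketch: the dictionary name and layout are illustrative, the figures are copied from the Capacity row, and JUPHORIA's HDD and NVMe shares are simply added together.

```python
# Per-tier (gross, net) capacities in PB, copied from the Capacity row.
# The dictionary layout is an illustrative choice, not part of the source.
capacity_pb = {
    "JUST-HOME": (14.0, 10.0),
    "JUST-DATA": (126.0, 90.0),
    "JUST-ARCHIVE": (14.0, 10.0),
    "XCST": (45.0, 32.0),
    "JUST-TSM": (9.5, 6.8),
    "JUPHORIA": (6.0 + 1.7, 2.0 + 0.6),  # HDD + NVMe
}

# Round to one decimal place, the precision used in the table.
gross_total = round(sum(gross for gross, _ in capacity_pb.values()), 1)
net_total = round(sum(net for _, net in capacity_pb.values()), 1)

# Fraction of the gross capacity that remains usable after redundancy.
net_share = round(net_total / gross_total, 2)

print(f"gross: {gross_total} PB, net: {net_total} PB, net share: {net_share}")
```

Under these inputs the sums reproduce the 216.2 PB gross and 151.4 PB net shown in the JUST Total column, which corresponds to roughly 70% usable capacity across all tiers.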

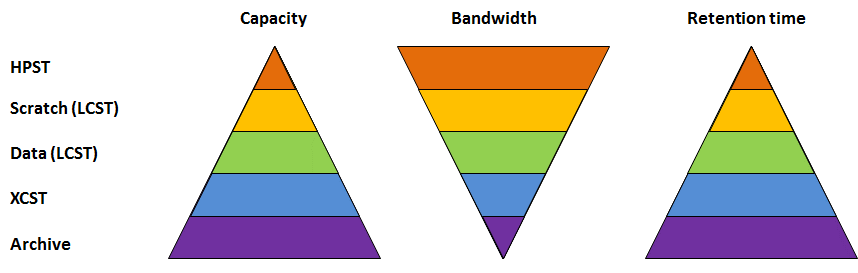

JUST Tiered Storage

Copyright - FZ Jülich

JUST provides different types of storage repositories to fit the various use cases for user data and their workflows.

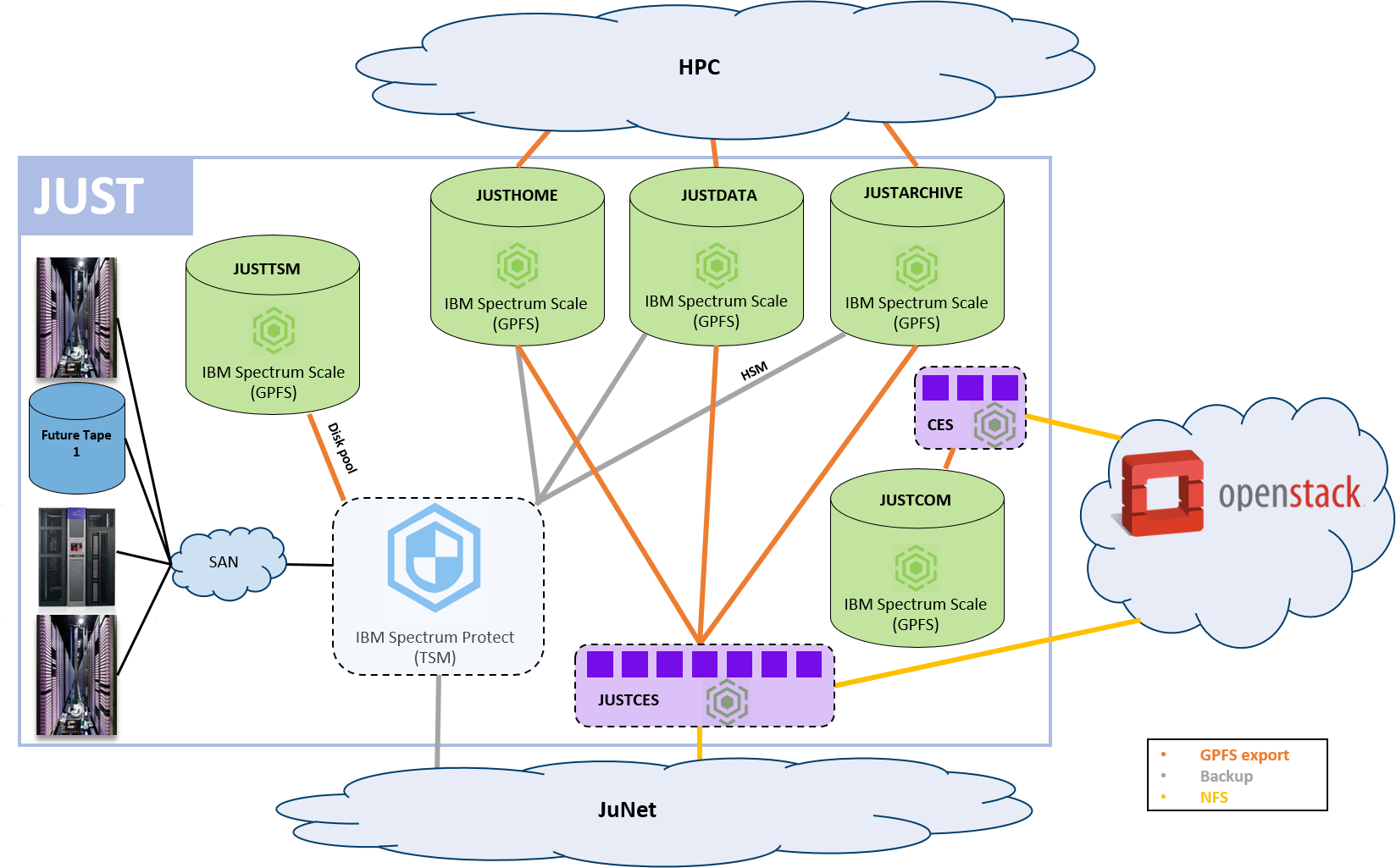

JUST Architecture

Copyright - FZ Jülich

JUST Hardware Characteristics

JUST6 Hardware (LCST)

- IBM SSS6000 (Capacity Model)

- Active-active dual controller storage system

each Dual socket AMD EPYC™ Genoa CPUs, 48 cores, 2.75 GHz, 1536 GB Memory

each 4 x DUAL-Port SAS 24Gb HBA

each 2 x NVIDIA ConnectX-7 NDR200 dual Port (4x 100GE)

software: RedHat Enterprise Linux, IBM Storage Scale System (Storage Scale + GPFS Native RAID)

- 7 x IBM Storage Enclosure Type 5149 (JBODs)

each one drawer with 41 slots

each 91 x 22 TB NL-SAS Disks

- IBM ESS3500 (NVMe)

- Active-active dual controller storage system

each Dual socket AMD EPYC 7642 CPUs, 48 cores, 2.3 GHz, 512 GB Memory

each 4 x NVIDIA ConnectX-7 NDR200 dual Port (4x 100GE used)

software: RedHat Enterprise Linux, IBM Storage Scale System (Storage Scale + GPFS Native RAID)

24 x 15.36 TB PCIe Gen4 NVMe

- IBM Monitoring/Deployment Server (ESS Management Server)

1 x AMD Epyc 7313 16 Cores; 3.0GHz, 512 GB Memory

1 x NVIDIA ConnectX-6 NDR200 dual Port (2x 100GE)

software: RedHat Enterprise Linux, IBM EMS software stack, IBM Storage Scale

- Megware GPFS Manager Server

1 x AMD Epyc 9334 CPU, 32 Cores, 2.7GHz, 384 GB Memory

1 x NVIDIA ConnectX-7 NDR200 dual Port (2x 100GE)

software: RedHat Enterprise Linux, IBM Storage Scale

- Megware Backup Server

1x AMD Epyc 9454P CPU, 48 Cores, 2.75GHz, 576 GB Memory

1 x NVIDIA ConnectX-7 NDR200 dual Port (2x 100GE)

2 x dual Port FC HBA 32Gb/s

software: RedHat Enterprise Linux, IBM Storage Scale, IBM Storage Protect

- Megware GPFS Manager Server

1 x AMD Epyc 9334 CPU, 32 Cores, 2.7GHz, 384 GB Memory

1 x NVIDIA ConnectX-7 NDR200 dual Port (2x 100GE)

software: RedHat Enterprise Linux, IBM Storage Scale, Cluster Export Service (Ganesha NFS)

- JUST/ExaSTORE Cluster Management

- 3 Master Nodes

each 1 x AMD EPYC™ 9534 CPU, 64 cores, 2.45 GHz, 384 GB Memory

each 1 x NVIDIA ConnectX-7 NDR200 dual Port (2x 100GE)

each 3 x 7.68TB NVMe for Ceph storage

software: RedHat Enterprise Linux, IBM Ceph

Extended Capacity Storage Tier (XCST) Hardware

- 1 x GPFS building block (Phase 3 - since Q3 2019)

- each 2 x Intel Xeon Gold 6142, 16 cores, 2.6 GHz, 384 GB Memory

each 4 x Quad-Port SAS 12Gb HBA

each 2 x Mellanox Dual-Port SFP + 100 Gigabit Ethernet Adapter

software: RedHat Enterprise Linux, Spectrum Scale (GPFS)

- each 4 x DE6000 storage system

each 4 x DE600 Enclosures

each 260 x 12 TB NL-SAS Disks

each 12 PB gross

User Data and Metadata ($LARGEDATA)

- 1 x GPFS building block (Phase 4 - since Q3 2020)

- each 2 x Intel Xeon Gold 6142, 16 cores, 2.6 GHz, 384 GB Memory

each 4 x Quad-Port SAS 12Gb HBA

each 2 x Mellanox Dual-Port SFP + 100 Gigabit Ethernet Adapter

software: RedHat Enterprise Linux, Spectrum Scale (GPFS)

- each 4 x DE6000 storage system

each 4 x DE600 Enclosures

each 260 x 14 TB NL-SAS Disks

each 14 PB gross

User Data and Metadata ($LARGEDATA)

- 1 x GPFS building block (Phase 5 - since Q4 2021)

- each 2 x Intel Xeon Gold 6226R, 16 cores, 2.9 GHz, 384 GB Memory

each 4 x Quad-Port SAS 12Gb HBA

each 2 x Mellanox Dual-Port SFP + 100 Gigabit Ethernet Adapter

software: RedHat Enterprise Linux, Spectrum Scale (GPFS)

- each 4 x DE6000 storage system

each 4 x DE600 Enclosures

each 260 x 14 TB NL-SAS Disks

each 14 PB gross

User Data and Metadata ($LARGEDATA)

- 1 x IBM Power S822 for GPFS Cluster Export Service (CES)

2 x Power8 Processor, 12 cores, 3.026 GHz, 512 GB Memory

3 x Dual-Port 100Gigabit Ethernet

3 x LPAR to run virtual node

software: RedHat Enterprise Linux, Spectrum Scale (GPFS), Cluster Export Service - Object

JUPHORIA Hardware

- 15 x IBM Storage Ready Nodes with HDDs

IBM Storage Ready node based on DELL R760xd2

each 2 x Intel Xeon Silver 4416+ 20C 165W 2.0GHz Processor

each 16 x 16 GB RDIMM (256 GB)

each 2x M.2 480GB (for the OS)

each 24 x 16TB SATA HDD

each 4 x 3.84 TB NVMe

each 1 x Intel Dual Port 100 Gigabit Ethernet Adapter

accumulated bandwidth: 40.2 GB/s read, 28.6 GB/s write

- 9 x IBM Storage Ready Nodes with NVMe

IBM Storage Ready node based on DELL R760XL

each 2 x Intel Xeon Gold 6438N 32C 205W 2.0GHz Processor

each 16 x 32 GB RDIMM (512 GB)

each 2x M.2 480GB (for the OS)

each 24 x 7.68 TB NVMe

each 2 x Mellanox Dual-Port ConnectX-6 100 Gigabit Ethernet Adapter

accumulated bandwidth: 223 GB/s read, 75 GB/s write

- 4 x IBM Storage Ready Nodes with HDDs

IBM Storage Ready node based on DELL R760xd2

each 2 x Intel Xeon Silver 4416+ 20C 165W 2.0GHz Processor

each 16 x 16 GB RDIMM (256 GB)

each 2x M.2 480GB (for the OS)

each 8 x 8TB SATA HDD

each 4 x 3.84 TB NVMe

each 1 x Mellanox Dual-Port ConnectX-6 100 Gigabit Ethernet Adapter

software: RedHat Enterprise Linux, IBM Ceph

6.0 PB HDD + 1.7 PB NVMe gross, 2.0 PB HDD + 0.6 PB NVMe net (3x replication)

Block devices for JSC Cloud VMs
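The JUPHORIA gross and net figures can be approximated from the node inventory above. The sketch below rests on two assumptions that the source does not state: the HDD figure counts both groups of HDD nodes, and the NVMe figure counts only the nine dedicated NVMe nodes (the 3.84 TB NVMe drives in the HDD nodes are left out). Net capacity is taken as gross divided by the replication factor, ignoring any Ceph overhead or reserve.

```python
TB_PER_PB = 1000  # decimal units, as used for drive capacities

# (node count, drives per node, TB per drive), taken from the list above.
hdd_groups = [(15, 24, 16), (4, 8, 8)]
nvme_nodes = (9, 24, 7.68)  # assumption: only the dedicated NVMe nodes count

hdd_gross_pb = sum(n * d * tb for n, d, tb in hdd_groups) / TB_PER_PB
n, d, tb = nvme_nodes
nvme_gross_pb = n * d * tb / TB_PER_PB

REPLICAS = 3  # "3x replication": every object is stored three times

hdd_net_pb = hdd_gross_pb / REPLICAS
nvme_net_pb = nvme_gross_pb / REPLICAS

print(f"HDD:  {hdd_gross_pb:.1f} PB gross, {hdd_net_pb:.1f} PB net")
print(f"NVMe: {nvme_gross_pb:.1f} PB gross, {nvme_net_pb:.1f} PB net")
```

With these assumptions the result rounds to the quoted 6.0 PB HDD + 1.7 PB NVMe gross and 2.0 PB HDD + 0.6 PB NVMe net.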

JUST-TSM Storage Hardware

- 2 x DSS-G 240 (16 TB)

- each 2 x Lenovo ThinkSystem SR650 Model 7X06

each 2 x Intel Xeon Gold 6240, 18 core, 2.6GHz, 384 GB Memory

each 4 x Quad-Port SAS 12Gb HBA

each 2 x Mellanox Dual-Port ConnectX-6 100 Gigabit Ethernet Adapter

software: RedHat Enterprise Linux, DSS-G (Spectrum Scale + GPFS Native RAID)

- each 4 x DSS-Storage (JBODs)

each 2 x drawers with 42 slots

each 84 x 16 TB NL-SAS Disks

1 DSS-Storage with 2 x 800 GB SSD (GPFS-GNR Configuration and Logging)

each 334 NL-SAS Disks and 2 SSDs

each 5.3 PB gross, 3.9 PB net (8+3P)

ISP storage disk pools and ISP logs
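The per-system figures above follow from the disk count and the 8+3P erasure code. The sketch below assumes that the two SSDs occupy two of the 4 x 84 slots (which matches the stated 334 NL-SAS disks) and that net capacity is simply the data fraction of each stripe. Real GPFS Native RAID setups also reserve spare space and metadata, so treat the net value as an estimate rather than the exact usable size.

```python
JBODS = 4
SLOTS_PER_JBOD = 84      # 2 drawers x 42 slots
SSD_SLOTS = 2            # assumption: the two SSDs take two of the disk slots
DISK_TB = 16

nl_sas_disks = JBODS * SLOTS_PER_JBOD - SSD_SLOTS
gross_pb = nl_sas_disks * DISK_TB / 1000

# 8+3P: each stripe holds 8 data strips and 3 parity strips.
DATA_STRIPS, PARITY_STRIPS = 8, 3
efficiency = DATA_STRIPS / (DATA_STRIPS + PARITY_STRIPS)
net_pb = gross_pb * efficiency

print(f"{nl_sas_disks} disks, {gross_pb:.1f} PB gross, "
      f"{net_pb:.1f} PB net at {efficiency:.0%} efficiency")
```

An 8+3P code keeps about 73% of the raw capacity for data while tolerating the loss of three strips per stripe, which is where the gap between 5.3 PB gross and 3.9 PB net comes from.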

JUST Tape Libraries (Automated Cartridge Systems)

Tape libraries are the most cost-efficient storage technology in terms of TCO and capacity, with the drawback of very high latency. They are designed to store cold data that is rarely read or needed only for backup.

We use them in our storage hierarchy for three central services:

- Backup and restore of data

- Long-term archival of data

- Migration of active (online) data to less expensive storage media

User data in the GPFS archive file systems of the HPC-systems are automatically migrated to tape by the HSM (Hierarchical Storage Manager) component of ISP (IBM Storage Protect). The selection criteria for the migration are the age and size of a file. The user data will be recalled automatically and transparently for the user when they are accessed.

Project related data in the dCache-System are automatically migrated to tape by the ISP-HSM API interface of the Pool servers. The data will be recalled automatically and transparently for the user when they are accessed.

Users of the HPC systems, workstations and PCs have access to their data (Backup, Archive, Migration) in the libraries 24 hours a day, 7 days a week.

Total Numbers

| | |
|---|---|
| Actual capacity | ~ 478 PB |
| Tapes | ~ 43,000 |
| Tape drives | 102 |
| Libraries | 3 (at 2 different buildings) |

Hardware Characteristics of the Tape Libraries Complex

- 1 STK Streamline SL8500

- Actual capacity: ~ 85 PB

6800 cartridges T10000T2, each 8 - 8.5 TB with T10000D

2000 cartridges LTO7M8, each 9 TB (LTO8)

800 cartridges LTO8, each 12 TB

Tape Slots: 10000

- Tape drives: 38

20 x T10000D

18 x LTO8

- Transfer rate:

T10000D: up to 240 MB/sec

LTO8: up to 300 MB/sec

- 1 TS 4500

- Actual capacity: ~ 174 PB

19372 cartridges LTO7M8, each 9 TB with LTO8

Tape Slots: 21386

- Tape drives: 32

32 x LTO8

- Transfer rate:

LTO8: up to 300 MB/sec

- 1 TS 4500

- Actual capacity: ~ 218 PB

5010 cartridges LTO8, each 12 TB

8775 cartridges LTO9, each 18 TB

Tape Slots: 15,844 (licensed)

- Tape drives: 32

32 x LTO9

- Transfer rate:

LTO9: up to 400 MB/sec
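The per-library capacities and the overall totals can be reproduced from the cartridge inventory listed above. In this sketch the library labels and the helper `hours_to_stream` are illustrative names, the T10000T2 cartridges are counted at the upper end (8.5 TB) of the stated range, and the streaming time uses the "up to" transfer rate, so it is a best-case lower bound.

```python
# (library label, cartridge type, count, TB per cartridge) from the list above.
# Labels are illustrative; 8.5 TB is the upper end of the stated 8 - 8.5 TB.
inventory = [
    ("SL8500", "T10000T2", 6800, 8.5),
    ("SL8500", "LTO7M8", 2000, 9),
    ("SL8500", "LTO8", 800, 12),
    ("TS4500 (LTO8 drives)", "LTO7M8", 19372, 9),
    ("TS4500 (LTO9 drives)", "LTO8", 5010, 12),
    ("TS4500 (LTO9 drives)", "LTO9", 8775, 18),
]

per_library_pb = {}
for library, _cartridge, count, tb in inventory:
    per_library_pb[library] = per_library_pb.get(library, 0.0) + count * tb / 1000

total_pb = sum(per_library_pb.values())
total_cartridges = sum(count for _, _, count, _ in inventory)


def hours_to_stream(capacity_tb, rate_mb_per_s):
    """Best-case hours to read or write one full cartridge at the peak rate."""
    return capacity_tb * 1_000_000 / rate_mb_per_s / 3600


for library, pb in per_library_pb.items():
    print(f"{library}: ~{pb:.0f} PB")
print(f"total: ~{total_pb:.0f} PB on {total_cartridges} cartridges")
print(f"full LTO9 cartridge at 400 MB/s: {hours_to_stream(18, 400)} h")
```

Even at the peak rate, streaming one full 18 TB LTO9 cartridge takes more than half a day, which illustrates why tape is reserved for cold data.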

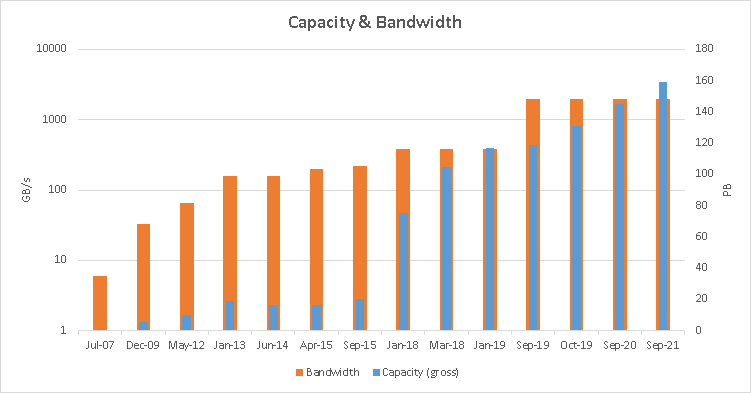

JUST History and Roadmap (for disk based part)

In 2007 JUST started with classical storage building blocks consisting of IBM Power5 servers running AIX and storage controllers with FC and SATA disks, such as the IBM DS4800, DS4700, and DCS9550, with 1 PB gross capacity and a total bandwidth of 6-7 GB/s.

The next milestones came in 2009: in March the servers were replaced by Power6 systems, followed in December by the migration to a new generation of storage controllers and disks with the IBM DS5300. The capacity grew to 5 PB gross and the bandwidth was about 33 GB/s.

In 2012 additional IBM x-Series servers running Linux and IBM DS3512 and DCS3700 storage controllers with SAS and NL-SAS disks were installed, and all data except the fast scratch file system was migrated to the new technology. The freed Power6 servers and storage were added to the scratch file system, pushing the bandwidth to 66 GB/s and increasing the overall capacity to 10 PB.

In January 2013 the installation and test of about 9 PB gross of GSS-24 systems running the pre-GA GSS 1.0 version (with the new GPFS Native RAID feature) started. In mid-September 2013 a new, generally available fast scratch file system was introduced. At the same time a new special file system dedicated to selected large projects with big data demands was made available. The overall JUST storage capacity was 13 PB and a bandwidth of 160 GB/s could be achieved.

In June 2014 an additional 2.8 PB (gross) of GSS storage was installed and used for the migration of the classical $HOME file systems into GNR-based file systems. The JUST storage capacity grew to about 16 PB (gross).

In December 2014 it was decided to transfer the remaining classical storage components to GSS-24 systems by reusing the storage infrastructure combined with new x-Series servers. This was done step by step and finished in March 2015. At the end, the freed storage was added to the fast scratch and big data file systems, which increased the bandwidth to about 200 GB/s. At that time JUST consisted of 31 GPFS Storage Server systems (GSS) with a capacity of 16 PB gross.

In June 2015 a global I/O reconfiguration took place to support the new HPC system JURECA. In all storage servers the 2 times 30 GB Ethernet channels were split into 3 times 20 GB Ethernet channels, which were distributed over three I/O switches. This also implied recabling. In mid-2015 an additional 4 PB (gross) was installed in the form of two capacity-optimized GSS-26 storage servers. They were partially used for the migration of the HPC archive file systems. The storage freed in this way was added to the fast scratch and big data file systems, which increased their capacity by 25% and the I/O bandwidth to 220 GB/s. The overall capacity was 20 PB gross.

In April 2018 the 5th generation of JUST entered production. The old GSS hardware was replaced by new Lenovo Distributed Storage Solution (DSS) systems. The software setup is the same as in JUST4: the parallel file system is based on Spectrum Scale (GPFS) in combination with the GPFS Native RAID (GNR) technology from IBM. This installation provided 75 PB gross capacity.

Two months later the storage cluster JUST-DATA started production, providing a large disk-based capacity (40 PB gross) and a moderate bandwidth of 20 GB/s. To match the growing data requirements, 12-28 PB will be added yearly. In January 2019 we installed an additional 12 PB, followed by another 12 PB in September 2019.

In Q3/2020 the JUST-HPST started production, limited to selected projects for testing. At the same time JUST-DATA was extended by 14 PB.

The 4th Tape Library (IBM TS4500) started production at the end of H1/2021.

The initial object store service started in Q4/2021 and was available on JUDAC.

In October 2021 the last phase of JUST-DATA was installed, adding a capacity of 14 PB.

Data migration from the small Oracle STK Streamline SL8500 to the IBM TS4500 started in Q3/2023.

HPST reached end of life and was removed from the compute systems in Q1/2024.

The 6th generation of JUST was installed in H1/2024, mainly based on the IBM SSS6000 Storage Scale solution (GPFS Native RAID). In total 250 PB gross capacity is available. Major design changes were made, including cluster splitting and the replacement of AIX/Power hardware by Linux/x86 in the TSM/Storage Protect context (client and server). GPFS-AFM and mpiFileUtils were used for the data migration.

Phases 1 and 2 of the XCST were removed from production at the end of Q2/2024.

Dismantling the small Oracle STK Streamline SL8500 began in Q3/2024.