Performance properties

Time

- Description:

-

Total time spent for program execution including the idle times of CPUs

reserved for worker threads during OpenMP sequential execution. This

pattern assumes that every thread of a process is allocated a separate CPU

during the entire runtime of the process. Executions in a time-shared environment

will also include time slices used by other processes. Over-subscription

of processor cores (e.g., exploiting hardware threads) will also manifest

as additional CPU allocation time.

- Unit:

- Seconds

- Diagnosis:

-

Expand the metric tree hierarchy to break down total time into

constituent parts which will help determine how much of it is due to

local/serial computation versus MPI, OpenMP, or POSIX thread parallelization

costs, and how much of that time is wasted waiting for other processes

or threads due to ineffective load balance or due to insufficient

parallelism.

-

Expand the call tree to identify important callpaths and routines where

most time is spent, and examine the times for each process or thread to

locate load imbalance.

- Parent metric:

- None

- Sub-metrics:

-

Execution Time

Overhead Time

OpenMP Idle Threads Time
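
As a minimal sketch of the breakdown described above, the following Python snippet expresses each direct sub-metric as a fraction of total Time. It assumes that Time is exactly the sum of its three sub-metrics (no exclusive remainder); the function name and the sample values are hypothetical and not taken from any real measurement.

```python
def time_fractions(execution: float, overhead: float, idle_threads: float) -> dict:
    """Return each direct sub-metric of Time as a fraction of the total.

    Assumption: Time = Execution + Overhead + OpenMP Idle Threads.
    """
    total = execution + overhead + idle_threads
    if total <= 0:
        raise ValueError("total time must be positive")
    return {
        "Execution Time": execution / total,
        "Overhead Time": overhead / total,
        "OpenMP Idle Threads Time": idle_threads / total,
    }


# Hypothetical values in seconds, summed over all processes and threads.
fractions = time_fractions(execution=60.0, overhead=5.0, idle_threads=35.0)
for name, share in fractions.items():
    print(f"{name}: {share:.0%}")
```

A low Execution Time share in such a breakdown points to either measurement overhead or idle worker threads as the next place to look.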

Visits

- Description:

-

Number of times a call path has been visited. Visit counts for MPI

routine call paths directly relate to the number of MPI Communication Operations and

MPI Synchronization Operations. Visit counts for OpenMP operations and parallel regions

(loops) directly relate to the number of times they were executed.

Routines which were not instrumented, or were filtered during measurement,

do not appear on recorded call paths. Similarly, routines are not shown

if the compiler optimizer successfully in-lined them prior to automatic

instrumentation.

- Unit:

- Counts

- Diagnosis:

-

Call paths that are frequently visited (and thereby have high exclusive

Visit counts) can be expected to have an important role in application

execution performance (e.g., Execution Time). Very frequently executed

routines, which are relatively short and quick to execute, may have an

adverse impact on measurement quality. This can be due to

instrumentation preventing in-lining and other compiler optimizations

and/or overheads associated with measurement such as reading timers and

hardware counters on routine entry and exit. When such routines consist

solely of local/sequential computation (i.e., neither communication nor

synchronization), they should be eliminated to improve the quality of

the parallel measurement and analysis. One approach is to specify the

names of such routines in a filter file for subsequent

measurements to ignore, and thereby considerably reduce their

measurement impact. Alternatively, selective instrumentation

can be employed to entirely avoid instrumenting such routines and

thereby remove all measurement impact. In both cases, uninstrumented

and filtered routines will not appear in the measurement and analysis,

much as if they had been "in-lined" into their calling routine.

- Parent metric:

- None

- Sub-metrics:

-

None
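
The filter-file approach mentioned in the diagnosis can be sketched as follows. This Python helper writes the text of a filter file that excludes a list of routines, assuming the usual Score-P region filter syntax (`SCOREP_REGION_NAMES_BEGIN`, `EXCLUDE`, `SCOREP_REGION_NAMES_END`); the helper name and the routine names are hypothetical, and the Score-P manual should be consulted for the authoritative syntax.

```python
def make_filter_file(routines):
    """Build filter-file text excluding the given routine names.

    Assumption: Score-P region filter block syntax.
    """
    lines = ["SCOREP_REGION_NAMES_BEGIN"]
    lines += [f"  EXCLUDE {name}" for name in routines]
    lines.append("SCOREP_REGION_NAMES_END")
    return "\n".join(lines) + "\n"


# Hypothetical short, frequently called, purely computational routines.
filter_text = make_filter_file(["small_helper", "vector_norm"])
print(filter_text, end="")
```

The resulting text would be saved to a file and passed to the measurement system for subsequent runs.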

Execution Time

(only available after remapping)

- Description:

-

Time spent on program execution but without the idle times of worker

threads during OpenMP sequential execution and time spent on tasks

related to trace generation. Includes time blocked in system calls

(e.g., waiting for I/O to complete) and processor stalls (e.g.,

memory accesses).

- Unit:

- Seconds

- Diagnosis:

-

A low fraction of execution time indicates a suboptimal measurement

configuration leading to trace buffer flushes (see Overhead Time) or

inefficient usage of the available hardware resources (see

OpenMP Idle Threads Time).

- Parent metric:

- Time

- Sub-metrics:

-

Computation Time

MPI Time

OpenMP Time

POSIX Threads Time

OpenACC Time

OpenCL Time

CUDA Time

HIP Time

Overhead Time

(only available after remapping)

- Description:

-

Time spent performing major tasks related to measurement, such as

creation of the experiment archive directory, clock synchronization, or

dumping trace buffer contents to a file. Note that normal per-event

overheads – such as event acquisition, reading timers and

hardware counters, runtime call-path summarization, and storage in trace

buffers – are not included.

- Unit:

- Seconds

- Diagnosis:

-

Significant measurement overheads are typically incurred when

measurement is initialized (e.g., in the program main routine

or MPI_Init) and finalized (e.g., in MPI_Finalize),

and are generally unavoidable. While they extend the total (wallclock)

time for measurement, when they occur before parallel execution starts

or after it completes, the quality of measurement of the parallel

execution is not degraded. Trace file writing overhead time can be

kept to a minimum by specifying an efficient parallel filesystem (when

provided) for the experiment archive (e.g.,

SCOREP_EXPERIMENT_DIRECTORY=/work/mydir).

-

When measurement overhead is reported for other call paths, especially

during parallel execution, measurement perturbation is considerable and

interpretation of the resulting analysis much more difficult. A common

cause of measurement overhead during parallel execution is the flushing

of full trace buffers to disk: warnings issued by the measurement

system indicate when this occurs. When flushing occurs simultaneously

for all processes and threads, the associated perturbation is

localized in time. More usually, buffer filling and flushing occur

independently at different times on each process/thread and the

resulting perturbation is extremely disruptive, often forming a

catastrophic chain reaction. It is highly advisable to avoid

intermediate trace buffer flushes by appropriate instrumentation and

measurement configuration, such as specifying a filter file

listing purely computational routines (classified as type USR by

scorep-score -r) or an adequate trace buffer size

(SCOREP_TOTAL_MEMORY larger than max_buf reported by

scorep-score). If the maximum trace buffer capacity requirement

remains too large for a full-size measurement, it may be necessary to

configure the subject application with a smaller problem size or to

perform fewer iterations/timesteps to shorten the measurement (and

thereby reduce the size of the trace).

- Parent metric:

- Time

- Sub-metrics:

-

None

Computation Time

(only available after remapping)

- Description:

-

Time spent in computational parts of the application, excluding

communication and synchronization overheads of parallelization

libraries/language extensions such as MPI, OpenMP, or POSIX threads.

- Unit:

- Seconds

- Diagnosis:

-

Expand the call tree to determine important callpaths and routines

where most computation time is spent, and examine the time for

each process or thread on those callpaths looking for significant

variations which might indicate the origin of load imbalance.

-

Where computation time on each process/thread is unexpectedly

high, profiling with PAPI preset or platform-specific hardware counters

may help to understand the origin. Serial program profiling tools

(e.g., gprof) may also be helpful. Generally, compiler optimization

flags and optimized libraries should be investigated to improve serial

performance, and where necessary alternative algorithms employed.

- Parent metric:

- Execution Time

- Sub-metrics:

-

OpenMP Target Kernel Time

OpenCL Kernel Time

CUDA Kernel Time

HIP Kernel Time

MPI Time

(only available after remapping)

- Description:

-

Time spent in (instrumented) MPI calls. Note that depending on the

setting of the SCOREP_MPI_ENABLE_GROUPS environment variable,

certain classes of MPI calls may have been excluded from measurement and

therefore do not show up in the analysis report.

- Unit:

- Seconds

- Diagnosis:

-

Expand the metric tree to determine which classes of MPI operation

contribute the most time. Typically the remaining (exclusive) MPI Time,

corresponding to instrumented MPI routines that are not in one of the

child classes, will be negligible.

- Parent metric:

- Execution Time

- Sub-metrics:

-

MPI Management Time

MPI Synchronization Time

MPI Communication Time

MPI File I/O Time

MPI Management Time

(only available after remapping)

- Description:

-

Time spent in MPI calls related to management operations, such as MPI

initialization and finalization, opening/closing of files used for MPI

file I/O, or creation/deletion of various handles (e.g., communicators

or RMA windows).

- Unit:

- Seconds

- Diagnosis:

-

Expand the metric tree to determine which classes of MPI management

operation contribute the most time. While some management costs are

unavoidable, others can be decreased by improving load balance or reusing

existing handles rather than repeatedly creating and deleting them.

- Parent metric:

- MPI Time

- Sub-metrics:

-

MPI Init/Finalize Time

MPI Communicator Management Time

MPI File Management Time

MPI Window Management Time

MPI Init/Finalize Time

(only available after remapping)

- Description:

-

Time spent in MPI calls regarding initialization and finalization, i.e.,

MPI_Init or MPI_Init_thread and MPI_Finalize

(world model), as well as MPI_Session_init and

MPI_Session_finalize (sessions model). Also covered are

related query functions.

- Unit:

- Seconds

- Diagnosis:

-

These are unavoidable one-off costs for MPI parallel programs, which

can be expected to increase for larger numbers of processes. Some

applications may not use all of the processes provided (or not use some

of them for the entire execution), such that unused and wasted

processes wait in MPI_Finalize for the others to finish. If

the proportion of time in these calls is significant, it is probably

more effective to use a smaller number of processes (or a larger amount

of computation).

- Parent metric:

- MPI Management Time

- Sub-metrics:

-

MPI Initialization Completion Time

Wait at MPI Finalize Time

MPI Initialization Completion Time

- Description:

-

Time spent in MPI initialization after the first process has left the

operation.

- Unit:

- Seconds

- Diagnosis:

-

Generally all processes can be expected to leave MPI initialization

simultaneously, and any significant initialization completion time may

indicate an inefficient MPI implementation or interference from other

processes running on the same compute resources.

- Parent metric:

- MPI Init/Finalize Time

- Sub-metrics:

-

None

Wait at MPI Finalize Time

- Description:

-

Time spent waiting in front of MPI finalization, which is the time

inside MPI_Finalize until the last process has reached

finalization.

- Unit:

- Seconds

- Diagnosis:

-

A large amount of waiting time at finalization can be an indication of load

imbalance. Examine the waiting times for each process and try to

distribute the preceding computation from processes with the shortest

waiting times to those with the longest waiting times.

- Parent metric:

- MPI Init/Finalize Time

- Sub-metrics:

-

None

MPI Communicator Management Time

(only available after remapping)

- Description:

-

Time spent in MPI Communicator management routines such as creating and

freeing communicators, Cartesian and graph topologies, and getting or

setting communicator attributes.

- Unit:

- Seconds

- Diagnosis:

-

There can be significant time in collective operations such as

MPI_Comm_create, MPI_Comm_free and

MPI_Cart_create that are considered neither explicit

synchronization nor communication, but result in implicit barrier

synchronization of participating processes. Avoidable waiting time

for these operations will be reduced if all processes execute them

simultaneously. If these are repeated operations, e.g., in a loop,

it is worth investigating whether their frequency can be reduced by

re-use.

- Parent metric:

- MPI Management Time

- Sub-metrics:

-

None

MPI File Management Time

(only available after remapping)

- Description:

-

Time spent in MPI file management routines such as opening, closing,

deleting, or resizing files, seeking, syncing, and setting or retrieving

file parameters or the process's view of the data in the file.

- Unit:

- Seconds

- Diagnosis:

-

Collective file management calls (see MPI Collective File Operations) may suffer from

wait states due to load imbalance. Examine the times spent in collective

management routines for each process and try to distribute the preceding

computation from processes with the shortest times to those with the

longest times.

- Parent metric:

- MPI Management Time

- Sub-metrics:

-

None

MPI Window Management Time

(only available after remapping)

- Description:

-

Time spent in MPI window management routines such as creating and freeing

memory windows and getting or setting window attributes.

- Unit:

- Seconds

- Parent metric:

- MPI Management Time

- Sub-metrics:

-

MPI Wait at Window Create Time

MPI Wait at Window Free Time

MPI Synchronization Time

(only available after remapping)

- Description:

-

Time spent in MPI explicit synchronization calls, such as barriers and

remote memory access window synchronization. Time in point-to-point

message transfers with no payload data used for coordination is currently

part of MPI Point-to-point Communication Time.

- Unit:

- Seconds

- Diagnosis:

-

Expand the metric tree further to determine the proportion of time in

different classes of MPI synchronization operations. Expand the

calltree to identify which callpaths are responsible for the most

synchronization time. Also examine the distribution of synchronization

time on each participating process for indication of load imbalance in

preceding code.

- Parent metric:

- MPI Time

- Sub-metrics:

-

MPI Collective Synchronization Time

MPI One-sided Synchronization Time

MPI Collective Synchronization Time

(only available after remapping)

- Description:

-

Total time spent in MPI barriers.

- Unit:

- Seconds

- Diagnosis:

-

When the time for MPI explicit barrier synchronization is significant,

expand the call tree to determine which MPI_Barrier calls are

responsible, and compare with their Visits count to see how

frequently they were executed. Barrier synchronizations which are not

necessary for correctness should be removed. It may also be appropriate

to use a communicator containing fewer processes, or a number of

point-to-point messages for coordination instead. Also examine the

distribution of time on each participating process for indication of

load imbalance in preceding code.

- Parent metric:

- MPI Synchronization Time

- Sub-metrics:

-

Wait at MPI Barrier Time

MPI Barrier Completion Time

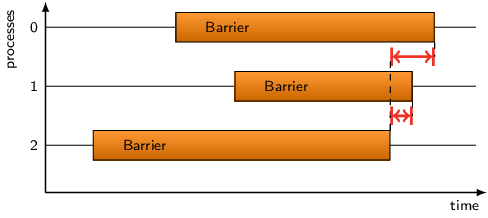

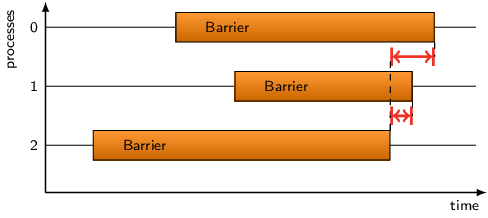

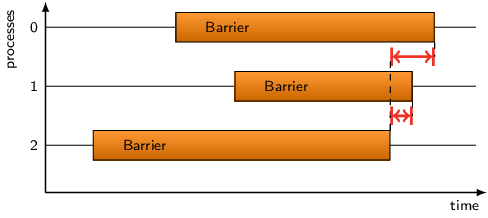

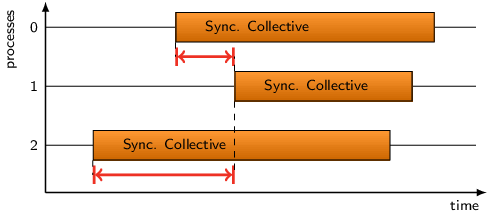

Wait at MPI Barrier Time

- Description:

-

Time spent waiting in front of an MPI barrier, which is the time inside

the barrier call until the last process has reached the barrier.

-

Note that Scalasca does not yet analyze non-blocking barriers introduced

with MPI v3.0.

- Unit:

- Seconds

- Diagnosis:

-

A large amount of waiting time at barriers can be an indication of load

imbalance. Examine the waiting times for each process and try to

distribute the preceding computation from processes with the shortest

waiting times to those with the longest waiting times.

- Parent metric:

- MPI Collective Synchronization Time

- Sub-metrics:

-

None
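
The definition above can be illustrated with a small Python sketch that derives per-process waiting time from barrier enter timestamps. It is a simplified model, not Scalasca's implementation: it assumes one enter timestamp per process on a common clock, and the function name and sample values are hypothetical.

```python
def wait_at_barrier(enter_times):
    """Waiting time per process: time from its own barrier entry
    until the last process has entered the barrier."""
    last_arrival = max(enter_times)
    return [last_arrival - t for t in enter_times]


# Hypothetical enter timestamps (seconds) for four processes.
waits = wait_at_barrier([1.0, 1.5, 4.0, 2.0])
print(waits)  # [3.0, 2.5, 0.0, 2.0]

# The process with the shortest wait arrived last and is a candidate
# for handing some of its preceding computation to the others.
slowest = waits.index(min(waits))
print("last to arrive: process", slowest)
```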

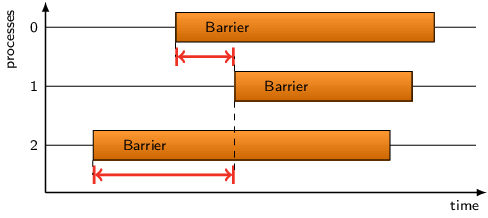

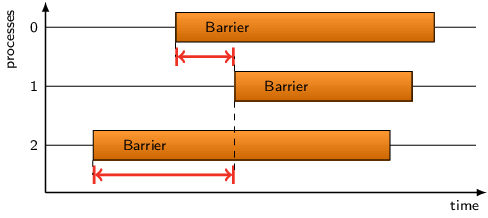

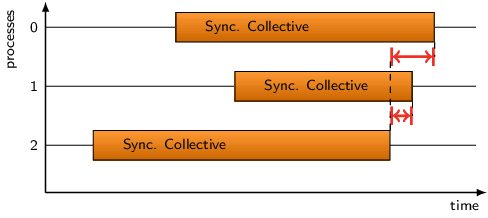

MPI Barrier Completion Time

- Description:

-

Time spent in MPI barriers after the first process has left the operation.

-

Note that Scalasca does not yet analyze non-blocking barriers introduced

with MPI v3.0.

- Unit:

- Seconds

- Diagnosis:

-

Generally all processes can be expected to leave MPI barriers

simultaneously, and any significant barrier completion time may

indicate an inefficient MPI implementation or interference from other

processes running on the same compute resources.

- Parent metric:

- MPI Collective Synchronization Time

- Sub-metrics:

-

None
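
As a complement to the waiting time at barrier entry, the completion time can be sketched in Python from barrier exit timestamps. This is a simplified model under the assumption of one exit timestamp per process on a common clock; the function name and sample values are hypothetical.

```python
def barrier_completion(exit_times):
    """Completion time per process: time it remains in the barrier
    after the first process has already left."""
    first_exit = min(exit_times)
    return [t - first_exit for t in exit_times]


# Hypothetical exit timestamps (seconds) for four processes.
completion = barrier_completion([5.0, 5.25, 5.0, 6.0])
print(completion)  # [0.0, 0.25, 0.0, 1.0]
```

Values well above zero, as for the last process in this example, would be the ones to investigate for interference or an inefficient barrier implementation.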

MPI Communication Time

(only available after remapping)

- Description:

-

Time spent in MPI communication calls, including point-to-point,

collective, and one-sided communication.

- Unit:

- Seconds

- Diagnosis:

-

Expand the metric tree further to determine the proportion of time in

different classes of MPI communication operations. Expand the calltree

to identify which callpaths are responsible for the most communication

time. Also examine the distribution of communication time on each

participating process for indication of communication imbalance or load

imbalance in preceding code.

- Parent metric:

- MPI Time

- Sub-metrics:

-

MPI Point-to-point Communication Time

MPI Collective Communication Time

MPI One-sided Communication Time

MPI Point-to-point Communication Time

(only available after remapping)

- Description:

-

Total time spent in MPI point-to-point communication calls. Note that

this is only the respective times for the sending and receiving calls,

and not message transmission time.

- Unit:

- Seconds

- Diagnosis:

-

Investigate whether communication time is commensurate with the number

of MPI Communication Operations and MPI Bytes Transferred. Consider replacing blocking

communication with non-blocking communication that can potentially be

overlapped with computation, or using persistent communication to

amortize message setup costs for common transfers. Also consider the

mapping of processes onto compute resources, especially if there are

notable differences in communication time for particular processes,

which might indicate longer/slower transmission routes or network

congestion.

- Parent metric:

- MPI Communication Time

- Sub-metrics:

-

MPI Late Sender Time

MPI Late Receiver Time

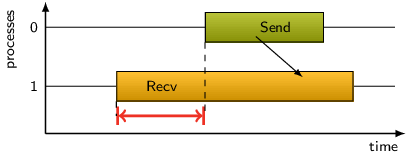

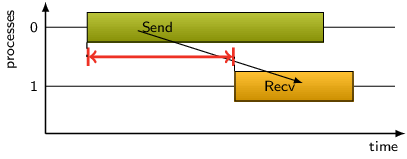

MPI Late Sender Time

- Description:

-

Refers to the waiting time caused by a blocking receive operation

(e.g., MPI_Recv or MPI_Wait) that is posted earlier

than the corresponding send operation.

-

If the receiving process is waiting for multiple messages to arrive

(e.g., in a call to MPI_Waitall), the maximum waiting time is

accounted for, i.e., the waiting time due to the latest sender.

- Unit:

- Seconds

- Diagnosis:

-

Try to replace MPI_Recv with a non-blocking receive MPI_Irecv

that can be posted earlier, proceed concurrently with computation, and

complete with a wait operation after the message is expected to have been

sent. Try to post sends earlier, such that they are available when

receivers need them. Note that outstanding messages (i.e., sent before the

receiver is ready) will occupy internal message buffers, and that large

numbers of posted receive buffers will also introduce message management

overhead; moderation is therefore advisable.

- Parent metric:

- MPI Point-to-point Communication Time

- Sub-metrics:

-

MPI Late Sender, Wrong Order Time
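
The Late Sender definition, including the rule for multiple outstanding messages, can be sketched in Python as follows. This is a simplified model that compares only the enter timestamps of the receive and send operations on a common clock; the function names and sample values are hypothetical.

```python
def late_sender_time(recv_enter, send_enter):
    """Waiting time of a blocking receive posted before its matching send."""
    return max(0.0, send_enter - recv_enter)


def late_sender_waitall(wait_enter, send_enters):
    """For a wait on several messages, only the maximum waiting time
    counts, i.e., the waiting time due to the latest sender."""
    return max((late_sender_time(wait_enter, s) for s in send_enters), default=0.0)


print(late_sender_time(recv_enter=2.0, send_enter=5.0))   # 3.0
print(late_sender_time(recv_enter=5.0, send_enter=2.0))   # 0.0
print(late_sender_waitall(1.0, [0.5, 3.0, 2.0]))          # 2.0
```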

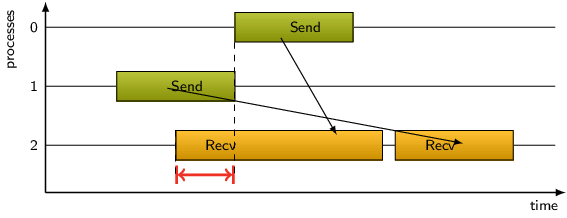

MPI Late Sender, Wrong Order Time

- Description:

-

A Late Sender situation may be the result of messages that are received

in the wrong order. If a process expects messages from one or more

processes in a certain order, although these processes are sending them

in a different order, the receiver may need to wait for a message if it

tries to receive a message early that has been sent late.

-

This pattern comes in two variants:

- The messages involved were sent from the same source location

- The messages involved were sent from different source locations

See the description of the corresponding specializations for more details.

- Unit:

- Seconds

- Diagnosis:

-

Check the proportion of MPI Point-to-point Receive Communication Operations that are MPI Late Sender Instances (Communications).

Swap the order of receiving from different sources to match the most

common ordering.

- Parent metric:

- MPI Late Sender Time

- Sub-metrics:

-

MPI Late Sender, Wrong Order Time / Different Sources

MPI Late Sender, Wrong Order Time / Same Source

MPI Late Sender, Wrong Order Time / Different Sources

- Description:

-

This specialization of the Late Sender, Wrong Order pattern refers

to wrong order situations due to messages received from different source

locations.

- Unit:

- Seconds

- Diagnosis:

-

Check the proportion of MPI Point-to-point Receive Communication Operations that are

MPI Late Sender, Wrong Order Instances (Communications). Swap the order of receiving from different

sources to match the most common ordering. Consider using the wildcard

MPI_ANY_SOURCE to receive (and process) messages as they

arrive from any source rank.

- Parent metric:

- MPI Late Sender, Wrong Order Time

- Sub-metrics:

-

None

MPI Late Sender, Wrong Order Time / Same Source

- Description:

-

This specialization of the Late Sender, Wrong Order pattern refers

to wrong order situations due to messages received from the same source

location.

- Unit:

- Seconds

- Diagnosis:

-

Swap the order of receiving to match the order messages are sent, or

swap the order of sending to match the order they are expected to be

received. Consider using the wildcard MPI_ANY_TAG to receive

(and process) messages in the order they arrive from the source.

- Parent metric:

- MPI Late Sender, Wrong Order Time

- Sub-metrics:

-

None

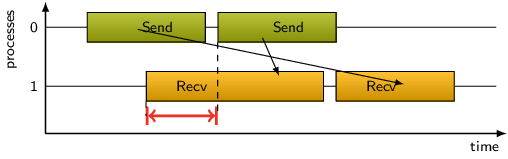

MPI Late Receiver Time

- Description:

-

A send operation may be blocked until the corresponding receive

operation is called. This pattern refers to the time spent waiting

as a result of this situation.

- Unit:

- Seconds

- Diagnosis:

-

Check the proportion of MPI Point-to-point Send Communication Operations that are MPI Late Receiver Instances (Communications).

The MPI implementation may be working in synchronous mode by default,

in which case explicit use of asynchronous non-blocking sends can be tried.

If the size of the message to be sent exceeds the available MPI

internal buffer space then the operation will be blocked until the data

can be transferred to the receiver: some MPI implementations allow

larger internal buffers or different thresholds to be specified. Also

consider the mapping of processes onto compute resources, especially if

there are notable differences in communication time for particular

processes, which might indicate longer/slower transmission routes or

network congestion.

- Parent metric:

- MPI Point-to-point Communication Time

- Sub-metrics:

-

None
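
The first diagnostic step above (checking what proportion of sends are Late Receiver instances) can be sketched in Python. The model assumes synchronous sends that block until the matching receive is posted; with buffered or eager transfers no such waiting would occur. The function name and the timestamp pairs are hypothetical.

```python
def late_receiver_summary(pairs):
    """Given (send_enter, recv_enter) timestamp pairs, return the total
    waiting time and the proportion of sends that had to wait."""
    waits = [max(0.0, recv - send) for send, recv in pairs]
    instances = sum(1 for w in waits if w > 0.0)
    proportion = instances / len(pairs) if pairs else 0.0
    return sum(waits), proportion


# Hypothetical timestamps (seconds) for four send/receive pairs.
total_wait, proportion = late_receiver_summary(
    [(1.0, 3.0), (4.0, 4.0), (6.0, 5.0), (7.0, 7.5)]
)
print(total_wait, proportion)  # 2.5 0.5
```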

MPI Collective Communication Time

(only available after remapping)

- Description:

-

Total time spent in MPI collective communication calls.

- Unit:

- Seconds

- Diagnosis:

-

As the number of participating MPI processes

increases (i.e., ranks in MPI_COMM_WORLD or a subcommunicator),

time in collective communication can be expected to increase

correspondingly. Part of the increase will be due to additional data

transmission requirements, which are generally similar for all

participants. A significant part is typically time some (often many)

processes are blocked waiting for the last of the required participants

to reach the collective operation. This may be indicated by significant

variation in collective communication time across processes, but is

most conclusively quantified from the child metrics determinable via

automatic trace pattern analysis.

-

Since basic transmission cost per byte for collectives can be relatively high,

combining several collective operations of the same type each with small amounts of data

(e.g., a single value per rank) into fewer operations with larger payloads

using either a vector/array of values or aggregate datatype may be beneficial.

(Overdoing this and aggregating very large message payloads is counter-productive

due to explicit and implicit memory requirements, and MPI protocol switches

for messages larger than an eager transmission threshold.)

-

MPI implementations generally provide optimized collective communication operations;

however, in rare cases, it may be appropriate to replace a collective

communication operation provided by the MPI implementation with a

customized implementation of your own using point-to-point operations.

For example, certain MPI implementations of MPI_Scan include

unnecessary synchronization of all participating processes, or

asynchronous variants of collective operations may be preferable to

fully synchronous ones where they permit overlapping of computation.

- Parent metric:

- MPI Communication Time

- Sub-metrics:

-

MPI Early Reduce Time

MPI Early Scan Time

MPI Late Broadcast Time

MPI Wait at N x N Time

MPI N x N Completion Time
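
The benefit of combining several small collectives can be illustrated with a rough cost model. The Python sketch below assumes a simple latency-plus-bandwidth model (a fixed start-up cost per operation plus a per-byte cost), which is an illustrative assumption and not something Scalasca measures; the parameter values are hypothetical, and the model ignores the protocol switches for large payloads noted above.

```python
def collective_cost(message_bytes, latency, per_byte):
    """Assumed cost model: fixed start-up latency plus per-byte cost."""
    return latency + per_byte * message_bytes


def separate_vs_combined(count, message_bytes, latency, per_byte):
    """Cost of `count` small collectives versus one combined collective
    carrying the same total payload."""
    separate = count * collective_cost(message_bytes, latency, per_byte)
    combined = collective_cost(count * message_bytes, latency, per_byte)
    return separate, combined


# Hypothetical: eight reductions of one 8-byte value each,
# 10 microseconds start-up latency, 1 nanosecond per byte.
separate, combined = separate_vs_combined(
    count=8, message_bytes=8, latency=10e-6, per_byte=1e-9
)
print(f"separate: {separate:.3e} s, combined: {combined:.3e} s")
```

Under this model the saving equals the start-up latency of the operations that were eliminated, which is why aggregation pays off mainly for small payloads.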

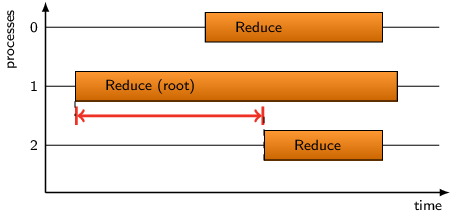

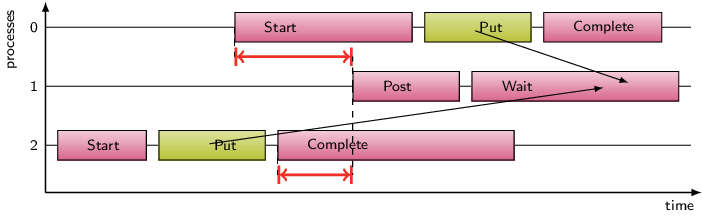

MPI Early Reduce Time

- Description:

-

Collective communication operations that send data from all processes

to one destination process (i.e., n-to-1) may suffer from waiting times

if the destination process enters the operation earlier than any of its

sending counterparts. This pattern refers to the time lost on the root

rank as a result of this situation, accounting for the waiting time due

to the latest sending process. It applies to the MPI calls

MPI_Reduce, MPI_Gather and MPI_Gatherv.

-

Note that Scalasca does not yet analyze non-blocking collectives introduced

with MPI v3.0.

- Unit:

- Seconds

- Parent metric:

- MPI Collective Communication Time

- Sub-metrics:

-

None

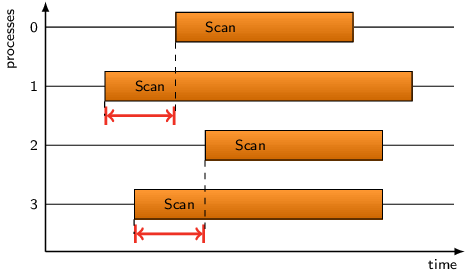

MPI Early Scan Time

- Description:

-

MPI_Scan or MPI_Exscan operations may suffer from

waiting times if the process with rank n enters the operation

earlier than its sending counterparts (i.e., ranks 0..n-1). This

pattern refers to the time lost as a result of this situation.

-

Note that Scalasca does not yet analyze non-blocking collectives introduced

with MPI v3.0.

- Unit:

- Seconds

- Parent metric:

- MPI Collective Communication Time

- Sub-metrics:

-

None

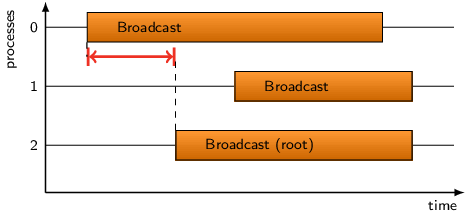

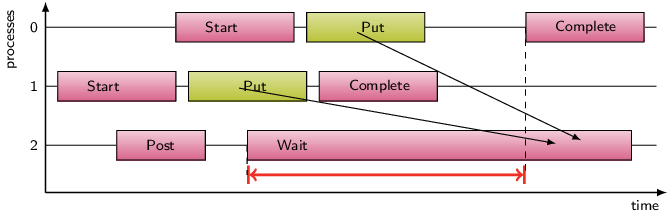

MPI Late Broadcast Time

- Description:

-

Collective communication operations that send data from one source

process to all processes (i.e., 1-to-n) may suffer from waiting times

if destination processes enter the operation earlier than the source

process, that is, before any data could have been sent. This pattern

refers to the time lost as a result of this situation. It applies to

the MPI calls MPI_Bcast, MPI_Scatter and

MPI_Scatterv.

-

Note that Scalasca does not yet analyze non-blocking collectives introduced

with MPI v3.0.

- Unit:

- Seconds

- Parent metric:

- MPI Collective Communication Time

- Sub-metrics:

-

None
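
The Late Broadcast definition can be sketched in Python as follows. This is a simplified model that uses one enter timestamp per process on a common clock and charges each destination process the time it spent in the operation before the root entered; the function name and sample values are hypothetical.

```python
def late_broadcast(enter_times, root):
    """Waiting time per process for a 1-to-n collective: destinations
    that enter before the root wait until the root has entered."""
    root_enter = enter_times[root]
    return [
        0.0 if rank == root else max(0.0, root_enter - t)
        for rank, t in enumerate(enter_times)
    ]


# Hypothetical enter timestamps (seconds); rank 0 is the root.
waits = late_broadcast([3.0, 1.0, 2.5, 4.0], root=0)
print(waits)  # [0.0, 2.0, 0.5, 0.0]
```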

MPI Wait at N x N Time

- Description:

-

Collective communication operations that send data from all processes

to all processes (i.e., n-to-n) exhibit an inherent synchronization

among all participants, that is, no process can finish the operation

until the last process has started it. This pattern covers the time

spent in n-to-n operations until all processes have reached it. It

applies to the MPI calls MPI_Reduce_scatter,

MPI_Reduce_scatter_block, MPI_Allgather,

MPI_Allgatherv, MPI_Allreduce and MPI_Alltoall.

-

Note that the time reported by this pattern is not necessarily

completely waiting time since some processes could – at least

theoretically – already communicate with each other while others

have not yet entered the operation.

-

Also note that Scalasca does not yet analyze non-blocking and neighborhood

collectives introduced with MPI v3.0.

- Unit:

- Seconds

- Parent metric:

- MPI Collective Communication Time

- Sub-metrics:

-

None

MPI N x N Completion Time

- Description:

-

This pattern refers to the time spent in MPI n-to-n collectives after

the first process has left the operation.

-

Note that the time reported by this pattern is not necessarily

completely waiting time since some processes could – at least

theoretically – still communicate with each other while others

have already finished communicating and exited the operation.

-

Also note that Scalasca does not yet analyze non-blocking and neighborhood

collectives introduced with MPI v3.0.

- Unit:

- Seconds

- Parent metric:

- MPI Collective Communication Time

- Sub-metrics:

-

None

MPI File I/O Time

(only available after remapping)

- Description:

-

Time spent in MPI file I/O calls.

- Unit:

- Seconds

- Diagnosis:

-

Expand the metric tree further to determine the proportion of time in

different classes of MPI file I/O operations. Expand the calltree to

identify which callpaths are responsible for the most file I/O time.

Also examine the distribution of MPI file I/O time on each process for

indication of load imbalance. Use a parallel filesystem (such as

/work) when possible, and check that appropriate hint values

have been associated with the MPI_Info object of MPI files.

- Parent metric:

- MPI Time

- Sub-metrics:

-

MPI Individual File I/O Time

MPI Collective File I/O Time

MPI Individual File I/O Time

(only available after remapping)

- Description:

-

Time spent in individual MPI file I/O calls.

- Unit:

- Seconds

- Diagnosis:

-

Expand the calltree to identify which callpaths are responsible for the

most individual file I/O time. When multiple processes read and write

to files, MPI collective file reads and writes can be more efficient.

Examine the number of MPI Individual File Read Operations and MPI Individual File Write Operations to

locate potential opportunities for collective I/O.

- Parent metric:

- MPI File I/O Time

- Sub-metrics:

-

None

MPI Collective File I/O Time

(only available after remapping)

- Description:

-

Time spent in collective MPI file I/O calls.

- Unit:

- Seconds

- Diagnosis:

-

Expand the calltree to identify which callpaths are responsible for the

most collective file I/O time. Examine the distribution of times on

each participating process for indication of imbalance in the operation

itself or in preceding code. Examine the number of MPI Collective File Read Operations

and MPI Collective File Write Operations done by each process as a possible origin of

imbalance. Where asynchrony or imbalance prevents effective use of

collective file I/O, individual (i.e., non-collective) file I/O may be

preferable.

- Parent metric:

- MPI File I/O Time

- Sub-metrics:

-

None

OpenMP Target Kernel Time

(only available after remapping)

- Description:

-

Time spent executing OpenMP target kernels.

- Unit:

- Seconds

- Parent metric:

- Computation Time

- Sub-metrics:

-

None

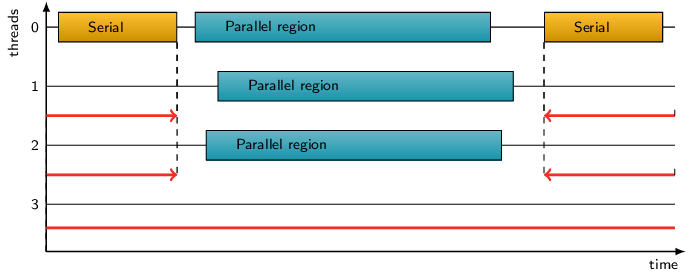

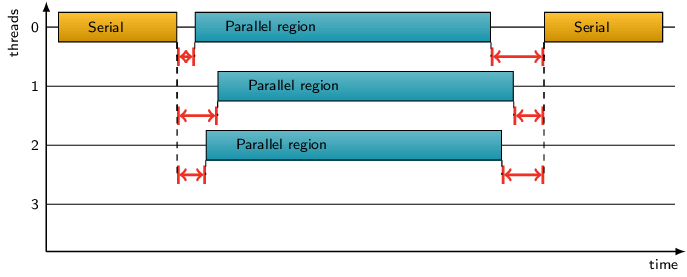

OpenMP Idle Threads Time

(only available after remapping)

- Description:

-

Idle time on CPUs that may be reserved for teams of threads when the

process is executing sequentially before and after OpenMP parallel

regions, or with less than the full team within OpenMP parallel

regions.

- Unit:

- Seconds

- Diagnosis:

-

On shared compute resources, unused threads may simply sleep and allow

the resources to be used by other applications; however, on dedicated

compute resources (or where unused threads busy-wait and thereby occupy

the resources) their idle time is charged to the application.

According to Amdahl's Law, the fraction of inherently serial execution

time limits the effectiveness of employing additional threads to reduce

the execution time of parallel regions. Where the Idle Threads Time is

significant, total Time (and wall-clock execution time) may be

reduced by effective parallelization of sections of code which execute

serially. Alternatively, the proportion of wasted Idle Threads Time

will be reduced by running with fewer threads, albeit resulting in a

longer wall-clock execution time but more effective usage of the

allocated compute resources.

- Parent metric:

- Time

- Sub-metrics:

-

OpenMP Limited Parallelism Time
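
The Amdahl's Law trade-off described in the diagnosis above can be sketched numerically. The following minimal Python model is an illustration only: the function names, the 10 s / 90 s split, and the assumptions that the parallel part scales perfectly and that idle worker threads are charged for the entire serial part are all hypothetical simplifications, not part of the measurement system.

```python
def amdahl_speedup(serial_fraction: float, threads: int) -> float:
    """Ideal speedup when only the parallel fraction scales with threads."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / threads)


def idle_threads_time(serial_seconds: float, threads: int) -> float:
    """CPU time charged to the idle worker threads during serial execution."""
    return serial_seconds * (threads - 1)


# Hypothetical run: 10 s serial part plus 90 s of perfectly parallel work.
serial_s, parallel_s = 10.0, 90.0
for n in (1, 4, 16):
    wall = serial_s + parallel_s / n       # wall-clock execution time
    idle = idle_threads_time(serial_s, n)  # idle time of the n - 1 workers
    total = wall * n                       # CPU allocation time over all threads
    print(f"{n:2d} threads: wall={wall:6.2f}s idle={idle:6.1f}s "
          f"({idle / total:.0%} of total Time)")
```

In this assumed scenario, going from 4 to 16 threads shortens the wall-clock time, but the idle share of the total allocation grows from roughly a quarter to more than half, which is the trade-off between shorter runtime and effective resource usage noted above.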

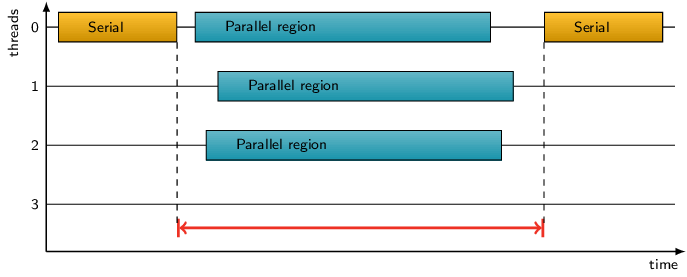

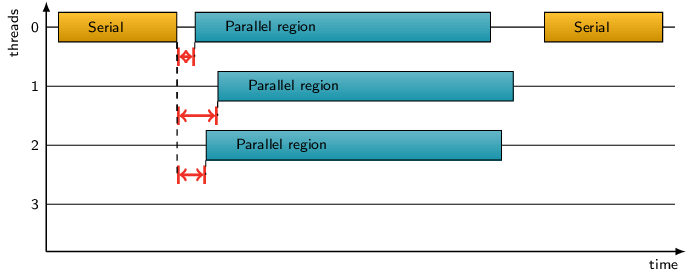

OpenMP Limited Parallelism Time

(only available after remapping)

- Description:

-

Idle time on CPUs that may be reserved for threads within OpenMP

parallel regions where not all of the thread team participates.

- Unit:

- Seconds

- Diagnosis:

-

Code sections marked as OpenMP parallel regions which are executed

serially (i.e., only by the master thread) or by less than the full

team of threads, can result in allocated but unused compute resources

being wasted. Typically this arises from insufficient work being

available within the marked parallel region to productively employ all

threads. This may be because the loop contains too few iterations or

the OpenMP runtime has determined that additional threads would not be

productive. Alternatively, the OpenMP omp_set_num_threads API

or num_threads or if clauses may have been explicitly

specified, e.g., to reduce parallel execution overheads such as

OpenMP Thread Management Time or OpenMP Synchronization Time. If the proportion of

OpenMP Limited Parallelism Time is significant, it may be more

efficient to run with fewer threads for that problem size.

- Parent metric:

- OpenMP Idle Threads Time

- Sub-metrics:

-

None

OpenMP Time

(only available after remapping)

- Description:

-

Time spent in OpenMP API calls and code generated by the OpenMP compiler.

In particular, this includes thread team management and synchronization

activities.

- Unit:

- Seconds

- Diagnosis:

-

Expand the metric tree to determine which classes of OpenMP activities

contribute the most time.

- Parent metric:

- Execution Time

- Sub-metrics:

-

OpenMP Thread Management Time

OpenMP Target Time

OpenMP Synchronization Time

OpenMP Flush Time

OpenMP Thread Management Time

- Description:

-

Time spent managing teams of threads, creating and initializing them

when forking a new parallel region and clearing up afterwards when

joining.

- Unit:

- Seconds

- Diagnosis:

-

Management overhead for an OpenMP parallel region depends on the number

of threads to be employed and the number of variables to be initialized

and saved for each thread, each time the parallel region is executed.

Typically a pool of threads is used by the OpenMP runtime system to

avoid forking and joining threads in each parallel region; however,

threads from the pool still need to be added to the team and assigned

tasks to perform according to the specified schedule. When the overhead

is a significant proportion of the time for executing the parallel

region, it is worth investigating whether several parallel regions can

be combined to amortize thread management overheads. Alternatively, it

may be appropriate to reduce the number of threads either for the

entire execution or only for this parallel region (e.g., via

num_threads or if clauses).

- Parent metric:

- OpenMP Time

- Sub-metrics:

-

OpenMP Thread Team Fork Time

OpenMP Thread Team Fork Time

- Description:

-

Time spent creating and initializing teams of threads.

- Unit:

- Seconds

- Parent metric:

- OpenMP Thread Management Time

- Sub-metrics:

-

None

OpenMP Target Time

(only available after remapping)

- Description:

-

Time spent in OpenMP target related API calls and target code generated

by the compiler. In particular, this includes memory management,

synchronization and kernel launches.

Note:

Some OpenMP runtimes handle the events in this metric tree

asynchronously to reduce overhead and more accurately describe the

runtime behavior. A dispatched event will then correspond to the

time it took to add the event to the target architecture's native

event queue (e.g., a CUDA stream). Since some events require

synchronization first, this can lead to high measurement times in

an unexpected place (e.g., long times for data transfers induced

by kernel launches). Therefore, events have to be examined

carefully.

- Unit:

- Seconds

- Diagnosis:

-

Expand the metric tree to determine which classes of OpenMP target

activities contribute the most time.

- Parent metric:

- OpenMP Time

- Sub-metrics:

-

OpenMP Target Memory Management Time

OpenMP Target Kernel Launch Time

OpenMP Target Memory Management Time

(only available after remapping)

- Description:

-

Time spent queuing and waiting for data transfer operations to finish.

This includes direct transfers initiated by OpenMP API functions like

omp_target_memcpy, explicit transfers via OpenMP directives,

indirect transfers for kernels, and memory management operations, like

allocations.

- Unit:

- Seconds

- Diagnosis:

-

Excessive numbers of data transfer operations can significantly

increase the overall time spent due to the overhead induced by each

operation. Try to reduce the number of data transfer operations by

moving memory early, asynchronously and less often. Try to move as

much data as possible in each transfer.

- Parent metric:

- OpenMP Target Time

- Sub-metrics:

-

None

OpenMP Target Kernel Launch Time

(only available after remapping)

- Description:

-

Time spent to launch OpenMP target kernels and wait for their

completion, if not asynchronous.

- Unit:

- Seconds

- Parent metric:

- OpenMP Target Time

- Sub-metrics:

-

None

OpenMP Synchronization Time

(only available after remapping)

- Description:

-

Time spent in OpenMP synchronization, whether barriers or mutual exclusion

via ordered sequentialization, critical sections, atomics or lock API calls.

- Unit:

- Seconds

- Parent metric:

- OpenMP Time

- Sub-metrics:

-

OpenMP Barrier Synchronization Time

OpenMP Critical Synchronization Time

OpenMP Lock API Synchronization Time

OpenMP Ordered Synchronization Time

OpenMP Taskwait Synchronization Time

OpenMP Barrier Synchronization Time

(only available after remapping)

- Description:

-

Time spent in implicit (compiler-generated) or explicit (user-specified)

OpenMP barrier synchronization. Note that during measurement implicit

barriers are treated similarly to explicit ones. The instrumentation

procedure replaces an implicit barrier with an explicit barrier enclosed

by the parallel construct. This is done by adding a nowait

clause and a barrier directive as the last statement of the parallel

construct. In cases where the implicit barrier cannot be removed (i.e.,

parallel region), the explicit barrier is executed in front of the

implicit barrier, which will then be negligible because the thread team

will already be synchronized when reaching it. The synthetic explicit

barrier appears as a special implicit barrier construct.

- Unit:

- Seconds

- Parent metric:

- OpenMP Synchronization Time

- Sub-metrics:

-

OpenMP Explicit Barrier Synchronization Time

OpenMP Implicit Barrier Synchronization Time

OpenMP Explicit Barrier Synchronization Time

(only available after remapping)

- Description:

-

Time spent in explicit (i.e., user-specified) OpenMP barrier

synchronization, both waiting for other threads (Wait at Explicit OpenMP Barrier Time)

and inherent barrier processing overhead.

- Unit:

- Seconds

- Diagnosis:

-

Locate the most costly barrier synchronizations and determine whether

they are necessary to ensure correctness or could be safely removed

(based on algorithm analysis). Consider replacing an explicit barrier

with a potentially more efficient construct, such as a critical section

or atomic, or use explicit locks. Examine the time that each thread

spends waiting at each explicit barrier, and try to re-distribute

preceding work to improve load balance.

- Parent metric:

- OpenMP Barrier Synchronization Time

- Sub-metrics:

-

Wait at Explicit OpenMP Barrier Time

Wait at Explicit OpenMP Barrier Time

- Description:

-

Time spent in explicit (i.e., user-specified) OpenMP barrier

synchronization waiting for the last thread.

- Unit:

- Seconds

- Diagnosis:

-

A large amount of waiting time at barriers can be an indication of load

imbalance. Examine the waiting times for each thread and try to

distribute the preceding computation from threads with the shortest

waiting times to those with the longest waiting times.

- Parent metric:

- OpenMP Explicit Barrier Synchronization Time

- Sub-metrics:

-

None
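
How waiting time at a barrier relates to load imbalance can be illustrated with a short calculation. This is a minimal sketch with hypothetical arrival times; `barrier_wait_times` is an illustrative helper, not a function of any measurement tool.

```python
def barrier_wait_times(arrival_times):
    """Waiting time of each thread: time until the last thread arrives."""
    last = max(arrival_times)
    return [last - t for t in arrival_times]


# Hypothetical arrival times (seconds) of four threads at one barrier.
arrivals = [1.0, 1.5, 4.0, 2.0]
waits = barrier_wait_times(arrivals)
print(waits)  # [3.0, 2.5, 0.0, 2.0]

# The thread with the shortest wait arrived last and did the most work;
# the thread with the longest wait is the best candidate to take some over.
donor = waits.index(min(waits))
receiver = waits.index(max(waits))
print(donor, receiver)  # 2 0
```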

OpenMP Implicit Barrier Synchronization Time

(only available after remapping)

- Description:

-

Time spent in implicit (i.e., compiler-generated) OpenMP barrier

synchronization, both waiting for other threads (Wait at Implicit OpenMP Barrier Time)

and inherent barrier processing overhead.

- Unit:

- Seconds

- Diagnosis:

-

Examine the time that each thread spends waiting at each implicit

barrier, and if there is a significant imbalance then investigate

whether a schedule clause is appropriate. Note that

dynamic and guided schedules may require more

OpenMP Thread Management Time than static schedules. Consider whether

it is possible to employ the nowait clause to reduce the

number of implicit barrier synchronizations.

- Parent metric:

- OpenMP Barrier Synchronization Time

- Sub-metrics:

-

Wait at Implicit OpenMP Barrier Time
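
The effect of the loop schedule on waiting time at the implicit barrier can be sketched with a toy model. The Python code below is an assumption-laden illustration: it models a static schedule as contiguous blocks of equal iteration count and a dynamic schedule as each free thread taking the next single iteration, and it ignores all scheduling overhead (which, as noted above, is typically higher for dynamic schedules). The iteration costs are hypothetical.

```python
def static_finish_times(costs, threads):
    """Per-thread busy time with contiguous blocks of equal iteration count."""
    chunk = -(-len(costs) // threads)  # ceiling division
    return [sum(costs[t * chunk:(t + 1) * chunk]) for t in range(threads)]


def dynamic_finish_times(costs, threads):
    """Per-thread busy time when each free thread takes the next iteration."""
    busy = [0.0] * threads
    for c in costs:
        t = busy.index(min(busy))  # thread that becomes free first
        busy[t] += c
    return busy


def total_barrier_wait(finish_times):
    """Sum of the times threads wait at the barrier that ends the loop."""
    return sum(max(finish_times) - f for f in finish_times)


# Hypothetical loop whose iteration cost grows linearly: 1, 2, ..., 8.
costs = [float(i) for i in range(1, 9)]
print(total_barrier_wait(static_finish_times(costs, 4)))   # 24.0
print(total_barrier_wait(dynamic_finish_times(costs, 4)))  # 12.0
```

For this triangular workload the modelled dynamic schedule halves the accumulated barrier waiting time; whether that gain survives the additional scheduling overhead has to be checked in the measurement.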

Wait at Implicit OpenMP Barrier Time

- Description:

-

Time spent in implicit (i.e., compiler-generated) OpenMP barrier

synchronization waiting for the last thread.

- Unit:

- Seconds

- Diagnosis:

-

A large amount of waiting time at barriers can be an indication of load

imbalance. Examine the waiting times for each thread and try to

distribute the preceding computation from threads with the shortest

waiting times to those with the longest waiting times.

- Parent metric:

- OpenMP Implicit Barrier Synchronization Time

- Sub-metrics:

-

None

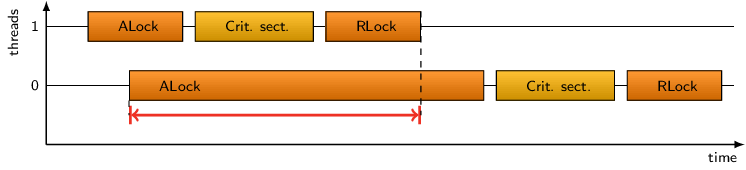

OpenMP Critical Synchronization Time

(only available after remapping)

- Description:

-

Time spent waiting to enter OpenMP critical sections and in atomics,

where mutual exclusion restricts access to a single thread at a time.

- Unit:

- Seconds

- Diagnosis:

-

Locate the most costly critical sections and atomics and determine

whether they are necessary to ensure correctness or could be safely

removed (based on algorithm analysis).

- Parent metric:

- OpenMP Synchronization Time

- Sub-metrics:

-

OpenMP Critical Contention Time

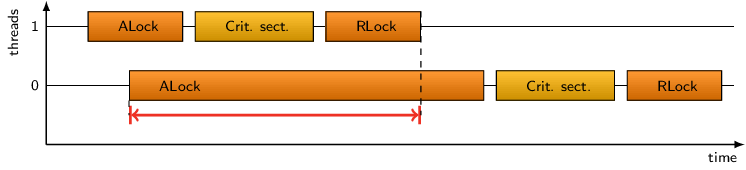

OpenMP Critical Contention Time

- Description:

-

The time lost waiting before entering a critical section while another

thread is still inside the section.

- Unit:

- Seconds

- Diagnosis:

-

A large amount of waiting time can be an indication of too much balance,

since all threads arrive at the critical section almost at the same time. Examine

the waiting times for each thread and try to distribute the preceding

computation on the threads to allow a staggered arrival at the critical

section.

- Parent metric:

- OpenMP Critical Synchronization Time

- Sub-metrics:

-

None
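
Why simultaneous arrival causes contention, and staggered arrival avoids it, can be shown with a small simulation. This is a minimal sketch under stated assumptions: threads enter the critical section in arrival order, each holds it for the same hypothetical `hold_time`, and `critical_wait_times` is an illustrative helper only.

```python
def critical_wait_times(arrival_times, hold_time):
    """Time each thread waits before entering a critical section.

    Threads enter in arrival order; each holds the section for hold_time.
    """
    waits = [0.0] * len(arrival_times)
    free_at = float("-inf")  # time at which the section next becomes free
    order = sorted(range(len(arrival_times)), key=lambda k: arrival_times[k])
    for i in order:
        start = max(arrival_times[i], free_at)
        waits[i] = start - arrival_times[i]
        free_at = start + hold_time
    return waits


# Four threads arriving simultaneously versus staggered by the hold time.
print(critical_wait_times([0.0, 0.0, 0.0, 0.0], 1.0))  # [0.0, 1.0, 2.0, 3.0]
print(critical_wait_times([0.0, 1.0, 2.0, 3.0], 1.0))  # [0.0, 0.0, 0.0, 0.0]
```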

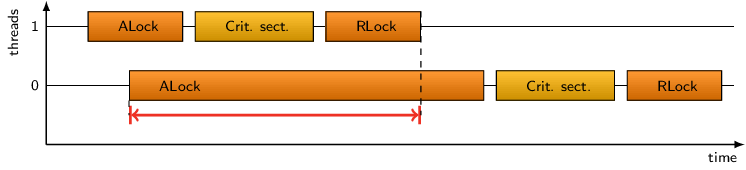

OpenMP Lock API Synchronization Time

(only available after remapping)

- Description:

-

Time spent in OpenMP lock API calls.

- Unit:

- Seconds

- Diagnosis:

-

Locate the most costly usage of locks and determine whether they are

necessary to ensure correctness or could be safely removed (based on

algorithm analysis). Consider re-writing the algorithm to use lock-free

data structures.

- Parent metric:

- OpenMP Synchronization Time

- Sub-metrics:

-

OpenMP Lock API Contention Time

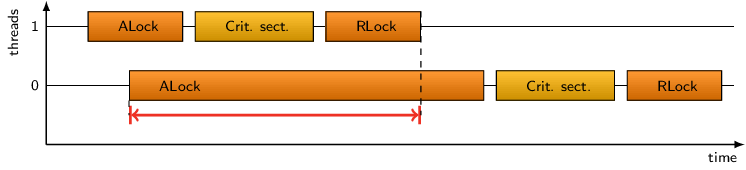

OpenMP Lock API Contention Time

- Description:

-

The time lost waiting for an explicit lock to be acquired while another

thread still holds the corresponding lock.

- Unit:

- Seconds

- Diagnosis:

-

A large amount of waiting time can be an indication of too much balance,

since all threads try to acquire the lock almost at the same time. Examine

the waiting times for each thread and try to distribute the preceding

computation on the threads to allow a staggered arrival at the lock.

- Parent metric:

- OpenMP Lock API Synchronization Time

- Sub-metrics:

-

None

OpenMP Ordered Synchronization Time

(only available after remapping)

- Description:

-

Time spent waiting to enter OpenMP ordered regions due to enforced

sequentialization of loop iteration execution order in the region.

- Unit:

- Seconds

- Diagnosis:

-

Locate the most costly ordered regions and determine

whether they are necessary to ensure correctness or could be safely

removed (based on algorithm analysis).

- Parent metric:

- OpenMP Synchronization Time

- Sub-metrics:

-

None

OpenMP Taskwait Synchronization Time

(only available after remapping)

- Description:

-

Time spent in OpenMP taskwait directives, waiting for child tasks

to finish.

- Unit:

- Seconds

- Parent metric:

- OpenMP Synchronization Time

- Sub-metrics:

-

None

OpenMP Flush Time

(only available after remapping)

- Description:

-

Time spent in OpenMP flush directives.

- Unit:

- Seconds

- Parent metric:

- OpenMP Time

- Sub-metrics:

-

None

POSIX Threads Time

(only available after remapping)

- Description:

-

Time spent in instrumented POSIX threads API calls. In particular, this

includes thread management and synchronization activities.

- Unit:

- Seconds

- Diagnosis:

-

Expand the metric tree to determine which classes of POSIX thread

activities contribute the most time.

- Parent metric:

- Execution Time

- Sub-metrics:

-

POSIX Threads Management Time

POSIX Threads Synchronization Time

POSIX Threads Management Time

(only available after remapping)

- Description:

-

Time spent managing (i.e., creating, joining, cancelling, etc.) POSIX

threads.

- Unit:

- Seconds

- Diagnosis:

-

Excessive POSIX threads management time in pthread_join

indicates load imbalance which causes wait states in the joining

threads waiting for the other thread to finish. Examine the join

times and try to re-distribute the computation in the corresponding

worker threads to achieve a better load balance.

-

Also, correlate the thread management time to the Visits of

management routines. If visit counts are high, consider using a

thread pool to reduce the number of thread management operations.

- Parent metric:

- POSIX Threads Time

- Sub-metrics:

-

None
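
The thread pool suggestion above can be illustrated with an analogy in Python rather than with the POSIX threads C API itself. The sketch below uses the standard library `ThreadPoolExecutor`; the task function `work` and the pool size of 4 are hypothetical. It shows that 100 tasks are served by at most 4 worker threads, so thread creation and joining happen a handful of times rather than once per task.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def work(x):
    """Return the result together with the identifier of the executing thread."""
    return x * x, threading.get_ident()


# 100 tasks are distributed over a pool of at most 4 reusable worker threads.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(work, range(100)))

squares = [value for value, _ in results]
thread_ids = {ident for _, ident in results}
print(len(squares), "tasks executed by", len(thread_ids), "worker thread(s)")
```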

POSIX Threads Synchronization Time

(only available after remapping)

- Description:

-

Time spent in POSIX threads synchronization calls, i.e., mutex and

condition variable operations.

- Unit:

- Seconds

- Diagnosis:

-

Expand the metric tree further to determine the proportion of time in

different classes of POSIX thread synchronization operations. Expand the

call tree to identify which callpaths are responsible for the most

synchronization time. Also examine the distribution of synchronization

time on each participating thread for indication of lock contention

effects.

- Parent metric:

- POSIX Threads Time

- Sub-metrics:

-

POSIX Threads Mutex API Synchronization Time

POSIX Threads Condition API Synchronization Time

POSIX Threads Mutex API Synchronization Time

(only available after remapping)

- Description:

-

Time spent in POSIX threads mutex API calls.

- Unit:

- Seconds

- Diagnosis:

-

Locate the most costly usage of mutex operations and determine whether

they are necessary to ensure correctness or could be safely removed

(based on algorithm analysis). Consider re-writing the algorithm to

use lock-free data structures.

- Parent metric:

- POSIX Threads Synchronization Time

- Sub-metrics:

-

POSIX Threads Mutex Contention Time

POSIX Threads Mutex Contention Time

- Description:

-

Time lost waiting for a mutex lock to be acquired while another thread

still holds the corresponding lock.

- Unit:

- Seconds

- Diagnosis:

-

A large amount of waiting time in mutex locks can be an indication of too

much balance, since many threads try to acquire locks almost at the same

time. Examine the waiting times for each thread and try to distribute

the preceding computation on the threads to allow a staggered arrival at

the lock API call.

- Parent metric:

- POSIX Threads Mutex API Synchronization Time

- Sub-metrics:

-

None

POSIX Threads Condition API Synchronization Time

(only available after remapping)

- Description:

-

Time spent in POSIX threads condition API calls.

- Unit:

- Seconds

- Diagnosis:

-

Locate the most costly usage of condition operations and determine whether

they are necessary to ensure correctness or could be safely removed (based

on algorithm analysis). Consider re-writing the algorithm to use data

structures without the need for condition variables.

- Parent metric:

- POSIX Threads Synchronization Time

- Sub-metrics:

-

POSIX Threads Condition Contention Time

POSIX Threads Condition Contention Time

- Description:

-

Time lost waiting for a mutex lock to be acquired in a condition API

call while another thread still holds the corresponding lock.

- Unit:

- Seconds

- Diagnosis:

-

A large amount of waiting time in condition operations can be an indication

of imbalance. Examine the waiting times for each thread and try to

distribute the preceding computation, in particular the work of the

threads responsible for fulfilling the condition.

- Parent metric:

- POSIX Threads Condition API Synchronization Time

- Sub-metrics:

-

None

OpenACC Time

(only available after remapping)

- Description:

-

Time spent in the OpenACC run-time system, API calls and on device.

If the OpenACC implementation is based on CUDA, and OpenACC and CUDA

support are both enabled during measurement, the CUDA activities from

within OpenACC will be accounted separately (just like CUDA calls

within MPI and other metric hierarchies).

- Unit:

- Seconds

- Parent metric:

- Execution Time

- Sub-metrics:

-

OpenACC Initialization/Finalization Time

OpenACC Memory Management Time

OpenACC Synchronization Time

OpenACC Kernel Launch Time

OpenACC Initialization/Finalization Time

(only available after remapping)

- Description:

-

Time needed to initialize and finalize OpenACC and OpenACC kernels.

- Unit:

- Seconds

- Parent metric:

- OpenACC Time

- Sub-metrics:

-

None

OpenACC Memory Management Time

(only available after remapping)

- Description:

-

Time spent on memory management including data transfer from host to

device and vice versa.

- Unit:

- Seconds

- Parent metric:

- OpenACC Time

- Sub-metrics:

-

None

OpenACC Synchronization Time

(only available after remapping)

- Description:

-

Time spent on OpenACC synchronization.

- Unit:

- Seconds

- Parent metric:

- OpenACC Time

- Sub-metrics:

-

None

OpenACC Kernel Launch Time

(only available after remapping)

- Description:

-

Time spent to launch OpenACC kernels.

- Unit:

- Seconds

- Parent metric:

- OpenACC Time

- Sub-metrics:

-

None

OpenCL Kernel Time

(only available after remapping)

- Description:

-

Time spent executing OpenCL kernels.

- Unit:

- Seconds

- Parent metric:

- Computation Time

- Sub-metrics:

-

None

OpenCL Time

(only available after remapping)

- Description:

-

Time spent in the OpenCL run-time system, API and on device.

- Unit:

- Seconds

- Parent metric:

- Execution Time

- Sub-metrics:

-

OpenCL General Management Time

OpenCL Memory Management Time

OpenCL Synchronization Time

OpenCL Kernel Launch Time

OpenCL General Management Time

(only available after remapping)

- Description:

-

Time needed for general OpenCL setup, e.g., initialization, device and

event control, etc.

- Unit:

- Seconds

- Parent metric:

- OpenCL Time

- Sub-metrics:

-

None

OpenCL Memory Management Time

(only available after remapping)

- Description:

-

Time spent on memory management including data transfer from host to

device and vice versa.

- Unit:

- Seconds

- Parent metric:

- OpenCL Time

- Sub-metrics:

-

None

OpenCL Synchronization Time

(only available after remapping)

- Description:

-

Time spent on OpenCL synchronization.

- Unit:

- Seconds

- Parent metric:

- OpenCL Time

- Sub-metrics:

-

None

OpenCL Kernel Launch Time

(only available after remapping)

- Description:

-

Time spent to launch OpenCL kernels.

- Unit:

- Seconds

- Parent metric:

- OpenCL Time

- Sub-metrics:

-

None

CUDA Kernel Time

(only available after remapping)

- Description:

-

Time spent executing CUDA kernels.

- Unit:

- Seconds

- Parent metric:

- Computation Time

- Sub-metrics:

-

None

CUDA Time

(only available after remapping)

- Description:

-

Time spent in the CUDA run-time system, API calls and on device.

- Unit:

- Seconds

- Parent metric:

- Execution Time

- Sub-metrics:

-

CUDA General Management Time

CUDA Memory Management Time

CUDA Synchronization Time

CUDA Kernel Launch Time

CUDA General Management Time

(only available after remapping)

- Description:

-

Time needed for general CUDA setup, e.g., initialization, control of

version, device, primary context, context, streams, events, occupancy,

etc.

- Unit:

- Seconds

- Parent metric:

- CUDA Time

- Sub-metrics:

-

None

CUDA Memory Management Time

(only available after remapping)

- Description:

-

Time spent on memory management including data transfer from host to

device and vice versa. Note that "memset" operations are considered

in CUDA Kernel Launch Time.

- Unit:

- Seconds

- Parent metric:

- CUDA Time

- Sub-metrics:

-

None

CUDA Synchronization Time

(only available after remapping)

- Description:

-

Time spent on CUDA synchronization.

- Unit:

- Seconds

- Parent metric:

- CUDA Time

- Sub-metrics:

-

None

CUDA Kernel Launch Time

(only available after remapping)

- Description:

-

Time spent to launch CUDA kernels, including "memset" operations.

- Unit:

- Seconds

- Parent metric:

- CUDA Time

- Sub-metrics:

-

None

HIP Kernel Time

(only available after remapping)

- Description:

-

Time spent executing HIP kernels.

- Unit:

- Seconds

- Parent metric:

- Computation Time

- Sub-metrics:

-

None

HIP Time

(only available after remapping)

- Description:

-

Time spent in HIP API calls.

- Unit:

- Seconds

- Parent metric:

- Execution Time

- Sub-metrics:

-

HIP Stream Management Time

HIP Memory Allocation Time

HIP Memory Transfer Time

HIP Synchronization Time

HIP Kernel Launch Time

HIP Stream Management Time

(only available after remapping)

- Description:

-

Time needed for HIP stream management.

- Unit:

- Seconds

- Parent metric:

- HIP Time

- Sub-metrics:

-

None

HIP Memory Allocation Time

(only available after remapping)

- Description:

-

Time needed for HIP memory allocations.

- Unit:

- Seconds

- Parent metric:

- HIP Time

- Sub-metrics:

-

None

HIP Memory Transfer Time

(only available after remapping)

- Description:

-

Time needed for HIP memory transfers.

- Unit:

- Seconds

- Parent metric:

- HIP Time

- Sub-metrics:

-

None

HIP Synchronization Time

(only available after remapping)

- Description:

-

Time spent on HIP synchronization.

- Unit:

- Seconds

- Parent metric:

- HIP Time

- Sub-metrics:

-

None

HIP Kernel Launch Time

(only available after remapping)

- Description:

-

Time spent to launch HIP kernels.

- Unit:

- Seconds

- Parent metric:

- HIP Time

- Sub-metrics:

-

None

MPI Synchronization Operations

(only available after remapping)

- Description:

-

Provides the total number of MPI synchronization operations

that were executed. This not only includes barrier calls, but also

communication operations which transfer no data (i.e., zero-sized

messages are considered to be used for coordination).

- Unit:

- Counts

- Parent metric:

- None

- Sub-metrics:

-

MPI Point-to-point Synchronization Operations

MPI Collective Synchronizations

MPI One-sided Synchronization Operations

MPI Point-to-point Synchronization Operations

(only available after remapping)

- Description:

-

Total number of MPI point-to-point synchronization operations, i.e.,

point-to-point transfers of zero-sized messages used for coordination.

- Unit:

- Counts

- Diagnosis:

-

Locate the most costly synchronizations and determine whether they are

necessary to ensure correctness or could be safely removed (based on

algorithm analysis).

- Parent metric:

- MPI Synchronization Operations

- Sub-metrics:

-

MPI Point-to-point Send Synchronization Operations

MPI Point-to-point Receive Synchronization Operations

MPI Point-to-point Send Synchronization Operations

- Description:

-

Number of MPI point-to-point synchronization operations sending

a zero-sized message.

- Unit:

- Counts

- Parent metric:

- MPI Point-to-point Synchronization Operations

- Sub-metrics:

-

MPI Late Receiver Instances (Synchronizations)

MPI Point-to-point Receive Synchronization Operations

- Description:

-

Number of MPI point-to-point synchronization operations receiving

a zero-sized message.

- Unit:

- Counts

- Parent metric:

- MPI Point-to-point Synchronization Operations

- Sub-metrics:

-

MPI Late Sender Instances (Synchronizations)

MPI Collective Synchronizations

- Description:

-

The number of MPI collective synchronization operations. This

includes not only barrier calls, but also calls to collective

communication operations that are neither sending nor receiving any

data. Each process participating in the operation is counted, as

defined by the associated MPI communicator.

- Unit:

- Counts

- Diagnosis:

-

Locate synchronizations with the largest MPI Collective Synchronization Time and

determine whether they are necessary to ensure correctness or could be

safely removed (based on algorithm analysis). Collective communication

operations that neither send nor receive data, yet are required for

synchronization, can be replaced with the more efficient

MPI_Barrier.

- Parent metric:

- MPI Synchronization Operations

- Sub-metrics:

-

None

MPI One-sided Synchronization Operations

(only available after remapping)

- Description:

-

Total number of MPI one-sided synchronization operations.

- Unit:

- Counts

- Parent metric:

- MPI Synchronization Operations

- Sub-metrics:

-

MPI One-sided Active Target Synchronization Operations

MPI One-sided Passive Target Synchronization Operations

MPI One-sided Active Target Synchronization Operations

(only available after remapping)

- Description:

-

Total number of MPI one-sided active target synchronization operations.

- Unit:

- Counts

- Parent metric:

- MPI One-sided Synchronization Operations

- Sub-metrics:

-

None

MPI One-sided Passive Target Synchronization Operations

(only available after remapping)

- Description:

-

Total number of MPI one-sided passive target synchronization operations.

- Unit:

- Counts

- Parent metric:

- MPI One-sided Synchronization Operations

- Sub-metrics:

-

None

MPI Communication Operations

(only available after remapping)

- Description:

-

Total number of MPI communication operations, excluding calls transferring

no payload data (which are considered MPI Synchronization Operations).

- Unit:

- Counts

- Parent metric:

- None

- Sub-metrics:

-

MPI Point-to-point Communication Operations

MPI Collective Communications

MPI One-sided Communication Operations

MPI Point-to-point Communication Operations

(only available after remapping)

- Description:

-

Total number of MPI point-to-point communication operations, excluding

calls transferring zero-sized messages.

- Unit:

- Counts

- Parent metric:

- MPI Communication Operations

- Sub-metrics:

-

MPI Point-to-point Send Communication Operations

MPI Point-to-point Receive Communication Operations

MPI Point-to-point Send Communication Operations

- Description:

-

Number of MPI point-to-point send operations, excluding calls transferring

zero-sized messages.

- Unit:

- Counts

- Parent metric:

- MPI Point-to-point Communication Operations

- Sub-metrics:

-

MPI Late Receiver Instances (Communications)

MPI Point-to-point Receive Communication Operations

- Description:

-

Number of MPI point-to-point receive operations, excluding calls

transferring zero-sized messages.

- Unit:

- Counts

- Parent metric:

- MPI Point-to-point Communication Operations

- Sub-metrics:

-

MPI Late Sender Instances (Communications)

MPI Collective Communications

(only available after remapping)

- Description:

-

The number of MPI collective communication operations, excluding

calls neither sending nor receiving any data. Each process participating

in the operation is counted, as defined by the associated MPI communicator.

- Unit:

- Counts

- Diagnosis:

-

Locate operations with the largest MPI Collective Communication Time and compare

MPI Collective Bytes Transferred. Where multiple collective operations of the same type

are used in series with single values or small payloads, aggregation may

be beneficial in amortizing transfer overhead.

- Parent metric:

- MPI Communication Operations

- Sub-metrics:

-

MPI Collective Exchange Communications

MPI Collective Communications as Source

MPI Collective Communications as Destination

MPI Collective Exchange Communications

- Description:

-

The number of MPI collective communication operations which are both

sending and receiving data. In addition to all-to-all and scan operations,

root processes of certain collectives transfer data from their source to

destination buffer.

- Unit:

- Counts

- Parent metric:

- MPI Collective Communications

- Sub-metrics:

-

None

MPI Collective Communications as Source

- Description:

-

The number of MPI collective communication operations that are

only sending but not receiving data. Examples are the non-root

processes in gather and reduction operations.

- Unit:

- Counts

- Parent metric:

- MPI Collective Communications

- Sub-metrics:

-

None

MPI Collective Communications as Destination

- Description:

-

The number of MPI collective communication operations that are

only receiving but not sending data. Examples are broadcasts

and scatters (for ranks other than the root).

- Unit:

- Counts

- Parent metric:

- MPI Collective Communications

- Sub-metrics:

-

None
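
The distinction between collective synchronizations and the three classes of collective communications can be summarized as a decision on the bytes a process sends and receives in one operation. The function below is a sketch of that classification as described in the text; `classify_collective` and its return labels are illustrative names, not the analyzer's actual implementation.

```python
def classify_collective(bytes_sent: int, bytes_received: int) -> str:
    """Classify one process's participation in a collective operation."""
    if bytes_sent == 0 and bytes_received == 0:
        return "synchronization"  # neither sends nor receives data
    if bytes_sent > 0 and bytes_received > 0:
        return "exchange"         # both sends and receives data
    return "source" if bytes_sent > 0 else "destination"


print(classify_collective(0, 0))      # synchronization (e.g., a barrier)
print(classify_collective(1024, 0))   # source (e.g., non-root in a gather)
print(classify_collective(0, 1024))   # destination (e.g., non-root in a broadcast)
print(classify_collective(512, 512))  # exchange (e.g., an all-to-all)
```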

MPI One-sided Communication Operations

(only available after remapping)

- Description:

-

Total number of MPI one-sided communication operations.

- Unit:

- Counts

- Parent metric:

- MPI Communication Operations

- Sub-metrics:

-

MPI One-sided Put Communication Operations

MPI One-sided Get Communication Operations

MPI One-sided Atomic Communication Operations

MPI One-sided Put Communication Operations

(only available after remapping)

- Description:

-

Total number of MPI one-sided put communication operations.

- Unit:

- Counts

- Parent metric:

- MPI One-sided Communication Operations

- Sub-metrics:

-

None

MPI One-sided Get Communication Operations

(only available after remapping)

- Description:

-

Total number of MPI one-sided get communication operations.

- Unit:

- Counts

- Parent metric:

- MPI One-sided Communication Operations

- Sub-metrics:

-

None

MPI One-sided Atomic Communication Operations

(only available after remapping)

- Description:

-

Total number of MPI one-sided atomic communication operations.

- Unit:

- Counts

- Parent metric:

- MPI One-sided Communication Operations

- Sub-metrics:

-

None

MPI Bytes Transferred

(only available after remapping)

- Description:

-

The total number of bytes that were notionally processed in MPI

communication and synchronization operations (i.e., the sum of the bytes

that were sent and received). Note that the actual number of bytes

transferred is typically not determinable, as this is dependent on the MPI

internal implementation, including message transfer and failed delivery

recovery protocols.

- Unit:

- Bytes

- Diagnosis:

-

Expand the metric tree to break down the bytes transferred into

constituent classes. Expand the call tree to identify where most data

is transferred and examine the distribution of data transferred by each

process.

- Parent metric:

- None

- Sub-metrics:

-

MPI Point-to-point Bytes Transferred

MPI Collective Bytes Transferred

MPI One-sided Bytes Transferred
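
To illustrate the breakdown into constituent classes described in the diagnosis above, the following minimal sketch computes each class's share of the summed byte count. The function name `class_shares` and the byte counts are hypothetical values chosen for illustration; they are not measurements and not part of any tool's interface.

```python
def class_shares(bytes_by_class):
    """Return each class's fraction of the summed byte count."""
    total = sum(bytes_by_class.values())
    if total == 0:
        # No data transferred at all: every share is zero.
        return {name: 0.0 for name in bytes_by_class}
    return {name: count / total for name, count in bytes_by_class.items()}


# Hypothetical byte counts as read off the metric tree (not real measurements).
example = {
    "point-to-point": 6_000_000,
    "collective": 3_000_000,
    "one-sided": 1_000_000,
}
shares = class_shares(example)
```

A class with a dominant share is usually the first candidate to expand in the call tree.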

MPI Point-to-point Bytes Transferred

(only available after remapping)

- Description:

-

The total number of bytes that were notionally processed by

MPI point-to-point communication operations.

- Unit:

- Bytes

- Diagnosis:

-

Expand the call tree to identify where the most data is transferred

using point-to-point communication and examine the distribution of data

transferred by each process. Compare with the number of MPI Point-to-point Communication Operations

and resulting MPI Point-to-point Communication Time.

-

Average message size can be determined by dividing the bytes transferred by the number of

MPI Point-to-point Communication Operations (for all call paths or for particular call paths or

communication operations). Instead of large numbers of small

communications streamed to the same destination, it may be more

efficient to pack data into fewer larger messages (e.g., using MPI

datatypes). Very large messages may require a rendezvous between

sender and receiver to ensure sufficient transmission and receipt

capacity before sending commences: try splitting large messages into

smaller ones that can be transferred asynchronously and overlapped with

computation. (Some MPI implementations allow tuning of the rendezvous

threshold and/or transmission capacity, e.g., via environment

variables.)

- Parent metric:

- MPI Bytes Transferred

- Sub-metrics:

-

MPI Point-to-point Bytes Sent

MPI Point-to-point Bytes Received
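
The average message size calculation from the diagnosis above can be sketched as follows. The call path names, byte counts, operation counts, and the `SMALL_MESSAGE_BYTES` threshold are all assumptions made for illustration; a suitable threshold depends on the MPI implementation and the network.

```python
def average_message_size(bytes_transferred, operations):
    """Average bytes per operation, or None when no operations were recorded."""
    if operations == 0:
        return None
    return bytes_transferred / operations


# Hypothetical per-call-path values: (bytes transferred, number of operations).
call_paths = {
    "main/exchange_halo": (4_096_000, 64_000),
    "main/write_checkpoint": (512_000_000, 8),
}

averages = {
    path: average_message_size(nbytes, nops)
    for path, (nbytes, nops) in call_paths.items()
}

# Assumed threshold below which messages count as "small" in this sketch.
SMALL_MESSAGE_BYTES = 1024

# Call paths that send many small messages are candidates for packing data
# into fewer, larger messages.
small_message_paths = [
    path
    for path, avg in averages.items()
    if avg is not None and avg < SMALL_MESSAGE_BYTES
]
```

In this made-up data set, the halo exchange averages 64 bytes per message and would be flagged for aggregation, while the checkpoint path averages 64 MB per message and might instead benefit from splitting.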

MPI Point-to-point Bytes Sent

- Description:

-

The number of bytes that were notionally sent using MPI

point-to-point communication operations.

- Unit:

- Bytes

- Diagnosis:

-

Expand the call tree to see where the most data is sent using

point-to-point communication operations and examine the distribution of

data sent by each process. Compare with the number of MPI Point-to-point Send Communication Operations

and resulting MPI Point-to-point Communication Time.

-

If the aggregate MPI Point-to-point Bytes Received is less than the amount

sent, some messages were cancelled, received into buffers which were

too small, or simply not received at all. (Generally only aggregate

values can be compared, since sends and receives take place on

different call paths and on different processes.) Sending more data than

is received wastes network bandwidth. Applications do not conform to

the MPI standard when they do not receive all messages that are sent,

and the unreceived messages degrade performance by consuming network

bandwidth and/or occupying message buffers. Cancelling send operations

is typically expensive, since it usually generates one or more internal

messages.

- Parent metric:

- MPI Point-to-point Bytes Transferred

- Sub-metrics:

-

None
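
The aggregate comparison of bytes sent and bytes received described above can be sketched as follows. The per-process byte counts are hypothetical, and `unreceived_bytes` is an illustrative helper, not a function of any analysis tool.

```python
def unreceived_bytes(sent_per_process, received_per_process):
    """Aggregate bytes sent minus aggregate bytes received.

    Only the totals are compared, because sends and receives occur on
    different call paths and on different processes.
    """
    return sum(sent_per_process) - sum(received_per_process)


# Hypothetical byte counts per process (not real measurements).
sent = [1000, 2000, 1500, 500]
received = [900, 2000, 1500, 400]

gap = unreceived_bytes(sent, received)
```

A positive gap indicates that some of the data sent was cancelled, truncated, or never received.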

MPI Point-to-point Bytes Received

- Description:

-

The number of bytes that were notionally received using MPI

point-to-point communication operations.

- Unit:

- Bytes

- Diagnosis:

-

Expand the call tree to see where the most data is received using

point-to-point communication and examine the distribution of data

received by each process. Compare with the number of MPI Point-to-point Receive Communication Operations

and resulting MPI Point-to-point Communication Time.

-

If the aggregate MPI Point-to-point Bytes Sent is greater than the amount

received, some messages were cancelled, received into buffers which

were too small, or simply not received at all. (Generally only

aggregate values can be compared, since sends and receives take place

on different call paths and on different processes.) Applications do

not conform to the MPI standard when they do not receive all messages

that are sent, and the unreceived messages degrade performance by

consuming network bandwidth and/or occupying message buffers.

Cancelling receive operations may be necessary where speculative

asynchronous receives are employed; however, managing the associated

requests also involves some overhead.

- Parent metric:

- MPI Point-to-point Bytes Transferred

- Sub-metrics:

-

None
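
One simple way to summarize the distribution of data received by each process is the ratio of the largest per-process value to the mean. This is only a sketch under assumed data: the ratio is an illustrative choice of summary, not a metric defined in this document, and the byte counts are hypothetical.

```python
from statistics import mean


def imbalance_ratio(bytes_per_process):
    """Ratio of the maximum to the mean; 1.0 means perfectly even."""
    if not bytes_per_process:
        raise ValueError("need at least one process")
    avg = mean(bytes_per_process)
    if avg == 0:
        # Nothing was received anywhere, so there is nothing to balance.
        return 1.0
    return max(bytes_per_process) / avg


# Hypothetical bytes received per process (not real measurements).
received = [4000, 1000, 1000, 2000]
ratio = imbalance_ratio(received)
```

A ratio well above 1.0 suggests that a few processes receive most of the data and may be worth examining individually.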

MPI Collective Bytes Transferred

(only available after remapping)

- Description:

-

The total number of bytes that were notionally processed in

MPI collective communication operations. This assumes that collective

communications are implemented naively using point-to-point

communications, e.g., a broadcast being implemented as sends to each

member of the communicator (including the root itself). Note that

effective MPI implementations use optimized algorithms and/or special

hardware, such that the actual number of bytes transferred may be very

different.

- Unit:

- Bytes

- Diagnosis:

-

Expand the call tree to see where the most data is transferred using